Hi there,

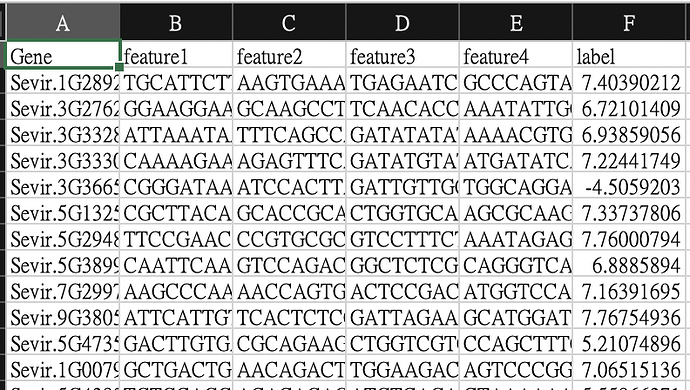

I am new to deep learning and PyTorch, and I am trying to build a simple CNN model for a regression task on DNA sequence inputs.

Here is my CNN model:

    # Build CNN
    class CNN(nn.Module):
        def __init__(self):
            super().__init__()
            self.CNN_conv_layer1 = nn.Conv2d(in_channels=8, out_channels=32, kernel_size=1, stride=2)
            #self.CNN_conv_layer2 = nn.Conv2d(16, 32, 1, 2)
            self.CNN_max_pool1 = nn.MaxPool2d(2, 2)
            self.CNN_conv_layer3 = nn.Conv2d(32, 64, 1, 2)
            #self.CNN_conv_layer4 = nn.Conv2d(64, 128, 1, 2)
            #self.CNN_max_pool2 = nn.MaxPool2d(2, 2)

            # flatten and batch normalization
            #self.CNN_flatten = torch.flatten()
            self.CNN_BatchNorm = nn.BatchNorm1d(525, momentum=0.5)  #263->525
            self.CNN_fc1 = nn.Linear(525, 1)  #263->525
            #self.CNN_relu1 = nn.ReLU()
            self.CNN_relu1 = nn.LeakyReLU()
            self.CNN_fc2 = nn.Linear(1, 1)

        # pass data through CNN
        def forward(self, x):
            output = self.CNN_conv_layer1(x)
            #output = self.CNN_conv_layer2(output)
            output = self.CNN_max_pool1(output)
            output = self.CNN_conv_layer3(output)
            #output = self.CNN_conv_layer4(output)
            #output = self.CNN_max_pool2(output)
            #output = output.reshape(#reshape size, how to define the size to be compatible with next net)
            #output = self.CNN_flatten(output, 1)#
            output = self.CNN_BatchNorm(output)
            output = output.view(output.size(0), -1)
            #print(output.size)
            output = self.CNN_fc1(output)
            output = self.CNN_relu1(output)
            output = self.CNN_fc2(output)
            return output

And here is my training loop:

    def train(net, dataloader, epochs=200, lr=0.0001, momentum=0.9, decay=0.0, verbose=1):
        ''' Trains a neural network. Returns a 2d numpy array, where every list
        represents the losses per epoch.
        '''
        losses_per_epoch = []
        criterion = nn.MSELoss()
        optimizer = optim.SGD(net.parameters(), lr=lr, weight_decay=decay)
        for epoch in range(epochs):
            #print(epoch)
            sum_loss = 0.0
            losses = []
            for i, data in enumerate(train_dataloader, 0):
                # get the inputs; data is a list of [inputs, labels]
                #inputs, labels = data[0].to(device), data[1].to(device)
                inputs, labels = data
                inputs, labels = inputs.to(device), labels.to(device)

                # zero the parameter gradients
                optimizer.zero_grad()

                # forward + backward + optimize
                outputs = net(inputs)
                loss = criterion(outputs, labels)
                #loss = criterion(outputs, labels.float().unsqueeze(1))
                loss.backward()
                optimizer.step()

                # print statistics
                losses.append(loss.item())
                sum_loss += loss.item()
                if i % 100 == 99:  # print every 100 mini-batches
                    if verbose:
                        print('[%d, %5d] train loss: %.3f' % (epoch + 1, i + 1, sum_loss / 20))
                    sum_loss = 0.0
            # print(len(losses))
            losses_per_epoch.append(np.mean(losses))
        return losses_per_epoch

    train(net=net, dataloader=train_dataloader)
    print(f"Using {device} device")

I keep getting the following warning, followed by an error:

    /usr/local/lib/python3.7/dist-packages/torch/nn/modules/loss.py:529: UserWarning: Using a target size (torch.Size([8])) that is different to the input size (torch.Size([64, 1])). This will likely lead to incorrect results due to broadcasting. Please ensure they have the same size.
      return F.mse_loss(input, target, reduction=self.reduction)
    [1, 100] train loss: 57.159
    [1, 200] train loss: 57.104
    [1, 300] train loss: 56.298
    [1, 400] train loss: 61.532
    [1, 500] train loss: 59.678
    [1, 600] train loss: 57.460

    RuntimeError                              Traceback (most recent call last)
    in ()
         37     return losses_per_epoch
         38
    ---> 39 train(net=net, dataloader=train_dataloader)
         40 print(f"Using {device} device")

    5 frames
    /usr/local/lib/python3.7/dist-packages/torch/nn/modules/conv.py in _conv_forward(self, input, weight, bias)
        442                             _pair(0), self.dilation, self.groups)
        443         return F.conv2d(input, weight, bias, self.stride,
    --> 444                         self.padding, self.dilation, self.groups)
        445
        446     def forward(self, input: Tensor) -> Tensor:

    RuntimeError: Given groups=1, weight of size [32, 8, 1, 1], expected input[1, 7, 4200, 4] to have 8 channels, but got 7 channels instead
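
To make the `UserWarning` above more concrete, here is a minimal sketch of what broadcasting does inside `nn.MSELoss` when the two sizes from the warning meet. The tensors are dummy stand-ins (an assumption, not my real data), and `reduction="none"` is used only so that the broadcast shape stays visible:

```python
import warnings

import torch
import torch.nn as nn

# Dummy tensors with the sizes quoted in the warning (stand-ins, not real data)
outputs = torch.zeros(64, 1)  # "input size" torch.Size([64, 1])
labels = torch.ones(8)        # "target size" torch.Size([8])

with warnings.catch_warnings():
    # Silence the same UserWarning so the sketch prints only the shape
    warnings.simplefilter("ignore")
    # reduction="none" returns the element-wise losses instead of their mean
    elementwise = nn.MSELoss(reduction="none")(outputs, labels)

print(elementwise.shape)  # torch.Size([64, 8])
```

So every one of the 64 outputs is compared against every one of the 8 labels, which is probably why the printed loss values are not meaningful.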

When I change the input channels to 7, I get a different error:

RuntimeError: Given groups=1, weight of size [32, 7, 1, 1], expected input[1, 8, 4200, 4] to have 7 channels, but got 8 channels instead
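
For reference, a small sketch that traces tensor shapes through the convolution and pooling layers, using the layer settings from the model above. The dummy input simply copies the shape printed in the error message, `[1, 8, 4200, 4]`; that the 8 (or 7) in the channel position is really the number of sequences in a mini-batch is my guess, not something I have confirmed:

```python
import torch
import torch.nn as nn

# Same layer settings as in the model above
conv1 = nn.Conv2d(in_channels=8, out_channels=32, kernel_size=1, stride=2)
pool1 = nn.MaxPool2d(2, 2)
conv3 = nn.Conv2d(32, 64, 1, 2)

# Assumption: dummy input with the shape printed in the error message
x = torch.zeros(1, 8, 4200, 4)

shapes = [tuple(x.shape)]
with torch.no_grad():
    for layer in (conv1, pool1, conv3):
        x = layer(x)
        shapes.append(tuple(x.shape))

for s in shapes:
    print(s)
# (1, 8, 4200, 4)
# (1, 32, 2100, 2)
# (1, 32, 1050, 1)
# (1, 64, 525, 1)
```

The 525 matches the size used for `nn.BatchNorm1d(525)` and `nn.Linear(525, 1)`, and the 64 matches the first dimension of the `[64, 1]` output in the warning. If the channel axis really holds the batch, a final mini-batch of 7 sequences would explain why the error flips between 7 and 8, but that is only a hypothesis.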

I am totally confused and have no clue how to fix it.

Could anyone help me with this?

Thank you!!!

Best regards,

Chao