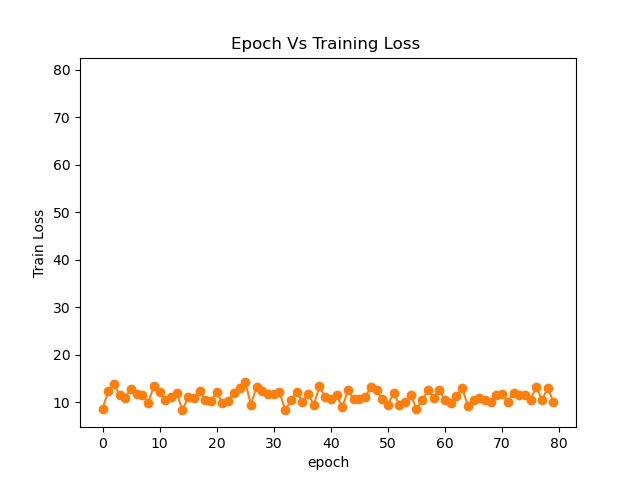

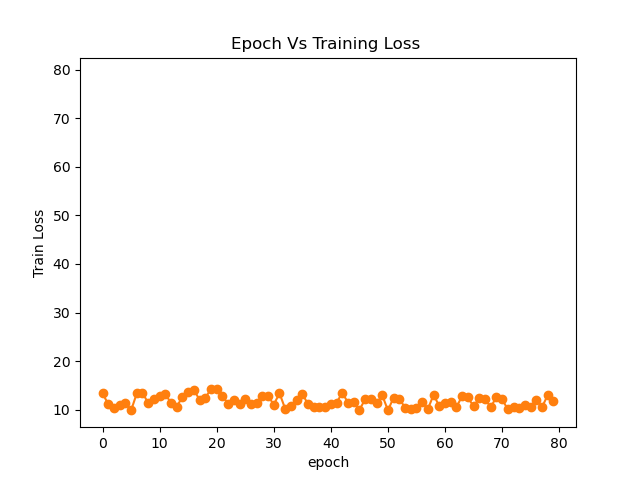

My CNN-based deep learning model's validation accuracy fluctuates at certain epochs. I trained the model on frequency-domain images. Do I need to normalize these `ifft2` frequency images or not? Also, the training loss does not decrease; it stays flat. My training dataset has 500 images.
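Input normalization usually helps CNN training, and raw frequency-domain magnitudes have a very large dynamic range (the zero-frequency term alone can dwarf everything else). One common way to prepare such inputs is sketched below, assuming each image is a 2-D grayscale NumPy array: take the 2-D FFT, shift the zero frequency to the centre, compress the magnitude with a log, then standardize each image to zero mean and unit variance. The helper name `to_normalized_log_spectrum` is hypothetical, and this is not necessarily how the original images were produced. If you normalize with dataset-level statistics instead, compute them on the training set only.

```python
import numpy as np


def to_normalized_log_spectrum(image: np.ndarray, eps: float = 1e-8) -> np.ndarray:
    """Hypothetical helper: log-magnitude spectrum of a 2-D image, standardized per image."""
    # 2-D FFT, then move the zero-frequency component to the centre of the array
    spectrum = np.fft.fftshift(np.fft.fft2(image.astype(np.float64)))
    # FFT magnitudes span many orders of magnitude; log1p compresses that range
    log_mag = np.log1p(np.abs(spectrum))
    # Per-image standardization: zero mean, unit variance (eps avoids division by zero)
    return (log_mag - log_mag.mean()) / (log_mag.std() + eps)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    x = to_normalized_log_spectrum(rng.random((64, 64)))
    print(x.shape, float(x.mean()), float(x.std()))
```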

I trained for 80 epochs, but the training loss and validation accuracy stop changing at around epoch 20, with no improvement after that. I have already tried tuning hyperparameters such as the learning rate and batch size, and I also added regularization such as weight decay.

Do you have a specific question? It’s normal for any metric to fluctuate from epoch to epoch, and the extent of the fluctuation is much greater when the validation set is small (as is yours).
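To put a number on the small-validation-set effect: with n validation images, a single image flipping between right and wrong moves accuracy by 1/n, and if accuracy is treated as a binomial proportion p, its standard error is sqrt(p(1 - p) / n). A small sketch (the helper name is hypothetical):

```python
import math


def accuracy_std_error(p: float, n: int) -> float:
    """Binomial standard error of an accuracy estimate p measured on n samples."""
    if n <= 0 or not 0.0 <= p <= 1.0:
        raise ValueError("need n > 0 and 0 <= p <= 1")
    return math.sqrt(p * (1.0 - p) / n)


if __name__ == "__main__":
    for n in (12, 100, 167, 1000):
        se = accuracy_std_error(0.8, n)
        # One image flipping changes accuracy by 1/n
        print(f"n={n:5d}  step per image={1 / n:.3f}  std error at p=0.8: {se:.3f}")
```

For example, at 80% accuracy on 100 images the standard error is about 0.04, so epoch-to-epoch swings of a few percentage points can come from the small sample alone.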

It’s entirely possible, given such a small dataset, that validation loss plateaus after 20 epochs.
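When validation loss plateaus this early, a common practice is to keep the best checkpoint and stop once the validation loss has not improved for a set number of epochs (early stopping). Below is a framework-agnostic sketch; the class name and the `patience`/`min_delta` parameters are hypothetical choices, not any particular library's API, and the loss curve in the demo is synthetic.

```python
class EarlyStopping:
    """Stop when validation loss has not improved by at least min_delta for `patience` epochs."""

    def __init__(self, patience: int = 10, min_delta: float = 0.0):
        self.patience = patience
        self.min_delta = min_delta
        self.best_loss = float("inf")
        self.best_epoch = -1
        self.epochs_without_improvement = 0

    def step(self, epoch: int, val_loss: float) -> bool:
        """Record one epoch's validation loss; return True if training should stop."""
        if val_loss < self.best_loss - self.min_delta:
            # Improvement: remember it (this is where you would save a checkpoint)
            self.best_loss = val_loss
            self.best_epoch = epoch
            self.epochs_without_improvement = 0
        else:
            self.epochs_without_improvement += 1
        return self.epochs_without_improvement >= self.patience


if __name__ == "__main__":
    # Synthetic loss curve: improves for 20 epochs, then stays flat
    losses = [1.0 / (e + 1) for e in range(20)] + [0.05] * 60
    stopper = EarlyStopping(patience=10, min_delta=1e-4)
    for epoch, loss in enumerate(losses):
        if stopper.step(epoch, loss):
            print(f"stopped at epoch {epoch}, best epoch {stopper.best_epoch}")
            break
```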

If you only have 500 images, try fine-tuning a pre-trained model. Training a model from scratch on so little data is a bad idea.

I am working on image denoising research. My training dataset has 500 images and my test dataset has 12 images, which I think is normal for image denoising research. My training loss does not decrease.
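For denoising, training typically minimizes a pixel-wise loss (commonly MSE or L1) between the denoised output and the clean image, and results are usually reported as PSNR rather than classification accuracy. A NumPy sketch of PSNR, assuming images scaled to [0, 1] so the peak value is 1.0; the function name is hypothetical and the noisy image in the demo is synthetic:

```python
import numpy as np


def psnr(clean: np.ndarray, restored: np.ndarray, data_range: float = 1.0) -> float:
    """Peak signal-to-noise ratio in dB: 10 * log10(data_range**2 / MSE)."""
    clean = clean.astype(np.float64)
    restored = restored.astype(np.float64)
    mse = np.mean((clean - restored) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(data_range ** 2 / mse))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    clean = rng.random((64, 64))
    # Synthetic Gaussian noise, clipped back into the [0, 1] range
    noisy = np.clip(clean + rng.normal(0.0, 0.1, clean.shape), 0.0, 1.0)
    print(f"PSNR of noisy input: {psnr(clean, noisy):.2f} dB")
```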

I also tried splitting the data into 2/3 for training and 1/3 for validation. However, the training loss still does not decrease.
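A reproducible 2/3 to 1/3 split of 500 image indices can be done with a seeded permutation, which matters if the images are stored in some order (for example grouped by scene). A minimal sketch; the function name and seed are arbitrary choices:

```python
import numpy as np


def train_val_split(n_samples: int, train_frac: float = 2 / 3, seed: int = 0):
    """Shuffle indices 0..n_samples-1 with a fixed seed and split them into train/val."""
    rng = np.random.default_rng(seed)
    indices = rng.permutation(n_samples)
    n_train = int(round(n_samples * train_frac))
    return indices[:n_train], indices[n_train:]


if __name__ == "__main__":
    train_idx, val_idx = train_val_split(500)
    print(len(train_idx), len(val_idx))  # 333 167
```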