Dear All,

I created a minimal example here to reproduce the problem I am facing.

I have a dataset with 21 features (i.e. covariates) and 108405 rows:

```
torch.Size([108405, 21])
```

I would like to use a CNN for this problem, and I have the following architecture:

```
Net2 (
  (l1): Sequential (
    (0): Linear (21 -> 126)
    (1): Dropout (p = 0.15)
    (2): LeakyReLU (0.1)
    (3): BatchNorm1d(126, eps=1e-05, momentum=0.1, affine=True)
  )
  (c1): Sequential (
    (0): Conv1d(21, 126, kernel_size=(3,), stride=(1,), padding=(1,))
    (1): Dropout (p = 0.25)
    (2): LeakyReLU (0.1)
    (3): BatchNorm1d(126, eps=1e-05, momentum=0.1, affine=True)
  )
  (out): Sequential (
    (0): Linear (756 -> 1)
  )
  (sig): Sigmoid ()
)
```
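The `756` inputs of the final `Linear` layer follow from the output-length formula documented for `torch.nn.Conv1d`: L_out = floor((L_in + 2*padding - dilation*(kernel_size - 1) - 1) / stride) + 1. As a quick check, here is that arithmetic in plain Python; `conv1d_out_len` is a hypothetical helper name, not a PyTorch function.

```python
def conv1d_out_len(l_in, kernel_size, stride=1, padding=0, dilation=1):
    """Output length of a 1-D convolution, using the formula from the torch.nn.Conv1d docs."""
    return (l_in + 2 * padding - dilation * (kernel_size - 1) - 1) // stride + 1

# The Linear(21 -> 126) output is viewed as 21 channels x 6 positions before c1
l_out = conv1d_out_len(6, kernel_size=3, stride=1, padding=1)
print(l_out)        # 6: padding=1 with kernel_size=3 preserves the length
print(126 * l_out)  # 756: in_features of the final Linear layer
```

With stride=1 and padding=1 the formula reduces to L_in - kernel_size + 3, which is the factor the full network uses for `n_l2_hidden`.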

Now I would like to eliminate the first Linear (21 -> 126) layer. The only reason it is there is that I was unable to shape my input correctly to feed it directly into the Conv1d layer that follows.

Is this possible? How can I reshape my input tensor (`X_tensor_train`) so that it can be fed directly into the Conv1d layer?
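A minimal sketch of the two usual ways to make a 2-D (N, features) tensor acceptable to `nn.Conv1d`, which expects a 3-D input of shape (N, C_in, L). It assumes a small random stand-in for `X_tensor_train` and uses `unsqueeze` to add the missing axis; the channel count and kernel sizes are illustrative only.

```python
import torch
import torch.nn as nn

n_rows, n_features = 8, 21           # small stand-in for the (108405, 21) training tensor
x = torch.randn(n_rows, n_features)

# Option 1: one input channel, the 21 features laid out along the length axis -> (N, 1, 21)
x_seq = x.unsqueeze(1)
conv_seq = nn.Conv1d(in_channels=1, out_channels=126, kernel_size=3, padding=1)
print(conv_seq(x_seq).shape)         # torch.Size([8, 126, 21])

# Option 2: 21 input channels, each a "sequence" of length 1 -> (N, 21, 1)
x_chan = x.unsqueeze(2)
conv_chan = nn.Conv1d(in_channels=21, out_channels=126, kernel_size=1)
print(conv_chan(x_chan).shape)       # torch.Size([8, 126, 1])
```

Option 2 with kernel_size=1 computes the same kind of map as Linear(21 -> 126), so it does not really convolve over anything. Option 1 slides the kernel across neighbouring features, which only makes sense if the column order of the features carries some meaning.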

This is the full network:

# References:

# https://github.com/vinhkhuc/PyTorch-Mini-Tutorials/blob/master/5_convolutional_net.py

# https://gist.github.com/spro/c87cc706625b8a54e604fb1024106556

X_tensor_train= XnumpyToTensor(trainX) # default order is NBC for a 3d tensor, but we have a 2d tensor

X_shape=X_tensor_train.data.size()

# Dimensions

# Number of features for the input layer

N_FEATURES=trainX.shape[1]

# Number of rows

NUM_ROWS_TRAINNING=trainX.shape[0]

# this number has no meaning except for being divisable by 2

N_MULT_FACTOR=6 # min should be 4

# Size of first linear layer

N_HIDDEN=N_FEATURES * N_MULT_FACTOR

# CNN kernel size

N_CNN_KERNEL=3

DEBUG_ON=True

def debug(msg, x):

if DEBUG_ON:

print (msg + ', (size():' + str (x.size()))

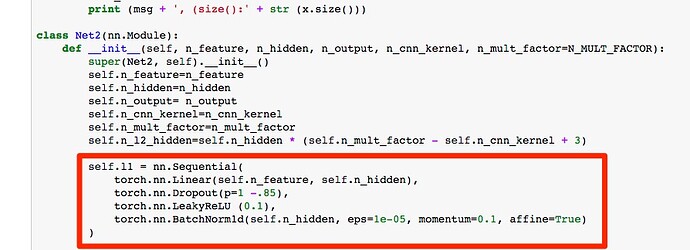

class Net2(nn.Module):

def __init__(self, n_feature, n_hidden, n_output, n_cnn_kernel, n_mult_factor=N_MULT_FACTOR):

super(Net2, self).__init__()

self.n_feature=n_feature

self.n_hidden=n_hidden

self.n_output= n_output

self.n_cnn_kernel=n_cnn_kernel

self.n_mult_factor=n_mult_factor

self.n_l2_hidden=self.n_hidden * (self.n_mult_factor - self.n_cnn_kernel + 3)

self.l1 = nn.Sequential(

torch.nn.Linear(self.n_feature, self.n_hidden),

torch.nn.Dropout(p=1 -.85),

torch.nn.LeakyReLU (0.1),

torch.nn.BatchNorm1d(self.n_hidden, eps=1e-05, momentum=0.1, affine=True)

)

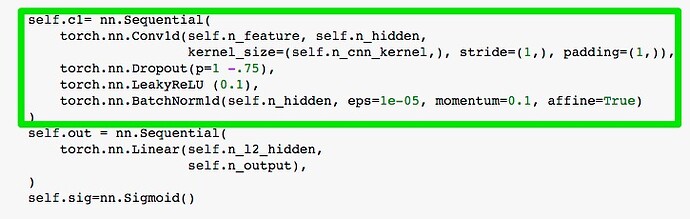

self.c1= nn.Sequential(

torch.nn.Conv1d(self.n_feature, self.n_hidden,

kernel_size=(self.n_cnn_kernel,), stride=(1,), padding=(1,)),

torch.nn.Dropout(p=1 -.75),

torch.nn.LeakyReLU (0.1),

torch.nn.BatchNorm1d(self.n_hidden, eps=1e-05, momentum=0.1, affine=True)

)

self.out = nn.Sequential(

torch.nn.Linear(self.n_l2_hidden,

self.n_output),

)

self.sig=nn.Sigmoid()

def forward(self, x):

debug('raw',x)

varSize=x.data.shape[0] # must be calculated here in forward() since its is a dynamic size

x=self.l1(x)

debug('after lin',x)

# for CNN

x = x.view(varSize,self.n_feature,self.n_mult_factor)

debug('after view',x)

x=self.c1(x)

debug('after CNN',x)

# for Linear layer

x = x.view(varSize, self.n_hidden * (self.n_mult_factor -self.n_cnn_kernel + 3))

debug('after 2nd view',x)

# x=self.l2(x)

x=self.out(x)

debug('after self.out',x)

x=self.sig(x)

return x

net = Net2(n_feature=N_FEATURES, n_hidden=N_HIDDEN, n_output=1, n_cnn_kernel=N_CNN_KERNEL)

if use_cuda:

net=net.cuda()

lgr.info(net)

b = net(X_tensor_train)

print ('(b.size():' + str (b.size()))
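One way to drop `l1` entirely, sketched under the assumption that the features are fed as one input channel of length 21, i.e. reshaped to (N, 1, 21). `ConvOnlyNet` and `n_channels` are hypothetical names; the dropout, activation and batch-norm settings are copied from `c1`, and the random input stands in for `X_tensor_train`.

```python
import torch
import torch.nn as nn

class ConvOnlyNet(nn.Module):
    """Hypothetical variant of Net2 without the first Linear layer.

    Assumes input of shape (N, n_feature), treated as one channel of length n_feature.
    """
    def __init__(self, n_feature, n_channels, n_output, n_cnn_kernel):
        super().__init__()
        self.c1 = nn.Sequential(
            # padding = k // 2 keeps the length unchanged for an odd kernel size
            nn.Conv1d(1, n_channels, kernel_size=n_cnn_kernel, stride=1, padding=n_cnn_kernel // 2),
            nn.Dropout(p=0.25),
            nn.LeakyReLU(0.1),
            nn.BatchNorm1d(n_channels),
        )
        self.out = nn.Linear(n_channels * n_feature, n_output)
        self.sig = nn.Sigmoid()

    def forward(self, x):
        x = x.unsqueeze(1)         # (N, n_feature) -> (N, 1, n_feature)
        x = self.c1(x)             # (N, n_channels, n_feature)
        x = x.view(x.size(0), -1)  # flatten to (N, n_channels * n_feature)
        return self.sig(self.out(x))

net = ConvOnlyNet(n_feature=21, n_channels=126, n_output=1, n_cnn_kernel=3)
b = net(torch.randn(8, 21))
print(b.size())                    # torch.Size([8, 1])
```

Because the kernel size is odd and padding is kernel_size // 2, the convolution keeps the length at n_feature, so the final Linear layer takes n_channels * n_feature inputs (126 * 21 = 2646 here) instead of 756.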

Output:

```
(108405, 21)
<type 'numpy.ndarray'>
<class 'torch.cuda.FloatTensor'>
torch.Size([108405, 21])
raw, (size():torch.Size([108405, 21])
after lin, (size():torch.Size([108405, 126])
after view, (size():torch.Size([108405, 21, 6])
after CNN, (size():torch.Size([108405, 126, 6])
after 2nd view, (size():torch.Size([108405, 756])
after self.out, (size():torch.Size([108405, 1])
(b.size():torch.Size([108405, 1])
```

Many thanks,