Hi all, I’m using the nll_loss function in conjunction with log_softmax, as advised in the documentation, when training a CNN. However, when I test new images, I get negative numbers rather than values bounded between 0 and 1. This seems really strange given the bounded nature of the softmax function. Has anyone encountered this problem, or can anyone see where I’m going wrong?

import torch

from torch.autograd import Variable

import torch.nn as nn

import torch.nn.functional as F

import torch.optim as optim

import torchvision.datasets as dset

import torchvision.transforms as transforms

import torchvision

import matplotlib.pyplot as plt

import numpy as np

transform = transforms.Compose(

[transforms.Resize((32,32)),

transforms.ToTensor(),

])

trainset = dset.ImageFolder(root="Image_data",transform=transform)

train_loader = torch.utils.data.DataLoader(trainset, batch_size=10,shuffle=True)

testset = dset.ImageFolder(root='tests',transform=transform)

test_loader = torch.utils.data.DataLoader(testset, batch_size=10,shuffle=True)

classes=('Cats','Dogs')

def imshow(img):

    npimg = img.numpy()

    plt.imshow(np.transpose(npimg, (1, 2, 0)))

dataiter = iter(train_loader)

images, labels = next(dataiter)

imshow(torchvision.utils.make_grid(images))

plt.show()

dataiter = iter(test_loader)

images, labels = next(dataiter)

imshow(torchvision.utils.make_grid(images))

plt.show()

class Net(nn.Module):

    def __init__(self):

        super(Net, self).__init__()

        self.conv1 = nn.Conv2d(3, 32, 5, padding=2) # 3 input channels, 32 out, filter size = 5x5, 2 pixels of padding

        self.conv2 = nn.Conv2d(32, 64, 5, padding=2) # 32 input channels, 64 out, filter size = 5x5, 2 pixels of padding

        self.fc1 = nn.Linear(64*8*8, 1024) # Fully connected layer

        self.fc2 = nn.Linear(1024, 2) # Fully connected layer, 2 outputs

    def forward(self, x):

        x = F.max_pool2d(F.relu(self.conv1(x)), 2) # Max pool over convolution with 2x2 pooling

        x = F.max_pool2d(F.relu(self.conv2(x)), 2) # Max pool over convolution with 2x2 pooling

        x = x.view(-1, 64*8*8) # tensor.view() reshapes the tensor

        x = F.relu(self.fc1(x)) # Activation function after passing through fully connected layer

        x = F.dropout(x, training=self.training) # Dropout regularisation, active only in training mode

        x = self.fc2(x) # Pass through final fully connected layer

        return F.log_softmax(x, dim=1) # Log-probabilities (each <= 0), as expected by nll_loss

model = Net()

print(model)

optimizer = optim.Adam(model.parameters(), lr=0.0001)

model.train()

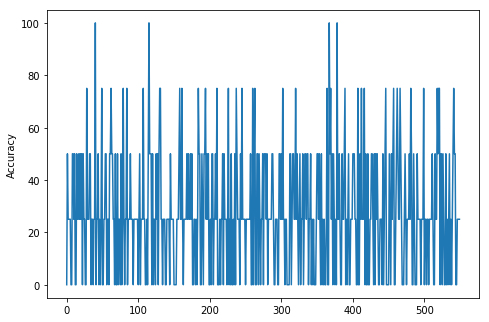

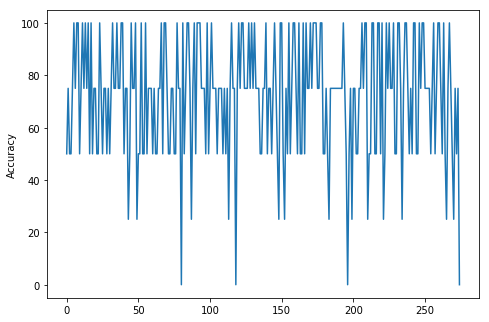

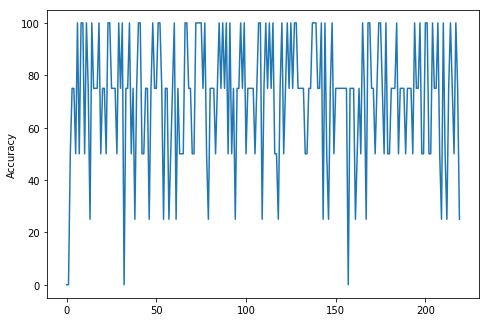

train_loss = []

train_accu = []

i = 0

batch_size = 10

for epoch in range(10):

    for data, target in train_loader:

        data, target = Variable(data), Variable(target)

        optimizer.zero_grad()

        output = model(data)

        loss = F.nll_loss(output, target) # Negative log likelihood (goes with log_softmax).

        loss.backward() # calc gradients

        train_loss.append(loss.item()) # Record the loss as a Python float

        optimizer.step() # update weights

        prediction = output.data.max(1)[1] # index of the largest log-probability = predicted class

        accuracy = prediction.eq(target.data).sum().item() / batch_size * 100

        train_accu.append(accuracy)

        if i % 10 == 0:

            print('Epoch:', str(epoch), 'Train Step: {}\tLoss: {:.3f}\tAccuracy: {:.3f}'.format(i, loss.item(), accuracy))

        i += 1

from PIL import Image

loader = transform

def image_loader(image_name):

    image = Image.open(image_name)

    image = loader(image).float()

    image = Variable(image, requires_grad=True)

    image = image.unsqueeze(0) # add a batch dimension: (3, 32, 32) -> (1, 3, 32, 32)

    return image

image = image_loader('cat_test.jpg')

image2 = image_loader('dog_test.jpg')

prediction = model(image)

print(prediction)

prediction = model(image2)

print(prediction)

Many thanks in advance!
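A minimal sketch of what may be going on, assuming the negative numbers are simply the log-probabilities that `log_softmax` returns. The logits below are made-up values for illustration, not output from the network above. Taking `torch.exp` of the log-probabilities should recover values between 0 and 1 that sum to 1 per row.

```python
import torch
import torch.nn.functional as F

# Hypothetical raw scores (logits) for one image and two classes.
logits = torch.tensor([[2.0, -1.0]])

# log_softmax returns log-probabilities, which are always <= 0.
log_probs = F.log_softmax(logits, dim=1)

# Exponentiating recovers ordinary probabilities in [0, 1].
probs = torch.exp(log_probs)

print(log_probs)
print(probs)
print(probs.sum(dim=1))
```

If that is the cause, the model can keep returning `log_softmax` for `nll_loss` during training, and `torch.exp` can be applied only when probabilities are wanted for display.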

But I think I get what you’re saying: your output will be, say, 10 by 2 if you have 10 training images in a batch, but when you test a single image it will come out as 1 by 2?
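A rough sketch of the shapes being discussed, using a hypothetical stand-in model (a single linear layer, not the CNN from the post) and random tensors in place of real images. It assumes 3x32x32 inputs and 2 classes, as in the original code.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

# Hypothetical stand-in for the CNN: 3x32x32 input, 2 output classes.
model = nn.Sequential(nn.Flatten(), nn.Linear(3 * 32 * 32, 2))
model.eval()  # switch off training-only behaviour such as dropout

batch = torch.rand(10, 3, 32, 32)            # ten random "images"
single = torch.rand(3, 32, 32).unsqueeze(0)  # one image plus a batch dimension

with torch.no_grad():  # no gradients needed for inference
    batch_out = F.log_softmax(model(batch), dim=1)
    single_out = F.log_softmax(model(single), dim=1)

print(batch_out.shape)   # torch.Size([10, 2])
print(single_out.shape)  # torch.Size([1, 2])

# Index of the largest probability is the predicted class (0 or 1).
predicted_class = single_out.exp().argmax(dim=1).item()
print(predicted_class)
```

Each row holds one image's log-probabilities, so a single test image gives one row with two entries.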
