I am working on semantic segmentation of electric motors. My masks contain 7 classes (including background) encoded as RGB colors.

I use the following code, which is based on this post:

    import torch
    import numpy as np
    import cv2
    import matplotlib.pyplot as plt

    h = 256
    w = 256

    # Loading mask
    mask_img = cv2.imread('input/labels/1.png')
    mask_img = cv2.cvtColor(mask_img, cv2.COLOR_BGR2RGB)

    # Color codes
    colors = [(255, 0, 0),
              (0, 0, 255),
              (0, 255, 0),
              (255, 0, 255),
              (0, 255, 255),
              (255, 255, 255),
              (0, 0, 0)]

    mapping = {tuple(c): t for c, t in zip(colors, range(len(colors)))}

    mask = torch.empty(h, w, dtype=torch.long)

    target = mask_img
    target = torch.from_numpy(target)

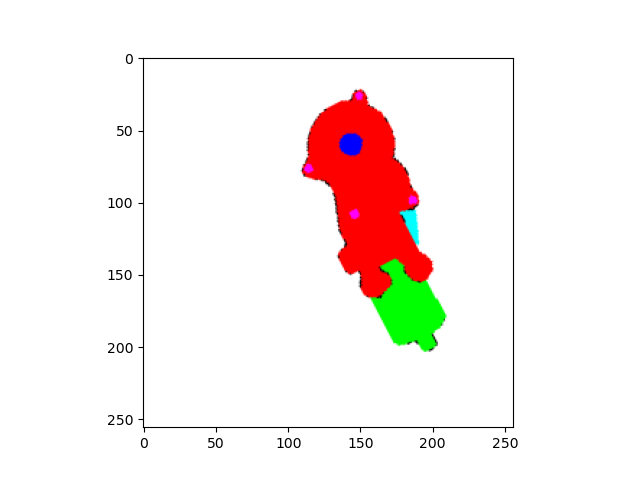

    plt.imshow(target)
    plt.pause(1)

    target = target.permute(2, 0, 1)

    for k in mapping:
        idx = (target == torch.tensor(k, dtype=torch.uint8).unsqueeze(1).unsqueeze(2))
        validx = (idx.sum(0) == 3)
        mask[validx] = torch.tensor(mapping[k], dtype=torch.long)

    print('unique values mapped ', torch.unique(mask))

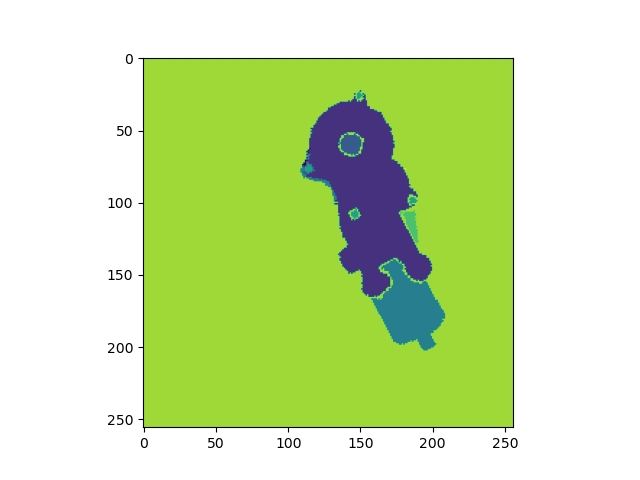

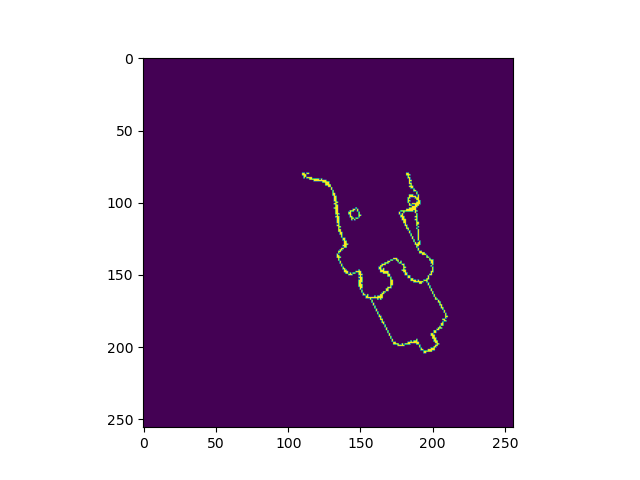

    plt.imshow(mask)
    plt.pause(0.1)

When I run the code, the output should look like this:

>>>unique values mapped tensor([0, 1, 2, 3, 4, 5, 6])

But often the mapping is messed up. For example:

>>>unique values mapped tensor([0, 1, 2, 3, 4, 5, 6, 4575657222473777152])

This example is at least close to the desired result: only one extra class index was added. But I also get completely wrong results with hundreds of class indices.

This happens without me changing the code or the input image. I do nothing but execute the same code again. Can someone explain to me why this happens? Where do the additional classes come from?
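One possible explanation, offered as a sketch and not a confirmed diagnosis: `torch.empty` allocates memory without initializing it, so any pixel whose color matches none of the seven listed colors is never written by the loop and keeps whatever bytes were already in that memory, which can differ from run to run. The variant below fills the mask with a known value first. The helper `rgb_to_class_mask`, the sentinel `UNMAPPED`, and the tiny synthetic image are hypothetical and only for illustration; the color table is the one from the code above.

```python
import torch

# Color table from the question; the position in the list is the class index.
COLORS = [(255, 0, 0), (0, 0, 255), (0, 255, 0), (255, 0, 255),
          (0, 255, 255), (255, 255, 255), (0, 0, 0)]
UNMAPPED = 255  # hypothetical sentinel for pixels that match no listed color


def rgb_to_class_mask(rgb: torch.Tensor) -> torch.Tensor:
    """Map an (H, W, 3) uint8 RGB image to an (H, W) long class-index mask."""
    h, w, _ = rgb.shape
    # torch.full writes every element, whereas torch.empty leaves them uninitialized.
    mask = torch.full((h, w), UNMAPPED, dtype=torch.long)
    for class_idx, color in enumerate(COLORS):
        color_t = torch.tensor(color, dtype=rgb.dtype)
        match = (rgb == color_t).all(dim=-1)  # True where all three channels agree
        mask[match] = class_idx
    return mask


# Tiny synthetic image: three listed colors plus one off-palette pixel.
demo = torch.tensor([[[255, 0, 0], [0, 0, 255]],
                     [[0, 0, 0], [254, 1, 0]]], dtype=torch.uint8)
demo_mask = rgb_to_class_mask(demo)
print(demo_mask)
print("unmapped pixels:", int((demo_mask == UNMAPPED).sum()))
```

If the unmapped count is nonzero for a real mask image, the file contains colors outside the table (for example from anti-aliased or resized edges), and those are the pixels that would have shown up as arbitrary class indices with `torch.empty`.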