Hi everyone!

I have been seeing odd memory consumption while building a GStreamer element that combines PyTorch and CUDA.

First, I built an element that takes NVMM buffers and runs some CUDA computation on the incoming buffers in place. It worked like a charm: I was even able to run up to six parallel pipelines using the element, with no issue other than the expected GPU usage. However, I wanted to add object detection to the pipeline, so I tried to integrate PyTorch into the element. As soon as I link the Torch library into the element, memory consumption increases hugely: each pipeline consumes ~1.2 GB (it was less than 100 MB before).

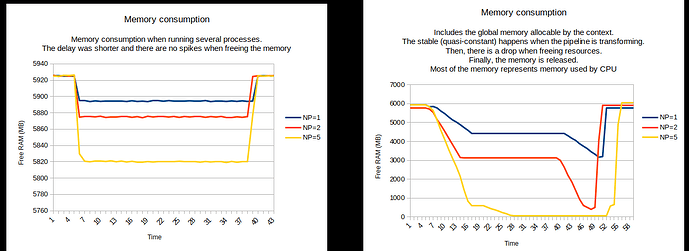

The memory consumption pattern is like follows (NP=Number of Processes):

Without Torch (left) and with Torch linked-in (right):

In the last part, where there is a spike in memory consumption, it happens when releasing the resources.
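
For anyone who wants to record a similar trace, here is a minimal sketch that polls the resident set size of a running pipeline. The post does not say how the memory was measured, so this assumes a Linux `/proc` filesystem; the helper names `parse_vmrss_kib` and `sample_rss_mib` are made up for illustration. RSS may not account for every driver-side allocation, so it is worth cross-checking against `tegrastats` on the Jetson.

```python
import re
import time
from pathlib import Path


def parse_vmrss_kib(status_text):
    """Return the VmRSS value (KiB) found in /proc/<pid>/status text, or None."""
    match = re.search(r"^VmRSS:\s+(\d+)\s+kB", status_text, re.MULTILINE)
    return int(match.group(1)) if match else None


def sample_rss_mib(pid, samples=10, interval_s=0.5):
    """Poll the resident set size of `pid` and return the readings in MiB."""
    readings = []
    for _ in range(samples):
        # Assumption: the process is still alive while we poll it.
        text = Path(f"/proc/{pid}/status").read_text()
        rss_kib = parse_vmrss_kib(text)
        if rss_kib is not None:
            readings.append(rss_kib / 1024)
        time.sleep(interval_s)
    return readings
```

Calling `sample_rss_mib(pid)` with the PID of a `gst-launch-1.0` process gives a list of readings that can be plotted per pipeline.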

So far, the experiment consists only of adding Torch as a dependency in the build system. I am not adding any code (not even headers).
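
Since the only change is at link time, it can help to confirm which Torch and CUDA shared objects actually end up mapped into the running process. The sketch below filters the text of `/proc/<pid>/maps` by file name; it assumes Linux, and the function name `mapped_libraries` and the default keywords are illustrative choices, not part of the toy code.

```python
from pathlib import Path


def mapped_libraries(maps_text, keywords=("torch", "cuda")):
    """Return sorted file names of mapped objects whose name contains a keyword."""
    libs = set()
    for line in maps_text.splitlines():
        # Fields: address perms offset dev inode pathname
        fields = line.split(maxsplit=5)
        if len(fields) < 6:
            continue  # anonymous mapping without a pathname
        name = Path(fields[5]).name
        if any(keyword in name.lower() for keyword in keywords):
            libs.add(name)
    return sorted(libs)


def libraries_of(pid):
    """Read /proc/<pid>/maps and list the matching libraries."""
    return mapped_libraries(Path(f"/proc/{pid}/maps").read_text())
```

Running `libraries_of(pid)` against a pipeline with and without torchpassthru shows whether the Torch libraries are loaded in each case.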

To reproduce the issue, I have prepared a toy example with two pass-through elements (torchpassthru and cudapassthru): one that only has Torch linked in, and another that uses initialisation/termination code similar to the CUDA code we are using.

The issue can be observed by comparing two pipelines. The first one places cudapassthru before torchpassthru:

gst-launch-1.0 videotestsrc ! "video/x-raw,width=640,height=480" ! cudapassthru ! torchpassthru ! fakesink silent=false -vvv

Here, the memory consumption only shows a spike when closing the pipeline. The second one places torchpassthru before cudapassthru:

gst-launch-1.0 videotestsrc ! "video/x-raw,width=640,height=480" ! torchpassthru ! cudapassthru ! fakesink silent=false -vvv

Here, the memory consumption increases from tens of MB to about 1 GB. There is also a spike when closing the pipeline.

The memory scales linearly with the number of cudapassthru elements: with one, the consumption increases by about 1 GB; with two, it increases to about 2 GB; and so on. This does not happen when the pipeline has only cudapassthru elements, in which case the memory stays in the order of tens of MB. The issue does not occur with the Torch element alone either.
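
To check the linear scaling systematically, the pipelines can be generated for a varying number of cudapassthru elements. This is a minimal sketch that only builds the `gst-launch-1.0` argument list from the element names and caps used in this post; the function name `build_command` and its parameters are hypothetical.

```python
CAPS = "video/x-raw,width=640,height=480"


def build_command(num_cuda, with_torch=True):
    """Build a gst-launch-1.0 argv with `num_cuda` cudapassthru elements."""
    stages = [["videotestsrc"], [CAPS]]
    if with_torch:
        # torchpassthru goes first, matching the pipeline that shows the growth.
        stages.append(["torchpassthru"])
    stages += [["cudapassthru"]] * num_cuda
    stages.append(["fakesink", "silent=false"])

    argv = ["gst-launch-1.0"]
    for index, stage in enumerate(stages):
        if index:
            argv.append("!")  # link separator between elements
        argv += stage
    argv.append("-vvv")
    return argv
```

Passing the result to `subprocess.Popen(build_command(n))` for n = 1, 2, 3 and recording the memory of each process gives one data point per element count. Because the list is passed without a shell, the caps string needs no extra quoting.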

I also want to mention that this behaviour does not occur when the pipelines run in separate processes. For example:

# Terminal 1

gst-launch-1.0 videotestsrc ! "video/x-raw,width=640,height=480" ! torchpassthru ! fakesink silent=false -vvv

# Terminal 2

gst-launch-1.0 videotestsrc ! "video/x-raw,width=640,height=480" ! cudapassthru ! cudapassthru ! cudapassthru ! fakesink silent=false -vvv

I suspect that it can be something related to CUDA contexts. If so:

- is there a way to make PyTorch and CUDA share the context in some way?

In the beginning, I thought it was a PyTorch issue. However, looking at how the memory scales when I add more elements, I started to suspect that something else is going on.

- any clue about why this behaviour can happen in CUDA?

I am using PyTorch 1.8 (from NVIDIA) on a Jetson TX2 with JetPack 4.5.1. The GStreamer version is the default one (1.14.x).

Thanks in advance for any clue you can provide about this issue.

This is the link to the toy code: download