Hello,

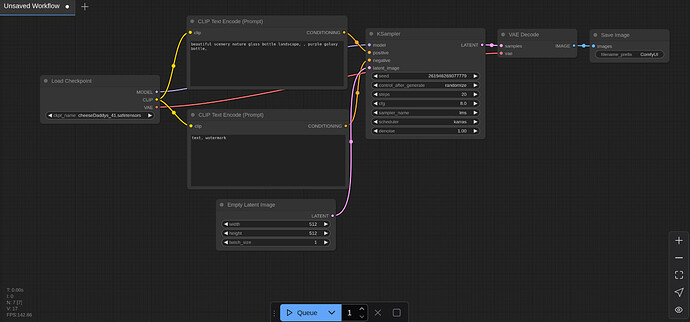

I’m trying to run ComfyUI on my RX 7900 XT. I installed ROCm and the nightly build of PyTorch in a Fedora distrobox, following the installation guide on the official PyTorch website. I also tried the pre-built Ubuntu + ROCm + PyTorch Docker image from AMD’s site (PyTorch on ROCm — ROCm installation (Linux)). In both cases ComfyUI starts up normally. As soon as I queue a prompt, though, it prints the warning “Attempting to use hipBLASLt on an unsupported architecture!” and then aborts with HSA_STATUS_ERROR_OUT_OF_REGISTERS (full output below).

From what I’ve read, this issue on gfx1100 cards was supposed to be fixed in PyTorch 2.5.1, around October 2024. To check whether ROCm was causing the problem, I updated the AMD pre-built container from ROCm 6.2.4 to ROCm 6.3, but nothing changed. I don’t know what my next troubleshooting step should be, especially since the container that AMD assembled and tested does not work either.

Please Help.

Host system:

- Operating System: Debian GNU/Linux 12
- KDE Plasma Version: 6.2.5
- KDE Frameworks Version: 6.10.0
- Qt Version: 6.7.2
- Kernel Version: 6.12.9-amd64 (64-bit)
- Graphics Platform: Wayland
- Processors: 24 × AMD Ryzen 9 7900X 12-Core Processor
- Memory: 61.9 GiB of RAM
- Graphics Processor: AMD Radeon RX 7900 XT
- Manufacturer: ASUS
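If it helps, the details PyTorch itself sees inside the container (PyTorch version, HIP version and the GPU architecture name, which for an RX 7900 XT should be gfx1100) could be collected with a short script like the one below. This is only a minimal sketch: it assumes a ROCm build of PyTorch, where `torch.version.hip` is set and the device properties expose `gcnArchName`. The function name `collect_rocm_info` is hypothetical, and the script degrades gracefully when PyTorch or a GPU is not available.

```python
import importlib.util
import platform


def collect_rocm_info():
    """Hypothetical helper: gather what PyTorch reports about the ROCm setup."""
    info = {"python": platform.python_version()}

    # Bail out early if PyTorch is not installed in this environment.
    if importlib.util.find_spec("torch") is None:
        info["torch"] = None
        return info

    import torch

    info["torch"] = torch.__version__
    # torch.version.hip is a version string on ROCm builds and None otherwise.
    info["hip"] = getattr(torch.version, "hip", None)
    # On ROCm builds the GPU is exposed through the torch.cuda API.
    info["gpu_available"] = torch.cuda.is_available()
    if info["gpu_available"]:
        props = torch.cuda.get_device_properties(0)
        info["device_name"] = props.name
        # gcnArchName is only present on ROCm builds, so read it defensively.
        info["gcn_arch"] = getattr(props, "gcnArchName", None)
    return info


if __name__ == "__main__":
    for key, value in collect_rocm_info().items():
        print(f"{key}: {value}")
```

Running it with the same Python interpreter that launches ComfyUI makes sure the report describes the same PyTorch install that crashes.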

ComfyUI output on the AMD pre-built container:

```
Total VRAM 20464 MB, total RAM 63432 MB
pytorch version: 2.6.0.dev20241122+rocm6.2
Set vram state to: NORMAL_VRAM
Device: cuda:0 Radeon RX 7900 XT : native
Using sub quadratic optimization for attention, if you have memory or speed issues try using: --use-split-cross-attention
[Prompt Server] web root: /home/sersys/pyproj/ComfyUI-0.3.12/web
Import times for custom nodes:
0.0 seconds: /home/sersys/pyproj/ComfyUI-0.3.12/custom_nodes/websocket_image_save.py
Starting server
To see the GUI go to: http://127.0.0.1:8188
got prompt
model weight dtype torch.float16, manual cast: None
model_type EPS
Using split attention in VAE
Using split attention in VAE
VAE load device: cuda:0, offload device: cpu, dtype: torch.float32
CLIP/text encoder model load device: cuda:0, offload device: cpu, current: cpu, dtype: torch.float16
Requested to load SDXLClipModel
loaded completely 9.5367431640625e+25 1560.802734375 True
/home/sersys/pyproj/ComfyUI-0.3.12/comfy/ops.py:64: UserWarning: Attempting to use hipBLASLt on an unsupported architecture! Overriding blas backend to hipblas (Triggered internally at /pytorch/aten/src/ATen/Context.cpp:296.)
  return torch.nn.functional.linear(input, weight, bias)
Requested to load SDXL
loaded completely 9.5367431640625e+25 4897.0483474731445 True
0%| | 0/20 [00:00<?, ?it/s]:0:rocdevice.cpp :2984: 93862815501 us: [pid:318562 tid:0x7f122e5ff640] Callback: Queue 0x7f0ed8000000 aborting with error : HSA_STATUS_ERROR_OUT_OF_REGISTERS: Kernel has requested more VGPRs than are available on this agent code: 0x2d
Aborted (core dumped)
```