Hi everyone!

I am trying to replicate the examples shown in this paper, but I have some doubts about whether my code is correct.

The basic idea is that we want to model some function for which we have high-fidelity values at a few coordinates and a larger number of low-fidelity points.

To do so, the authors use a composite neural network structured as follows:

- A low-fidelity neural network NN_L that takes the input and outputs the low-fidelity prediction. This is a non-linear neural network with Tanh as the activation function.

- A linear high-fidelity neural network NN_H1 that takes as inputs the original input and the associated low-fidelity prediction and outputs F_L. This should be a linear neural network with no activation function, so I’m using torch.nn.Identity as the activation.

- A non-linear high-fidelity neural network NN_H2 that takes as inputs the original input and the associated low-fidelity prediction and outputs F_NL. This neural network uses a Tanh activation function.

The sum F_L + F_NL is the high-fidelity prediction.
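
In formula form, this is how I understand the structure described above (y_L and y_H are my own shorthand for the low- and high-fidelity predictions, not necessarily the paper's notation):

```latex
\begin{aligned}
y_L    &= \mathrm{NN}_L(x), \\
F_L    &= \mathrm{NN}_{H1}(x, y_L), \\
F_{NL} &= \mathrm{NN}_{H2}(x, y_L), \\
y_H    &= F_L + F_{NL}.
\end{aligned}
```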

Here is the problem: in the paper, NN_H1 has no hidden layers, which makes sense to me since it is meant to be linear. The authors also use L-BFGS while I’m using Adam, but I don’t think that is the cause, as I get similar results with L-BFGS too.
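
For what it’s worth, a stack of linear layers with Identity in between should still be a single affine map, so hidden layers in NN_H1 should not add expressive power (though they may change how the optimization behaves). Here is a minimal sketch to check this. The layer sizes are chosen only for illustration, and I use double precision to keep rounding error small:

```python
import torch

torch.manual_seed(0)

# Stack of Linear layers with Identity "activations", like NN_H1 with hidden layers
stacked = torch.nn.Sequential(
    torch.nn.Linear(2, 20), torch.nn.Identity(),
    torch.nn.Linear(20, 20), torch.nn.Identity(),
    torch.nn.Linear(20, 1),
).double()

with torch.no_grad():
    # Recover the equivalent single affine map y = x @ W.T + b
    b = stacked(torch.zeros(1, 2, dtype=torch.float64))      # output at x = 0 is the bias
    W = (stacked(torch.eye(2, dtype=torch.float64)) - b).T   # one column per input feature

    # Compare the stacked network against the single affine map on random inputs
    x = torch.randn(100, 2, dtype=torch.float64)
    max_diff = (stacked(x) - (x @ W.T + b)).abs().max().item()

print(max_diff)  # should be at the level of floating-point rounding error
```

If that holds, the gap between my two models would come from training dynamics or from something else in my setup, not from the linear part being able to represent more.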

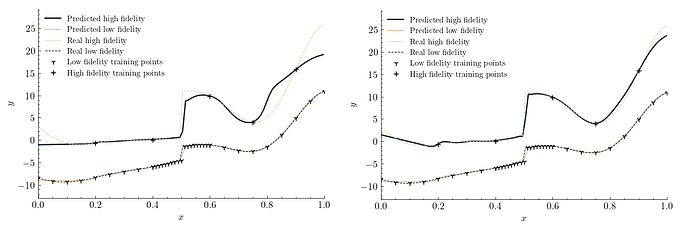

If I use no hidden layers in NN_H1, I can never seem to get an accurate prediction, regardless of how long I train or which learning rate/weight decay I use; see the figure below:

If I add 2 hidden layers with 20 neurons, I get a much better result:

The question is: am I creating the neural network correctly? Am I misunderstanding something? Maybe the problem lies elsewhere? I am creating the networks as follows:

    import torch


    class BasicBlock(torch.nn.Module):
        """
        Single block for a fully connected layer: a linear layer followed by an activation function.
        """

        def __init__(self, input_features: int, output_features: int, activation: type[torch.nn.Module]):
            super().__init__()
            self.linear_layer = torch.nn.Linear(input_features, output_features)
            self.activation = activation()

        def forward(self, x: torch.Tensor) -> torch.Tensor:
            x_a = self.linear_layer(x)
            out = self.activation(x_a)
            return out


    class FCNN(torch.nn.Module):
        """
        Fully connected neural network template. Creates a fully connected NN with the
        specified layer widths and activation function.
        """

        def __init__(self, in_features: int, out_features: int, mid_features: list[int],
                     activation: type[torch.nn.Module]):
            super().__init__()
            layer_sizes = [in_features] + mid_features

            # Create the hidden layers: one BasicBlock (1 linear + 1 activation) per entry
            # in mid_features. With mid_features == [] this is an empty Sequential,
            # which passes its input through unchanged.
            self.layers = torch.nn.Sequential(*[
                BasicBlock(layer_sizes[i], layer_sizes[i + 1], activation)
                for i in range(len(mid_features))
            ])

            # Final linear output layer (no activation)
            self.fc = torch.nn.Linear(layer_sizes[-1], out_features)

        def forward(self, x: torch.Tensor) -> torch.Tensor:
            x_a = self.layers(x)
            out = self.fc(x_a)
            return out


    class MFNN(torch.nn.Module):
        """
        A multi-fidelity neural network. It holds three sub-networks: fc_low is the
        low-fidelity part of the model; fc_high1 (linear) and fc_high2 (non-linear) are the
        high-fidelity parts. Both high-fidelity networks take two inputs (the x coordinate
        and the corresponding low-fidelity prediction).

        in_features: number of features given as input
        out_features: number of features computed by the COMPLETE model
        lf_layers: a list of the layer widths of the low-fidelity layers
        hf1_layers: a list of the layer widths of the NN_H1 layers
        hf2_layers: a list of the layer widths of the NN_H2 layers
        lf_activation: activation class for the low-fidelity network
        hf_activation: activation class for NN_H2 (NN_H1 always uses Identity)
        """

        def __init__(self, in_features: int, out_features: int, lf_layers: list[int], hf1_layers: list[int],
                     hf2_layers: list[int], lf_activation: type[torch.nn.Module],
                     hf_activation: type[torch.nn.Module]):
            super().__init__()

            # The high-fidelity networks receive x concatenated with the low-fidelity
            # prediction (this was hard-coded as 2, which is only right for 1 input and 1 output)
            hf_in_features = in_features + out_features

            # Create the three networks: NN_L, NN_H1 (linear) and NN_H2 (non-linear)
            self.fc_low = FCNN(in_features, out_features, lf_layers, lf_activation)
            self.fc_high1 = FCNN(hf_in_features, out_features, hf1_layers, activation=torch.nn.Identity)
            self.fc_high2 = FCNN(hf_in_features, out_features, hf2_layers, hf_activation)

        def forward(self, x: torch.Tensor) -> torch.Tensor:
            low_features = self.fc_low(x)
            in_h = torch.cat([x, low_features], dim=1)
            y1 = self.fc_high1(in_h)  # F_L
            y2 = self.fc_high2(in_h)  # F_NL
            y = y1 + y2
            return y


    low_fidelity_layers = [20, 20]
    high_fidelity1_layers = [20, 20]  # Adds 2 hidden layers
    high_fidelity2_layers = [20, 20, 20, 20]

    # Create the model with 2 hidden layers in NN_H1
    mf_model = MFNN(1, 1, lf_layers=low_fidelity_layers,
                    hf1_layers=high_fidelity1_layers, hf2_layers=high_fidelity2_layers,
                    lf_activation=torch.nn.Tanh, hf_activation=torch.nn.Tanh)

    # Create the model with no hidden layers in NN_H1
    mf_model_nh = MFNN(1, 1, lf_layers=low_fidelity_layers,
                       hf1_layers=[], hf2_layers=high_fidelity2_layers,
                       lf_activation=torch.nn.Tanh, hf_activation=torch.nn.Tanh)

Thanks in advance!