Hello,

I have an Excel file that contains two columns, “input” and “label” (some example rows from the file are shown below). I want to implement a regression task, and I need to implement the lines of code below inside k-fold cross-validation:

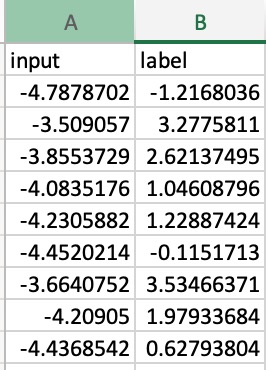

Some examples of the dataset:

with torch.no_grad():

for data in testloader:

images, labels = data

# calculate outputs by running images through the network

outputs = net(images)

# the class with the highest energy is what we choose as prediction

_, predicted = torch.max(outputs.detach(), 1)

total += labels.size(0)

correct += (predicted == labels).sum().item()

print(f'Accuracy of the network on the 10000 test images: {100 * correct // total} %')
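The k-fold part can be handled independently of the model: shuffle the row indices once, cut them into k folds, and use each fold once for evaluation while training on the remaining folds. Below is a minimal plain-Python sketch; `k_fold_indices` is a hypothetical helper, and if scikit-learn is available, `sklearn.model_selection.KFold(n_splits=k, shuffle=True, random_state=seed)` provides the same kind of split.

```python
import random
from typing import List, Tuple


def k_fold_indices(n_samples: int, k: int, seed: int = 0) -> List[Tuple[List[int], List[int]]]:
    """Hypothetical helper: return k (train_idx, val_idx) pairs.

    Every row index appears in exactly one validation fold.
    """
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and n_samples")
    indices = list(range(n_samples))
    # Shuffle once so folds are not ordered by position in the Excel file.
    random.Random(seed).shuffle(indices)
    # Spread the remainder over the first folds so sizes differ by at most one.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    folds = []
    start = 0
    for size in fold_sizes:
        val_idx = indices[start:start + size]
        train_idx = indices[:start] + indices[start + size:]
        folds.append((train_idx, val_idx))
        start += size
    return folds


if __name__ == "__main__":
    for fold, (train_idx, val_idx) in enumerate(k_fold_indices(10, k=3)):
        print(fold, len(train_idx), len(val_idx))
```

Each `val_idx` list can be wrapped with `torch.utils.data.Subset(dataset, val_idx)` and passed to a `DataLoader`, so that the evaluation loop above runs once per fold; the per-fold scores are then typically averaged.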

Since this is a regression problem rather than a classification problem, I think this line of the code is not correct:

```python
correct += (predicted == labels).sum().item()
```
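That line counts exact matches, which makes sense for class indices but almost never holds for continuous targets: a prediction of 2.9999 for a label of 3.0 counts as wrong. In addition, `torch.max(outputs, 1)` returns the index of the largest value along dimension 1, which for a network with a single regression output is always 0. If an accuracy-like number is still wanted, one common substitute is the fraction of predictions that fall within a chosen tolerance of the label. The sketch below assumes plain Python floats, and both `count_within_tolerance` and the tolerance value are hypothetical choices you would adapt to your data.

```python
from typing import Sequence


def count_within_tolerance(predictions: Sequence[float], labels: Sequence[float], tol: float) -> int:
    """Hypothetical helper: count predictions whose absolute error is at most `tol`."""
    if len(predictions) != len(labels):
        raise ValueError("predictions and labels must have the same length")
    return sum(1 for p, y in zip(predictions, labels) if abs(p - y) <= tol)


preds = [2.9999, 5.2, 7.0]
labels = [3.0, 5.0, 9.0]
# What the original equality test measures: exact matches only.
exact = sum(1 for p, y in zip(preds, labels) if p == y)
print(exact, count_within_tolerance(preds, labels, tol=0.25))  # prints: 0 2
```

With tensors, the same count can be written as `((outputs.squeeze(1) - labels).abs() <= tol).sum().item()`, assuming `outputs` has shape `(batch, 1)` and `labels` is a float tensor of shape `(batch,)`.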

Could you please let me know how I can change the code to get an accuracy measure in this scenario?
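For a regression task, the usual replacement for accuracy is an error metric such as mean squared error (MSE), mean absolute error (MAE), or the coefficient of determination (R²). Below is a minimal sketch of accumulating them batch by batch, mirroring the `correct`/`total` counters of the original loop. It uses plain Python lists in place of tensors so that it runs without PyTorch; `RegressionMetrics` is a hypothetical class, not part of any library.

```python
import math
from typing import Dict, Sequence


class RegressionMetrics:
    """Hypothetical accumulator: plays the role of `correct`/`total` for regression."""

    def __init__(self) -> None:
        self.n = 0
        self.sum_sq_err = 0.0
        self.sum_abs_err = 0.0
        self.mean_y = 0.0
        self.m2_y = 0.0  # running sum of squared deviations of the labels

    def update(self, preds: Sequence[float], labels: Sequence[float]) -> None:
        if len(preds) != len(labels):
            raise ValueError("preds and labels must have the same length")
        for p, y in zip(preds, labels):
            err = p - y
            self.sum_sq_err += err * err
            self.sum_abs_err += abs(err)
            # Welford's online update, needed for the R^2 denominator.
            self.n += 1
            delta = y - self.mean_y
            self.mean_y += delta / self.n
            self.m2_y += delta * (y - self.mean_y)

    def compute(self) -> Dict[str, float]:
        if self.n == 0:
            raise ValueError("no samples were added")
        mse = self.sum_sq_err / self.n
        r2 = 1.0 - self.sum_sq_err / self.m2_y if self.m2_y > 0 else float("nan")
        return {"mse": mse, "rmse": math.sqrt(mse), "mae": self.sum_abs_err / self.n, "r2": r2}


if __name__ == "__main__":
    # Stand-ins for (outputs, labels) batches coming from `testloader`.
    batches = [([2.5, 0.0], [3.0, -0.5]), ([2.0, 8.0], [2.0, 7.0])]
    metrics = RegressionMetrics()
    for preds, labels in batches:
        metrics.update(preds, labels)
    print(metrics.compute())
```

Inside the original `with torch.no_grad():` loop, the two counter lines would become something like `metrics.update(outputs.squeeze(1).tolist(), labels.tolist())`, and the final `print` would report `metrics.compute()` instead of a percentage. Lower MSE/MAE and an R² closer to 1 indicate a better fit.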