Hello everyone. I am using a HuggingFace model, to which I pass a couple of sentences. Then I am getting the logits, and using PyTorch’s CrossEntropyLoss, to get the loss. The problem is as follows:

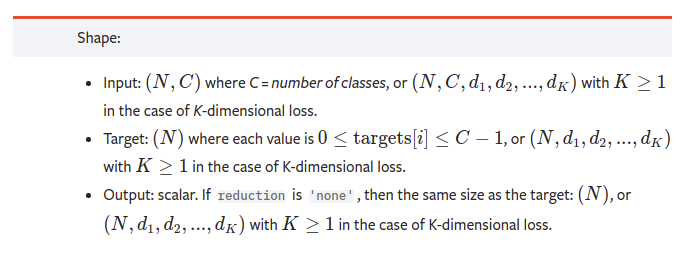

I want the loss of each sentence. If I have 3 sentences, each with 10 tokens, the logits have size [3, 10, V], where V is my vocab size. The labels have size [3, 10], basically the correct labels for each of the 10 tokens in each sentence.

How can I get the cross entropy of each sentence then? If I do reduction='mean', I am going to get the overall mean loss (1 number). If I use reduction='none', then I get one number for each token, so basically the loss of each single token. The code I am using is

loss_fct = nn.CrossEntropyLoss(reduction=‘mean’) # or ‘none’

masked_lm_loss = loss_fct(outputs.logits.cpu().detach().view(-1, V), target_ids.view(-1))

Perhaps I need to define the views differently in the second line?

Thanks in advance.