Hello,

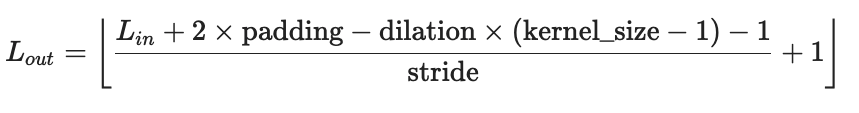

I am translating some Keras code into PyTorch, and I'm finding it hard to compute the input size for the first fully connected layer in my multi-layer CNN. I understand that PyTorch expects this size to be passed explicitly, and that the docs for nn.Conv1d and nn.MaxPool1d give formulas for each layer's output length. Would someone be willing to check that I've implemented these formulas correctly? Note that kernel_sizes is a list with one kernel size per nn.Conv1d layer. Thanks very much for any assistance.
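For reference, the output-length formula from the nn.Conv1d docs, which nn.MaxPool1d shares when ceil_mode is left at its default of False, can be written as:

$$
L_{\text{out}} = \left\lfloor \frac{L_{\text{in}} + 2 \cdot \text{padding} - \text{dilation} \cdot (\text{kernel\_size} - 1) - 1}{\text{stride}} + 1 \right\rfloor
$$

Here $L_{\text{in}}$ is the length of the last (sequence) axis of the input, not the number of channels. For nn.MaxPool1d, stride defaults to kernel_size when it is passed as None.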

import math

import torch
import torch.nn as nn
import torch.nn.functional as F


class DeepCNN(nn.Module):
    def __init__(
        self,
        n_classes,
        max_seq_len,
        embedding_matrix,
        kernel_sizes,
        conv_dropout_rates,
        n_fc_neurons_l0,
        n_fc_neurons_l1,
        fc_dropout_rate_l0,
        fc_dropout_rate_l1,
        pad_idx
    ):
        super(DeepCNN, self).__init__()
        layers, drops, pools = [], [], []
        embedding_dim = embedding_matrix.shape[1]

        # Embedding layer
        self.embedding = nn.Embedding.from_pretrained(
            embeddings=embedding_matrix,
            freeze=False,
            padding_idx=pad_idx,
            max_norm=None,
            norm_type=2,
            scale_grad_by_freq=False,
            sparse=False
        )

        # Conv layer 0
        layers.append(
            nn.Conv1d(
                in_channels=embedding_dim,
                out_channels=embedding_dim,
                kernel_size=kernel_sizes[0],
                stride=1,
                padding=0
            )
        )
        drops.append(
            nn.Dropout(p=conv_dropout_rates[0])
        )
        pools.append(
            nn.MaxPool1d(
                kernel_size=2,
                stride=None,
                padding=0
            )
        )

        # Conv layer 1
        layers.append(
            nn.Conv1d(
                in_channels=embedding_dim,
                out_channels=2 * embedding_dim,
                kernel_size=kernel_sizes[1],
                stride=1,
                padding=0
            )
        )
        drops.append(
            nn.Dropout(p=conv_dropout_rates[1])
        )
        pools.append(
            nn.MaxPool1d(
                kernel_size=2,
                stride=None,
                padding=0
            )
        )

        # Conv layer 2
        layers.append(
            nn.Conv1d(
                in_channels=2 * embedding_dim,
                out_channels=2 * embedding_dim,
                kernel_size=kernel_sizes[2],
                stride=1,
                padding=0
            )
        )
        drops.append(
            nn.Dropout(p=conv_dropout_rates[2])
        )
        pools.append(
            nn.MaxPool1d(
                kernel_size=2,
                stride=None,
                padding=0
            )
        )

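        # Register the conv, dropout and pooling layers. nn.ModuleList is needed
        # here: modules kept in a plain Python list are not registered, so their
        # parameters would be missing from model.parameters() and never trained.
        self.layers = nn.ModuleList(layers)
        self.drops = nn.ModuleList(drops)
        self.pools = nn.ModuleList(pools)

        # Set fc layers with dropout
        # fc0 input size = channels out of the last conv layer (2 * embedding_dim)
        # times the sequence length left after the last pooling layer
        self.fc0 = nn.Linear(self.num_feat(max_seq_len, kernel_sizes) * 2 * embedding_dim, n_fc_neurons_l0)
        self.drop0 = nn.Dropout(p=fc_dropout_rate_l0)
        self.fc1 = nn.Linear(n_fc_neurons_l0, n_fc_neurons_l1)
        self.drop1 = nn.Dropout(p=fc_dropout_rate_l1)
        self.output = nn.Linear(n_fc_neurons_l1, n_classes)

    def num_feat(self, seq_len, kernel_sizes):
        # Sequence length remaining after the three conv + pool blocks.
        # nn.Conv1d and nn.MaxPool1d slide along the last axis (max_seq_len);
        # embedding_dim is the channel axis and does not enter the formula.
        padding = 0
        conv_stride = 1
        dilation = 1
        max_pool_kernel_size = 2
        # nn.MaxPool1d(stride=None) uses stride = kernel_size
        pool_stride = max_pool_kernel_size

        # Each layer takes the previous layer's output length as its input length
        out_conv_0 = math.floor(((seq_len + 2 * padding - dilation * (kernel_sizes[0] - 1) - 1) / conv_stride) + 1)
        out_pool_0 = math.floor(((out_conv_0 + 2 * padding - dilation * (max_pool_kernel_size - 1) - 1) / pool_stride) + 1)
        out_conv_1 = math.floor(((out_pool_0 + 2 * padding - dilation * (kernel_sizes[1] - 1) - 1) / conv_stride) + 1)
        out_pool_1 = math.floor(((out_conv_1 + 2 * padding - dilation * (max_pool_kernel_size - 1) - 1) / pool_stride) + 1)
        out_conv_2 = math.floor(((out_pool_1 + 2 * padding - dilation * (kernel_sizes[2] - 1) - 1) / conv_stride) + 1)
        out_pool_2 = math.floor(((out_conv_2 + 2 * padding - dilation * (max_pool_kernel_size - 1) - 1) / pool_stride) + 1)
        return out_pool_2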

    def forward(self, input):
        # Get embeddings from input tokens
        # Output shape: (batch_size, max_seq_len, embedding_dim)
        x = self.embedding(input)

        # Permute embedding output to match input shape requirement of nn.Conv1d
        # Output shape: (batch_size, embedding_dim, max_seq_len)
        x = x.transpose(1, 2)

        # Run through CNN layers
        for layer, drop, pool in zip(self.layers, self.drops, self.pools):
            x = F.relu(layer(x))
            x = drop(x)
            x = pool(x)

        # Convert from (batch size, filters, window size) to (batch size, filters * window size)
        x = x.view(x.size(0), -1)

        # Trying to compute and pass this input shape in the num_feat function to the fc layers
        print(x.shape[1])

        # Run through fully connected layers
        x = F.relu(self.fc0(x))
        x = self.drop0(x)
        x = F.relu(self.fc1(x))
        x = self.drop1(x)
        logits = self.output(x)

        # Convert to class probabilities
        probs = torch.sigmoid(logits)
        return probs
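
As a way to sanity-check the number printed in forward, here is a minimal sketch of the same size calculation outside the model. It assumes the setup in the code above: three conv blocks with stride 1 and no padding, each followed by a pooling layer with kernel size 2 and the default stride. The helper names out_len and flattened_features, and the example values (sequence length 100, embedding size 50, kernel sizes 3, 4, 5), are hypothetical and only for illustration.

```python
import math


def out_len(length, kernel_size, stride=1, padding=0, dilation=1):
    """Output length along the sequence axis for nn.Conv1d / nn.MaxPool1d
    (formula from the PyTorch docs, ceil_mode=False)."""
    return math.floor(
        (length + 2 * padding - dilation * (kernel_size - 1) - 1) / stride + 1
    )


def flattened_features(max_seq_len, kernel_sizes, last_out_channels, pool_kernel_size=2):
    """Size of the flattened tensor that reaches the first nn.Linear."""
    length = max_seq_len
    for kernel_size in kernel_sizes:
        # Conv block: stride 1, no padding (as in the model above)
        length = out_len(length, kernel_size)
        # Pooling: stride=None in nn.MaxPool1d means stride = kernel size
        length = out_len(length, pool_kernel_size, stride=pool_kernel_size)
    # Flattening multiplies the remaining length by the channel count
    return last_out_channels * length


# Hypothetical values: max_seq_len=100, embedding_dim=50, so the last conv
# layer has 2 * 50 = 100 output channels
print(flattened_features(max_seq_len=100, kernel_sizes=[3, 4, 5], last_out_channels=2 * 50))
# → 900
```

With these values the sequence length shrinks 100 → 98 → 49 → 46 → 23 → 19 → 9, and 9 * 100 channels gives 900. If the result disagrees with x.shape[1] in forward, the mismatch is most likely in the starting length or the pooling stride. An alternative that avoids the arithmetic altogether is nn.LazyLinear, which infers in_features on the first forward pass.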