Hi,

I have four GPUs that all belong to one torch process group. GPU0 and GPU1 simultaneously send data to GPU2 and GPU3, respectively. However, only the communication between GPU0 and GPU2 succeeds; the communication between GPU1 and GPU3 always fails.

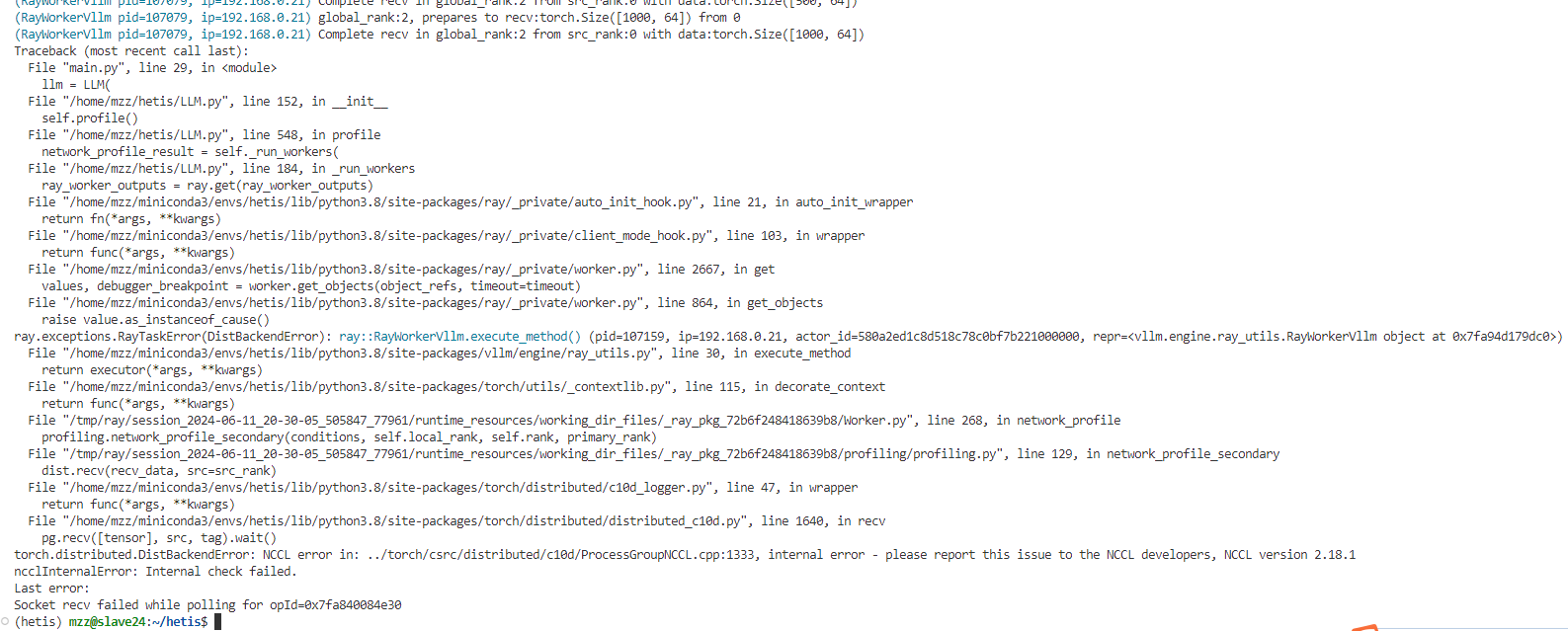
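For reference, here is a minimal sketch of the communication pattern. It is not the actual profiling code. It assumes one process per rank, a TCP rendezvous on localhost, and Linux (for the `fork` start method). `PAIRS`, `worker`, and `run` are hypothetical names. With `backend="gloo"` it runs on CPU only. With `backend="nccl"` on a machine with four GPUs, it approximates the 0→2 / 1→3 setup described above.

```python
import multiprocessing as mp
import socket

import torch
import torch.distributed as dist

WORLD_SIZE = 4
# Hypothetical sender -> receiver map mirroring the post: 0 -> 2 and 1 -> 3.
PAIRS = {0: 2, 1: 3}


def free_port() -> int:
    # Ask the OS for an unused TCP port to use for the rendezvous.
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as s:
        s.bind(("127.0.0.1", 0))
        return s.getsockname()[1]


def worker(rank, port, backend, queue):
    dist.init_process_group(
        backend=backend,
        init_method=f"tcp://127.0.0.1:{port}",
        rank=rank,
        world_size=WORLD_SIZE,
    )
    if backend == "nccl":
        # One GPU per rank, as in the post.
        torch.cuda.set_device(rank)
        device = torch.device("cuda", rank)
    else:
        device = torch.device("cpu")

    senders = {dst: src for src, dst in PAIRS.items()}
    if rank in PAIRS:
        # Ranks 0 and 1 each send to their own peer at the same time.
        payload = torch.full((4,), float(rank), device=device)
        dist.send(payload, dst=PAIRS[rank])
    else:
        # Ranks 2 and 3 each receive from their own sender.
        buf = torch.empty(4, device=device)
        dist.recv(buf, src=senders[rank])
        queue.put((rank, buf.cpu().tolist()))

    dist.barrier()
    dist.destroy_process_group()


def run(backend="gloo"):
    port = free_port()
    # "fork" keeps the sketch usable without a __main__ guard; the parent
    # never initializes CUDA, so the children can still do so themselves.
    ctx = mp.get_context("fork")
    queue = ctx.SimpleQueue()
    procs = [
        ctx.Process(target=worker, args=(rank, port, backend, queue))
        for rank in range(WORLD_SIZE)
    ]
    for p in procs:
        p.start()
    # One result per receiving rank.
    results = dict(queue.get() for _ in PAIRS)
    for p in procs:
        p.join()
        assert p.exitcode == 0, f"worker exited with code {p.exitcode}"
    return results


if __name__ == "__main__":
    print(run("gloo"))
```

On CPU, each receiver should end up with a tensor filled with its sender's rank. Calling `run("nccl")` exercises the same two concurrent pairs over NCCL.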

The traceback is as follows:

```
  File "/tmp/ray/session_2024-86-1_20-30-85_58847_7961/runtime_resources/working_dir_files/_ray_pkg_72b6f248418639b8/profiling/profiling.py", line 129, in network_profile_secondar
    dist.recv(recv_data, src=src_rank)
  File "/home/mzz/miniconda3/envs/hetis/lib/python3.8/site-packages/torch/distributed/c10d_logger.py", line 47, in wrapper
    return func(*args, **kwargs)
  File "/home/mzz/miniconda3/envs/hetis/lib/python3.8/site-packages/torch/distributed/distributed_c10d.py", line 1648, in recv
    pg.recv([tensor], src, tag).wait()
torch.distributed.DistBackendError: NCCL error in: …/torch/csrc/distributed/c10d/ProcessGroupNCCL.cpp:13, internal error - please report this issue to the NCCL developers, NCCL version 2.18.1
ncclInternalError: Internal check failed.
Last error:
Socket recv failed while polling for opId=0x7fa840084e30
```

Is this because torch/NCCL allows only one P2P communication at a time?

BR