I’m trying to write a model that approximates the underlying function behind some data (I have a lot of (x, y) pairs).

Here is the model architecture:

    import torch
    from torch.nn import Linear

    class Net(torch.nn.Module):
        def __init__(self):
            super(Net, self).__init__()
            self.fc1 = Linear(1, 50)  # 1 input feature -> 50 hidden units
            self.fc2 = Linear(50, 1)  # 50 hidden units -> 1 output

        def forward(self, x):
            sigmoid = torch.nn.Sigmoid()
            x = self.fc1(x)
            x = sigmoid(x)
            x = self.fc2(x)
            return x

    model = Net()

The X values are in a numpy array, X = [[366922500], [696530521], …], and the Y values are in a numpy array named “res”, formatted as res = [[0.0], [-652.2099712329232], …].

And here is how I am attempting to train it:

    import matplotlib.pyplot as plt
    from torch import Tensor
    from torch.autograd import Variable
    from torch.nn import L1Loss
    from torch.optim import Rprop

    # parameters
    num_epochs = 200
    learning_rate = 0.1

    # define the loss function
    critereon = L1Loss()

    # define the optimizer
    optimizer = Rprop(model.parameters(), lr=learning_rate)

    predictions = []

    # training loop: one optimizer step per (x, y) pair
    for epoch in range(num_epochs):
        epoch_loss = 0
        predictions = []
        for ix in range(len(X)):
            y_pred = model(Variable(Tensor(X[ix])))
            # print(X[ix], res[ix], y_pred.item())
            predictions.append(y_pred.item())
            loss = critereon(y_pred, Variable(Tensor(res[ix])))
            epoch_loss += loss.data.item()
            optimizer.zero_grad()
            loss.backward()
            optimizer.step()
        epoch_loss = epoch_loss/len(X)
        print("Epoch: {} Loss: {}".format(epoch, epoch_loss))

    # compare the predictions from the final epoch with the true values
    plt.plot(X,predictions)
    plt.plot(X,res)
    plt.show()

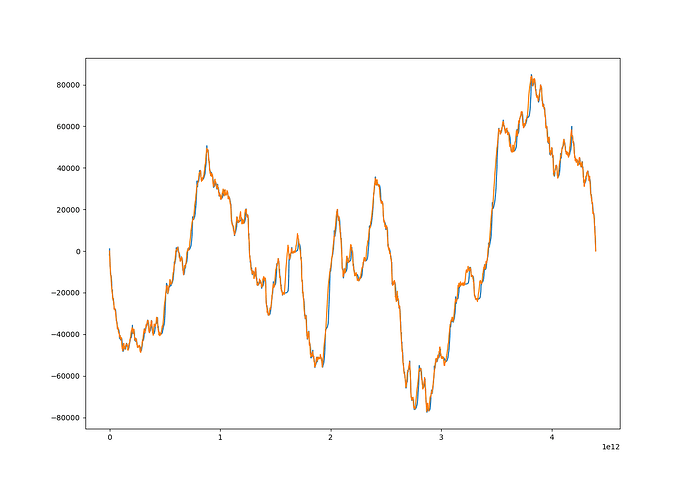

The final three lines of that snippet are so I can see how the predictions during the final training loop compare to the true Y values. Orange are the true values, blue are the predictions.

The loss values decrease over the course of training, and the predictions do improve after each round of training.
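One thing that may matter here: the `predictions` list is filled during training, and each value is computed just before a weight update, so the blue curve does not come from a single set of weights. A separate evaluation pass with fixed weights could be sketched as follows. This is only a sketch: `evaluate` is a hypothetical helper name, the `Sequential` model is a stand-in with the same layer sizes as `Net`, and only the two X values quoted earlier are used.

```python
import numpy as np
import torch

def evaluate(model, X):
    """Return predictions for every row of X using the current, fixed weights."""
    model.eval()
    with torch.no_grad():  # no gradients are needed just to plot predictions
        inputs = torch.as_tensor(X, dtype=torch.float32)  # shape (N, 1)
        return model(inputs).squeeze(1).tolist()

# Stand-in model (assumption): same layer sizes as Net, untrained
model = torch.nn.Sequential(
    torch.nn.Linear(1, 50),
    torch.nn.Sigmoid(),
    torch.nn.Linear(50, 1),
)

# Only the two input values quoted in the post
X = np.array([[366922500], [696530521]])
preds = evaluate(model, X)
print(preds)
```

Calling something like this at the end of each epoch, instead of collecting predictions inside the update loop, would show what the model outputs for all inputs at one point in training.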

Now, I am trying to predict using this model, doing the following:

    preds = []

    for idx in range(len(X)):
        pred = model(Variable(Tensor(X[idx])))
        print(X[idx], res[idx], pred.item())
        preds.append(pred.item())

    plt.plot(X,preds)
    plt.plot(X,res)
    plt.show()

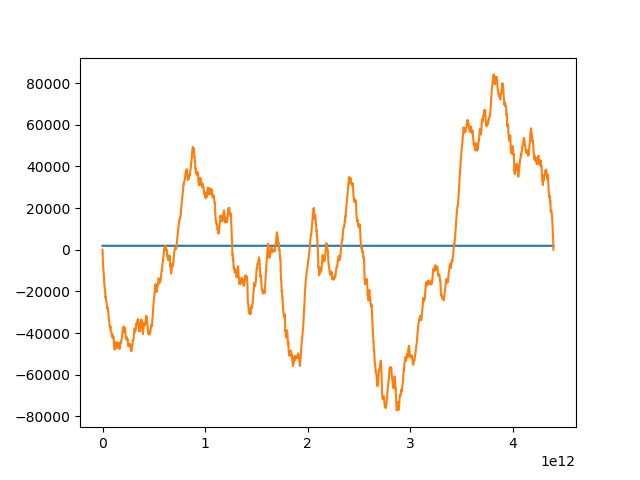

However, when I do this, the model just predicts one value for the entirety of the X values, resulting in this plot:

Does anyone have any idea why this is happening? During training, the predictions seem to be correct, but after training, the model predicts one value for all the inputs. These are the same inputs it was trained on, and the same inputs used for the first plot, so I have no idea what is going on.
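One check that might narrow this down is whether the sigmoid saturates for inputs of this size. The sketch below uses plain Python rather than the model itself. The weight `w`, bias `b`, and `scale` are made-up illustrative values, not taken from the trained network, and only the two X values quoted earlier are used.

```python
import math

def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

# The two inputs quoted in the post
xs = [366922500.0, 696530521.0]

# Made-up weight and bias for a single hidden unit (assumption)
w, b = 0.3, -0.1

# Raw inputs: w * x is around 1e8, so both activations saturate to 1.0
activations = [sigmoid(w * x + b) for x in xs]
print(activations)

# Same inputs divided by a hypothetical scale so they are of order 1
scale = 1e9
scaled = [sigmoid(w * (x / scale) + b) for x in xs]
print(scaled)
```

If every hidden unit behaves like the first case, the hidden layer would look the same for every X and the output could not depend on the input, which would match the flat line in the second plot. I have not confirmed this on the real model.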