When using the following tensors:

prediction = torch.randn(3, 5)

# tensor([[ 1.0732, 1.0451, 0.6778, 1.9992, 0.2216],

# [-0.1783, 0.7814, 0.3577, -0.8458, -0.1871],

# [ 0.7581, 1.2827, 0.2930, -1.0155, -1.0184]])

target = torch.randn(3, 5)

# tensor([[-1.1133, 0.7325, -0.8611, 0.3197, -0.0146],

# [-0.5205, 1.2527, 0.9034, 0.1704, -1.1623],

# [-0.7035, 2.2432, 1.2100, -1.9930, -0.9532]])

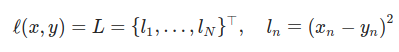

to calculate the MSE loss without reduction, I get the following result:

mse_loss = torch.nn.MSELoss(reduction="none")

loss = mse_loss(prediction, target)

# tensor([[4.7804e+00, 9.7749e-02, 2.3682e+00, 2.8209e+00, 5.5801e-02],

# [1.1709e-01, 2.2212e-01, 2.9780e-01, 1.0326e+00, 9.5105e-01],

# [2.1364e+00, 9.2253e-01, 8.4085e-01, 9.5541e-01, 4.2508e-03]])

But in that case, shouldn't the result be a tensor with only three values, since the number of elements (N) in target and prediction is equal to 3?
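To make the shapes concrete, here is a minimal sketch comparing `reduction="none"` with a per-row average. It assumes that "three values" means one loss per row of the 3×5 tensors; the seed and the names `elementwise`, `per_row`, and `scalar` are illustrative and not part of the original snippet.

```python
import torch

torch.manual_seed(0)  # arbitrary seed, only so the run is repeatable

prediction = torch.randn(3, 5)
target = torch.randn(3, 5)

# reduction="none" keeps one squared error per element,
# so the output has the same shape as the inputs: (3, 5).
elementwise = torch.nn.MSELoss(reduction="none")(prediction, target)
print(elementwise.shape)

# Averaging over the second dimension gives one value per row: shape (3,).
per_row = elementwise.mean(dim=1)
print(per_row.shape)

# reduction="mean" averages over all 15 elements and returns a scalar.
scalar = torch.nn.MSELoss(reduction="mean")(prediction, target)
print(scalar.shape)
```

With `reduction="none"` the squared error is computed for each of the 3 × 5 = 15 elements, so a three-value result only appears after reducing over the second dimension explicitly.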