I think I found a mistake in the "Automatic differentiation package - torch.autograd" page of the PyTorch 2.1 documentation.

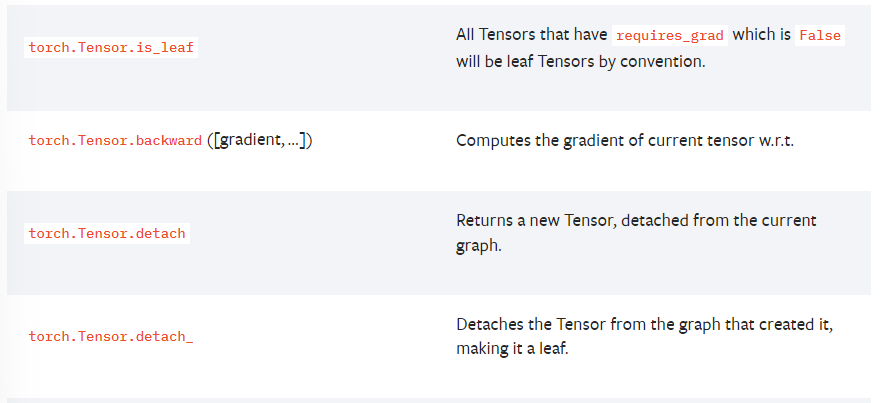

The explanations of torch.Tensor.is_leaf & torch.Tensor.detach_ imply that a Tensor with requires_grad = False is a leaf, but exactly the opposite is the case, since the gradient is calculated w.r.t. all leaves. Am I wrong?

Kind Regards

Hi Milan,

A simple explanation -

A leaf tensor is any tensor that was not produced by an operation that autograd recorded.

When a tensor is first created, it is a leaf node/tensor.

Basically, all inputs and weights of a neural network are leaf tensors and hence leaf nodes in the computational graph.
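To check this on an actual layer, here is a small sketch using `torch.nn.Linear` (the layer sizes and the random input are arbitrary choices for illustration). The layer's parameters and the raw input are leaves, while the layer's output is not:

```python
import torch
import torch.nn as nn

layer = nn.Linear(3, 2)   # weight and bias are parameters (requires_grad=True)
x = torch.randn(4, 3)     # plain input tensor (requires_grad=False)
out = layer(x)            # produced by ops that autograd records

print(layer.weight.is_leaf, layer.weight.requires_grad)  # True True
print(x.is_leaf, x.requires_grad)                        # True False
print(out.is_leaf, out.requires_grad)                    # False True
```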

Whenever an operation that autograd can track (this is important) is performed on a leaf tensor, the tensor it produces is not a leaf. The original tensor itself stays a leaf.

See:

```python
import torch

x = torch.tensor([1.0, 2.0])
print(x.is_leaf)        # True
print(x.requires_grad)  # False

y = (x * 2).sum()
print(y.is_leaf)        # True
print(y.requires_grad)  # False
```

Although y is the result of operations on x, y is still a leaf because autograd isn't tracking anything: x doesn't require grad, so no operation is recorded.
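One way to see what autograd has recorded is the `grad_fn` attribute: tensors created directly by the user, and results of untracked operations, have `grad_fn` set to `None` and report `is_leaf=True`. A minimal sketch contrasting the example above with the same computation on a tensor that requires grad (`x2` and `y2` are just illustrative names):

```python
import torch

x = torch.tensor([1.0, 2.0])                    # requires_grad=False
y = (x * 2).sum()
print(y.grad_fn)                                # None: nothing was recorded
print(y.is_leaf)                                # True

x2 = torch.tensor([1.0, 2.0], requires_grad=True)
y2 = (x2 * 2).sum()
print(y2.grad_fn is not None)                   # True: the ops were recorded
print(x2.is_leaf, y2.is_leaf)                   # True False: x2 stays a leaf
```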

As for your question: not necessarily. Whether a tensor is a leaf has less to do with its requires_grad attribute and more to do with whether autograd recorded the operation that created it.

As you saw above, the opposite is not the case: a tensor with requires_grad=False can be a leaf. In fact, the docs state that all tensors with requires_grad=False are leaves by convention.
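This also resolves the concern about gradients being computed w.r.t. all leaves: `backward()` only fills in `.grad` for leaves that have `requires_grad=True`. A leaf with `requires_grad=False` (a constant, say) is simply left with `.grad` set to `None`. A small sketch (the tensor names and values are made up for illustration):

```python
import torch

w = torch.tensor([1.0, 2.0], requires_grad=True)  # leaf that requires grad
c = torch.tensor([3.0, 4.0])                      # leaf with requires_grad=False
h = w * c                                         # non-leaf: produced by a recorded op
loss = h.sum()
loss.backward()

print(w.is_leaf, w.grad)  # True tensor([3., 4.])  (d loss / d w = c)
print(c.is_leaf, c.grad)  # True None  (no gradient is computed for c)
print(h.is_leaf)          # False
```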

detach simply detaches a tensor from its current computation graph and returns a new tensor that shares the same data, is a leaf, and has requires_grad=False.

detach_ does the same thing in place: it detaches the tensor itself instead of creating a new one.
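A short sketch of the difference (the names are illustrative): `detach()` leaves the original tensor in the graph and gives back a new leaf that shares its memory, while `detach_()` turns the tensor itself into a leaf. Note that `b` here is not a view; the docs say views cannot be detached in place.

```python
import torch

a = torch.tensor([1.0, 2.0], requires_grad=True)
b = a * 2                              # non-leaf, recorded by autograd

d = b.detach()                         # new tensor without grad history
print(d.is_leaf, d.requires_grad)      # True False
print(d.data_ptr() == b.data_ptr())    # True: d shares b's memory
print(b.is_leaf)                       # False: b itself is unchanged

b.detach_()                            # detach b in place
print(b.is_leaf, b.requires_grad)      # True False
```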

Does this answer your question?


Thank you for the clarification!

I have a follow-up question: why is it that

```python
import torch

a = torch.tensor([1., 2.], requires_grad=True)
b = 2 * a
c = a.sum()
d = (2 * a).sum()

print(a.is_leaf)  # True
print(b.is_leaf)  # False
print(c.is_leaf)  # False
print(d.is_leaf)  # False
```

but

```python
a = torch.tensor([1., 2.], requires_grad=False)
b = 2 * a
c = a.sum()
d = (2 * a).sum()

print(a.is_leaf)  # True
print(b.is_leaf)  # True
print(c.is_leaf)  # True
print(d.is_leaf)  # True
```

In the first snippet, since a has requires_grad=True, the autograd engine is now tracking every operation involving a.

Hence, the operations that produce b, c and d are all recorded, which is why they aren't leaves anymore.

In the second snippet, a has requires_grad=False, so autograd records nothing and b, c and d are all leaves. Conceptually, this is exactly the same as the code sample in my previous reply.
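To make "what autograd tracks" concrete, here is a sketch that inspects `grad_fn` for the first snippet and then repeats the same operations inside `torch.no_grad()`, which tells autograd not to record anything even though `a` still has `requires_grad=True` (`b_ng` and `c_ng` are hypothetical names for illustration):

```python
import torch

a = torch.tensor([1., 2.], requires_grad=True)
b = 2 * a
c = a.sum()
print(type(b.grad_fn).__name__)  # MulBackward0
print(type(c.grad_fn).__name__)  # SumBackward0

with torch.no_grad():            # autograd records nothing in this block
    b_ng = 2 * a
    c_ng = a.sum()

print(b_ng.is_leaf, b_ng.requires_grad)  # True False
print(c_ng.is_leaf, c_ng.requires_grad)  # True False
```

So the same tensor `a` can produce leaves or non-leaves depending only on whether autograd is recording.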

Let me know if you have further questions,

Srishti


Thanks, you helped me a lot!