I think I’m confused about how the cross-entropy loss relates to the implementation of nn.CrossEntropyLoss. As far as I know, the general formula for cross-entropy loss looks like this:

$$L(y, s) = -\sum_{i=1}^{c} y_i \log(s_i)$$

where $c$ is the number of classes and $s_i$ is the $i$-th output of the softmax.
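To make sure I'm reading the formula right, here is a minimal pure-Python sketch of it (no PyTorch involved). The helper names `softmax` and `cross_entropy` are my own, and I'm assuming `y` is a target distribution such as a one-hot list.

```python
import math

def softmax(logits):
    # Subtracting the max improves numerical stability and leaves the result unchanged.
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy(y, s):
    # L(y, s) = -sum_i y_i * log(s_i); terms with y_i == 0 contribute nothing, so skip them.
    return -sum(yi * math.log(si) for yi, si in zip(y, s) if yi != 0)

s = softmax([2.0, 1.0, 0.1])   # hypothetical raw scores for 3 classes
loss = cross_entropy([1, 0, 0], s)  # one-hot target: class 0
```

With a one-hot `y`, only the term for the target class survives, so `loss` is just `-log(s[0])`.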

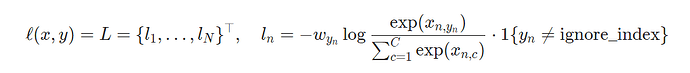

However, when I read the documentation for nn.CrossEntropyLoss, there doesn't seem to be any $y_i$ term (the entries of the one-hot vector indicating the target class).
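One way to check whether the two forms agree: if $y$ is one-hot with target class $t$, the sum collapses to $-\log(s_t)$, and substituting the softmax gives $-x_t + \log\sum_j e^{x_j}$, which I believe is the class-index form the docs write for raw scores $x$ (nn.CrossEntropyLoss takes unnormalized logits plus a class index, not softmax outputs and a one-hot vector). The sketch below compares the two numerically in pure Python; the function names are hypothetical.

```python
import math

def ce_one_hot(logits, target):
    # Generic formula: build softmax outputs s and a one-hot y, then sum -y_i * log(s_i).
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    s = [e / total for e in exps]
    y = [1.0 if i == target else 0.0 for i in range(len(logits))]
    return -sum(yi * math.log(si) for yi, si in zip(y, s) if yi != 0.0)

def ce_class_index(logits, target):
    # Class-index form: -x[target] + log(sum_j exp(x[j])), via a stable log-sum-exp.
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return -logits[target] + log_sum_exp

logits = [2.0, 1.0, 0.1]  # hypothetical raw scores
for t in range(len(logits)):
    print(t, ce_one_hot(logits, t), ce_class_index(logits, t))
```

Both columns should match up to floating-point error. If PyTorch is installed, `torch.nn.functional.cross_entropy(torch.tensor([logits]), torch.tensor([t]))` should give the same value for each `t`.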

Can anyone help clear up my confusion?