Hi Everyone,

I exported a PyTorch model to ONNX using the torch.onnx.export() function.

The model has a few Conv nodes, and after exporting I observed that the model weights have changed.

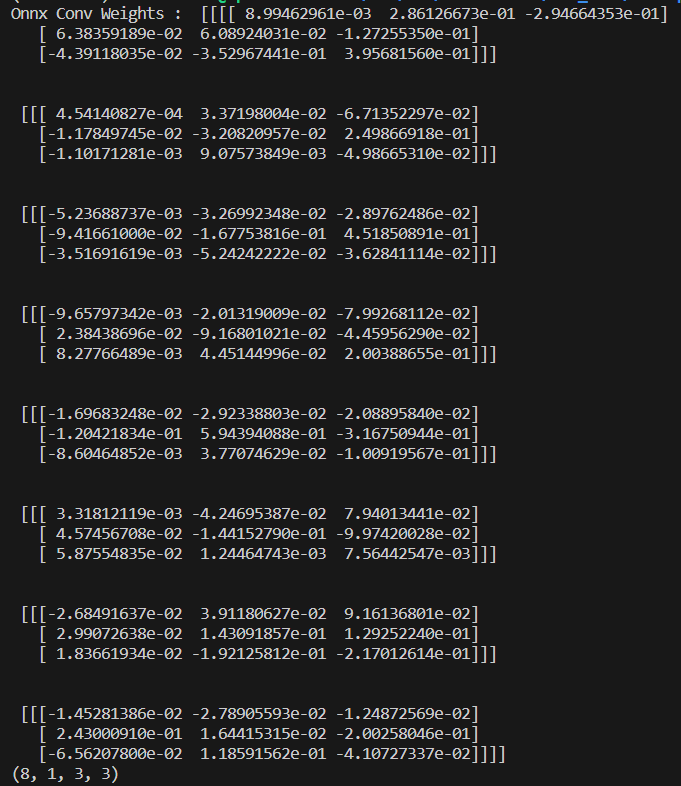

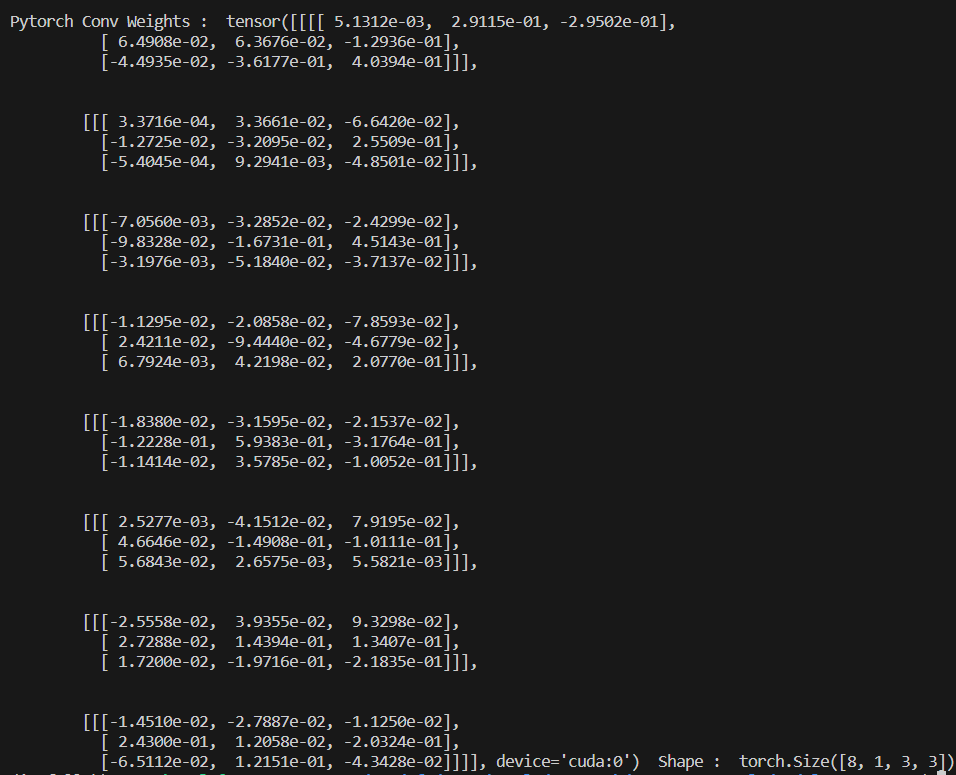

I have attached sample conv weights from both PyTorch (1.13.0) and ONNX (1.15.0).

Observation: element [0][0] of all eight 3x3 matrices has changed significantly.
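For anyone who wants to reproduce this kind of comparison, here is a minimal sketch of how the two sets of weights could be diffed. It only uses NumPy and assumes both sets have already been converted to dicts of arrays. The function name `compare_weights`, the tensor name `conv1.weight`, and the demo values are all made up for illustration; they are not the weights from my model.

```python
import numpy as np

def compare_weights(torch_weights, onnx_weights):
    """Compare two dicts mapping tensor name -> NumPy array.

    Returns {name: (max_abs_diff, index_of_max_diff)} for every tensor that
    exists in both dicts with the same shape, and {name: None} when the
    shapes differ. Tensors missing from onnx_weights are skipped.
    """
    report = {}
    for name, w_torch in torch_weights.items():
        if name not in onnx_weights:
            continue  # the exporter may have renamed or removed this tensor
        w_onnx = onnx_weights[name]
        if w_torch.shape != w_onnx.shape:
            report[name] = None
            continue
        # Compute the difference in float64 so the comparison itself
        # does not add float32 rounding error.
        diff = np.abs(w_torch.astype(np.float64) - w_onnx.astype(np.float64))
        idx = np.unravel_index(np.argmax(diff), diff.shape)
        report[name] = (float(diff[idx]), tuple(int(i) for i in idx))
    return report

# Hypothetical demo data: 8 kernels of shape 3x3 (NOT the real model weights).
rng = np.random.default_rng(0)
w = rng.standard_normal((8, 1, 3, 3)).astype(np.float32)
w_changed = w.copy()
w_changed[:, :, 0, 0] += 0.5  # perturb element [0][0] of every 3x3 kernel

report = compare_weights({"conv1.weight": w}, {"conv1.weight": w_changed})
for name, result in report.items():
    print(name, result)
```

With a real model, the first dict could be built from `model.state_dict()` (calling `.detach().cpu().numpy()` on each tensor) and the second from `onnx.load(path).graph.initializer` using `onnx.numpy_helper.to_array`. Note that the initializer names in the exported file may not match the PyTorch parameter names, so matching by name is an assumption that may need adjusting.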

If we were talking about inference outputs or input data/activations, I would accept some variance.

But we are looking at model weights here.

I am trying to understand what happens under the hood of torch.onnx.export.

Is there any way to keep the weights unchanged (or with only very minimal precision changes)? And what is the actual reason for the difference in the weights after exporting?
