Hi, I was working with the Conv1d layer and noticed a strange inference slowdown when comparing two ways of passing the same input through it. Let's say we have:

```python
import numpy as np
import torch
import torch.nn as nn

# Variant 1: the 300 features are the last axis of a 4D input; the kernel spans all of them
conv_1 = nn.Conv1d(in_channels=1, out_channels=20, kernel_size=(1, 300))
conv_1.weight.data.fill_(0.01)
conv_1.bias.data.fill_(0.01)

# Variant 2: the 300 features are the input channels; kernel_size=1
conv_2 = nn.Conv1d(in_channels=300, out_channels=20, kernel_size=1)
conv_2.weight.data.fill_(0.01)
conv_2.bias.data.fill_(0.01)

x1 = torch.FloatTensor(np.ones((10, 1, 100000, 300)))
out1 = conv_1(x1).squeeze(3)

x2 = torch.FloatTensor(np.ones((10, 300, 100000)))
out2 = conv_2(x2)

torch.allclose(out1, out2, atol=1e-6)
>>> True
```

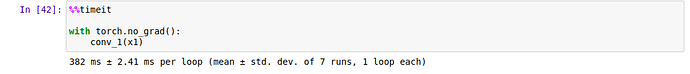

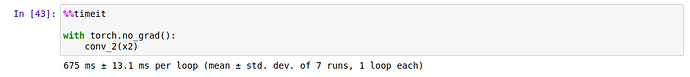

Then I tried to measure performance speed for conv_1 and conv_2 and got the following results:

Can someone please explain this almost 2x performance degradation, and tell me whether the issue is reproducible?
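For anyone who wants to try reproducing this, here is a minimal timing sketch. It is not the exact benchmark behind the screenshots above. The helper `time_forward` is a hypothetical name, the tensors are much smaller than in the post so it runs quickly, and the first layer is written as `nn.Conv2d` (my substitution, since newer PyTorch versions may reject a 4D input to `Conv1d`), which should compute the same thing as the `Conv1d` with a `(1, 300)` kernel.

```python
import time

import torch
import torch.nn as nn


def time_forward(module, x, repeats=5):
    """Return the mean wall-clock seconds per forward pass (hypothetical helper)."""
    with torch.no_grad():
        module(x)  # warm-up run, excluded from the measurement
        start = time.perf_counter()
        for _ in range(repeats):
            module(x)
        return (time.perf_counter() - start) / repeats


# Assumption: Conv2d stands in for the Conv1d-with-2D-kernel layer from the post.
conv_a = nn.Conv2d(in_channels=1, out_channels=20, kernel_size=(1, 300))
conv_b = nn.Conv1d(in_channels=300, out_channels=20, kernel_size=1)
for conv in (conv_a, conv_b):
    conv.weight.data.fill_(0.01)
    conv.bias.data.fill_(0.01)

# Scaled-down inputs (the post uses batch 10 and length 100000).
xa = torch.ones(2, 1, 1000, 300)
xb = torch.ones(2, 300, 1000)

with torch.no_grad():
    out_a = conv_a(xa).squeeze(3)
    out_b = conv_b(xb)

print("outputs match:", torch.allclose(out_a, out_b, atol=1e-4))
print("4D input, (1, 300) kernel: %.6f s" % time_forward(conv_a, xa))
print("3D input, kernel_size=1:   %.6f s" % time_forward(conv_b, xb))
```

The absolute numbers will depend on the machine, the PyTorch build, and the number of threads, so it is probably worth running each variant several times and comparing the ratio rather than the raw times.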

Config:

PyTorch==1.6.0 via pip

Operating System: Ubuntu 18.04.5 LTS

Kernel: Linux 4.15.0-123-generic

CPU: Intel(R) Core™ i5-7200U CPU @ 2.50GHz