Hi, I read a paper and I want to convert its neural network architecture from a 3D CNN to a 2D CNN.

This is the picture of the 3D CNN architecture:

I’m confused because no kernel size is given for the conv layers, and I don’t know how to set the parameter of PyTorch’s PReLU.

In this paper the dimensions are represented in (H, W, D, C) format (H - height, W - width, D - (temporal) depth, C - channels). So the input, (32, 32, 10, 1), is 10 consecutive 32x32 frames, grayscale because C equals 1. Since you can see how the number of channels changes at each level, you know how to define the in_channels and out_channels of nn.Conv3d or nn.Conv2d.

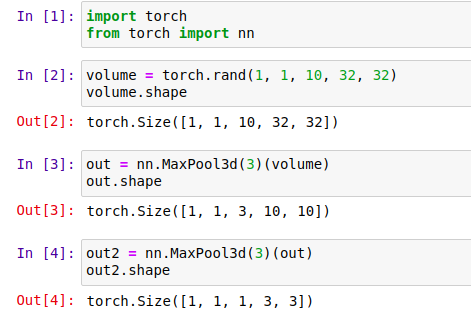

It appears that both Convolution3D layers have kernel sizes 1x1x1 since they don’t affect the inputs H, W and D size. The kernel size of MaxPooling layers is 3x3x3, which can be seen by the difference in feature sizes after they’re applied. Here’s a screenshot that can make it clearer
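As a quick sanity check on that reading: for max pooling (dilation 1, no padding) each axis shrinks as out = floor((in - k) / stride) + 1, and PyTorch’s pooling layers default stride to kernel_size. The helper name `pool_out` is just for illustration. A 1x1x1 conv leaves every axis unchanged, while a 3-wide pool takes 32 to 10 and 10 to 3:

```python
from typing import Optional


def pool_out(size: int, kernel: int, stride: Optional[int] = None, padding: int = 0) -> int:
    """Output length of one axis after a max-pool window (dilation 1,
    ceil_mode=False). Like nn.MaxPool3d, stride defaults to the kernel size."""
    if stride is None:
        stride = kernel
    return (size + 2 * padding - kernel) // stride + 1


# Track H/W (starting at 32) and D (starting at 10 frames) through two pools.
hw = [32]
d = [10]
for _ in range(2):
    hw.append(pool_out(hw[-1], 3))
    d.append(pool_out(d[-1], 3))

print("H/W:", hw)  # H/W: [32, 10, 3]
print("D:", d)     # D: [10, 3, 1]
```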

Do note that the dimension order convention in PyTorch is different from the one used in the paper. PyTorch uses (N, C, D, H, W), while the paper uses the TensorFlow convention (N, H, W, D, C). N stands for batch size.
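If your data is stored in the paper’s layout, a single `permute` reorders it for `nn.Conv3d`. A minimal sketch with a random stand-in batch (the batch size of 8 is arbitrary):

```python
import torch

# Hypothetical batch in the paper's (TensorFlow-style) layout: (N, H, W, D, C)
x_tf = torch.randn(8, 32, 32, 10, 1)

# Source axes are 0=N, 1=H, 2=W, 3=D, 4=C; pick them in the order N, C, D, H, W.
# .contiguous() is optional; it just copies the data into the new memory order.
x_pt = x_tf.permute(0, 4, 3, 1, 2).contiguous()

print(x_pt.shape)  # torch.Size([8, 1, 10, 32, 32])
```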

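Set nn.PReLU’s num_parameters to the number of channels coming out of the layer before it, which gives one learnable slope per channel. (The default, num_parameters=1, shares a single slope across all channels.)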
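A small check of how that plays out: PReLU treats dim 1 of its input as the channel dimension, so its weight vector must match the conv’s out_channels. The random input below is just a stand-in for one batch of 10 frames:

```python
import torch
import torch.nn as nn

# One learnable slope per channel: match the out_channels of the preceding conv.
conv = nn.Conv3d(in_channels=1, out_channels=32, kernel_size=1)
act = nn.PReLU(num_parameters=32)  # slopes are initialised to 0.25 by default

x = torch.randn(2, 1, 10, 32, 32)  # (N, C, D, H, W)
y = act(conv(x))

print(act.weight.shape)  # torch.Size([32])
print(y.shape)           # torch.Size([2, 32, 10, 32, 32])

# With the default num_parameters=1, all channels share a single slope.
print(nn.PReLU().weight.shape)  # torch.Size([1])
```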

Basically, the first three layers of the network are:

```python
import torch.nn as nn

first_layers = [
    nn.Conv3d(in_channels=1, out_channels=32, kernel_size=1),
    nn.PReLU(num_parameters=32),
    nn.MaxPool3d(kernel_size=3),
]
```
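Stacking both conv blocks and pushing a dummy clip through them shows the shape at every step. This is a sketch: the second block’s 64 channels are an assumption taken from the 2D code posted later in this thread, since the figure itself isn’t reproduced here.

```python
import torch
import torch.nn as nn

# 3D feature extractor as read from the figure (64 channels in block 2 assumed).
features_3d = nn.Sequential(
    nn.Conv3d(1, 32, kernel_size=1), nn.PReLU(32), nn.MaxPool3d(kernel_size=3),
    nn.Conv3d(32, 64, kernel_size=1), nn.PReLU(64), nn.MaxPool3d(kernel_size=3),
)

x = torch.randn(2, 1, 10, 32, 32)  # (N, C, D, H, W): 10 grayscale 32x32 frames
for layer in features_3d:
    x = layer(x)
    print(type(layer).__name__, tuple(x.shape))
# The last line printed is: MaxPool3d (2, 64, 1, 3, 3)
```

The temporal depth collapses from 10 to 3 to 1, so each sample ends up with 64 * 1 * 3 * 3 = 576 values before the first Dense layer.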

Now you can convert everything before the Dense (nn.Linear) layers to 2D just by replacing 3d with 2d in the layer names. For the first Dense layer, you’ll have to check the output shape of the previous layer to define its in_features.
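One way to check that output shape without doing the arithmetic by hand is to run a single dummy sample through the conv part and count what comes out. `flattened_size` is a hypothetical helper, and the (1, 32, 32) input assumes one grayscale 32x32 frame, as in the code posted below:

```python
import torch
import torch.nn as nn


def flattened_size(features: nn.Module, input_shape: tuple) -> int:
    """Hypothetical helper: push one dummy sample through `features`
    and return how many values it has after flattening."""
    with torch.no_grad():
        out = features(torch.zeros(1, *input_shape))
    return out[0].numel()


# 2D version of the feature extractor (Conv3d/MaxPool3d -> Conv2d/MaxPool2d).
features_2d = nn.Sequential(
    nn.Conv2d(1, 32, kernel_size=1), nn.PReLU(32), nn.MaxPool2d(kernel_size=3),
    nn.Conv2d(32, 64, kernel_size=1), nn.PReLU(64), nn.MaxPool2d(kernel_size=3),
)

n_flat = flattened_size(features_2d, (1, 32, 32))  # (C, H, W) of one input
print(n_flat)  # 576, i.e. 64 channels * 3 * 3

first_dense = nn.Linear(n_flat, 128)
```

Recent PyTorch releases also provide nn.LazyLinear, which infers in_features on the first forward pass; the dummy-pass approach works on older versions too.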

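```python
import torch.nn as nn
from torch.nn import (Conv2d, Dropout, Linear, MaxPool2d, Module, PReLU,
                      ReLU, Sequential)


class Flatten(nn.Module):
    # Collapse (N, C, H, W) into (N, C*H*W) for the Linear layers
    def forward(self, input):
        return input.view(input.size(0), -1)


class ActionNet(Module):
    def __init__(self, num_class=4):
        super(ActionNet, self).__init__()
        # Shape comments assume a single-channel 32x32 input
        self.cnn_layer = Sequential(
            # conv1: (N, 1, 32, 32) -> (N, 32, 32, 32) -> pool -> (N, 32, 10, 10)
            Conv2d(in_channels=1, out_channels=32, kernel_size=1),
            PReLU(num_parameters=32),
            MaxPool2d(kernel_size=3),
            # conv2: (N, 32, 10, 10) -> (N, 64, 10, 10) -> pool -> (N, 64, 3, 3)
            Conv2d(in_channels=32, out_channels=64, kernel_size=1),
            PReLU(num_parameters=64),
            MaxPool2d(kernel_size=3),
            Flatten(),  # 64 * 3 * 3 = 576 features
            Linear(576, 128),
            ReLU(inplace=True),
            Dropout(0.5),
            Linear(128, num_class),
        )

    def forward(self, x):
        x = self.cnn_layer(x)
        return x
```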

Hi, here is my network. Please correct me if I’m wrong. @ibro45

Oh, and also, do I need batch normalization? @ibro45

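Seems good! I would use batchnorm; just remember to set bias=False in the convolutional layer before it. BatchNorm subtracts the per-channel mean, which cancels any constant bias the conv adds, and it has its own learnable shift anyway.

Any purpose to set bias=False in DenseNet torchvision?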
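To see the bias cancellation concretely, the sketch below gives two 1x1 convs identical weights, one with a bias and one without, and feeds both through the same BatchNorm in training mode (where it normalizes with batch statistics). It then shows one possible conv block with batchnorm; the Conv → BatchNorm → PReLU → pool ordering is a common choice, not something the paper specifies.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Same weights; only one of the convs has a bias.
conv_b = nn.Conv2d(32, 64, kernel_size=1, bias=True)
conv_nb = nn.Conv2d(32, 64, kernel_size=1, bias=False)
with torch.no_grad():
    conv_nb.weight.copy_(conv_b.weight)

bn = nn.BatchNorm2d(64)  # freshly built modules are in training mode
x = torch.randn(8, 32, 10, 10)

out_b = bn(conv_b(x))
out_nb = bn(conv_nb(x))
print(torch.allclose(out_b, out_nb, atol=1e-4))  # True: the bias cancels out

# One possible conv1 block with batchnorm (conv bias dropped).
block = nn.Sequential(
    nn.Conv2d(1, 32, kernel_size=1, bias=False),
    nn.BatchNorm2d(32),
    nn.PReLU(32),
    nn.MaxPool2d(kernel_size=3),
)
print(block(torch.randn(2, 1, 32, 32)).shape)  # torch.Size([2, 32, 10, 10])
```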