Hi, I need help converting CNN-LSTM model code from Keras to PyTorch.

Function of this Code

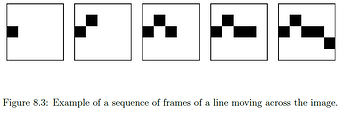

This CNN-LSTM model solves a moving-square video prediction problem (shown in the figure). The input is a sequence of image frames, each of size (50, 50). The output is a class prediction: left or right.

I want to reproduce this model in PyTorch because I need this CNN-LSTM architecture to solve a similar problem.

Here is the original model:

```python
from random import random, randint
import datetime

from numpy import array, zeros
from matplotlib import pyplot
from keras.models import Sequential
from keras.layers import Conv2D, MaxPooling2D, LSTM, Dense, Flatten, TimeDistributed
from keras.callbacks import TensorBoard


# generate the next frame in the sequence
def next_frame(last_step, last_frame, column):
    # define the scope of the next step
    lower = max(0, last_step - 1)
    upper = min(last_frame.shape[0] - 1, last_step + 1)
    # choose the row index for the next step
    step = randint(lower, upper)
    # copy the prior frame
    frame = last_frame.copy()
    # add the new step
    frame[step, column] = 1
    return frame, step


# generate a sequence of frames of a dot moving across an image
def build_frames(size):
    frames = list()
    # create the first frame
    frame = zeros((size, size))
    step = randint(0, size - 1)
    # decide if we are heading left or right
    right = 1 if random() < 0.5 else 0
    col = 0 if right else size - 1
    frame[step, col] = 1
    frames.append(frame)
    # create all remaining frames
    for i in range(1, size):
        col = i if right else size - 1 - i
        frame, step = next_frame(step, frame, col)
        frames.append(frame)
    return frames, right


# generate multiple sequences of frames and reshape for network input
def generate_examples(size, n_patterns):
    X, y = list(), list()
    for _ in range(n_patterns):
        frames, right = build_frames(size)
        X.append(frames)
        y.append(right)
    # resize as [samples, timesteps, width, height, channels]
    X = array(X).reshape(n_patterns, size, size, size, 1)
    y = array(y).reshape(n_patterns, 1)
    return X, y


# generate sequence of frames
size = 6
frames, right = build_frames(size)
# plot all frames
pyplot.figure()
for i in range(size):
    # create a grayscale subplot for each frame
    pyplot.subplot(1, size, i + 1)
    pyplot.imshow(frames[i], cmap='Greys')
    # turn off the axes to make it clearer
    ax = pyplot.gca()
    ax.get_xaxis().set_visible(False)
    ax.get_yaxis().set_visible(False)
# show the plot
pyplot.show()

# configure problem
size = 50

# define the model
model = Sequential()
model.add(TimeDistributed(Conv2D(2, (2, 2), activation='relu'),
                          input_shape=(None, size, size, 1)))
model.add(TimeDistributed(MaxPooling2D(pool_size=(2, 2))))
model.add(TimeDistributed(Flatten()))
model.add(LSTM(50))
model.add(Dense(1, activation='sigmoid'))
model.compile(loss='binary_crossentropy', optimizer='adam', metrics=['acc'])
model.summary()

log_dir = "logs/fit/" + datetime.datetime.now().strftime("%Y%m%d-%H%M%S")
tensorboard_callback = TensorBoard(log_dir=log_dir, histogram_freq=1)

# fit model
X, y = generate_examples(size, 5000)
model.fit(X, y, batch_size=32, epochs=1, callbacks=[tensorboard_callback])

# evaluate model
X, y = generate_examples(size, 100)
loss, acc = model.evaluate(X, y, verbose=0)
print("loss: %f, acc: %f" % (loss, acc * 100))

# prediction on new data
X, y = generate_examples(size, 1)
# predict_classes() was removed from recent Keras versions; threshold the sigmoid output instead
yhat = (model.predict(X, verbose=0) > 0.5).astype("int32")
expected = "Right" if y[0] == 1 else "Left"
predicted = "Right" if yhat[0] == 1 else "Left"
print("Expected: %s, Predicted: %s" % (expected, predicted))
```
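Two details of this Keras model matter when porting it. First, the Keras layers here are channels-last, so `generate_examples` returns arrays shaped `(samples, timesteps, height, width, channels)`, while PyTorch's `nn.Conv2d` expects channels-first input `(N, C, H, W)`. Second, each frame reaches the LSTM as a flat vector: a (50, 50) frame becomes 49 × 49 × 2 after the valid 2 × 2 convolution with 2 filters, then 24 × 24 × 2 after 2 × 2 max pooling, i.e. 1152 features per timestep. A minimal sketch checking both, assuming a dummy zero array stands in for the output of `generate_examples` (PyTorch flattens in (C, H, W) order rather than Keras's (H, W, C), which does not matter when training from scratch):

```python
import numpy as np
import torch
import torch.nn as nn

size = 50        # frame height/width, as in the Keras script
timesteps = 50   # generate_examples uses `size` frames per sequence
n_samples = 4    # small dummy batch (assumption, for illustration only)

# Stand-in for generate_examples(size, n_samples)[0]: channels-last layout
X_np = np.zeros((n_samples, timesteps, size, size, 1), dtype=np.float32)

# Keras layout (N, T, H, W, C) -> PyTorch layout (N, T, C, H, W)
X = torch.from_numpy(X_np).permute(0, 1, 4, 2, 3).contiguous()
print(X.shape)  # torch.Size([4, 50, 1, 50, 50])

# Per-frame CNN mirroring Conv2D(2, (2, 2), relu) + MaxPooling2D((2, 2)) + Flatten()
cnn = nn.Sequential(
    nn.Conv2d(in_channels=1, out_channels=2, kernel_size=2),  # 50x50 -> 49x49
    nn.ReLU(),
    nn.MaxPool2d(kernel_size=2),                              # 49x49 -> 24x24
    nn.Flatten(),                                             # 2 * 24 * 24 = 1152
)
one_frame = X[:, 0]              # (N, C, H, W) for the first timestep
print(cnn(one_frame).shape)      # torch.Size([4, 1152])
```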

And this is my PyTorch model. I am new to PyTorch, so I am not sure whether I am doing it right, and my model does not work. Any comments would be appreciated.

```python
import torch
import torch.nn as nn


class TimeDistributed(nn.Module):
    def __init__(self, module, batch_first=True):
        # def __init__(self, module, batch_first=False):
        super(TimeDistributed, self).__init__()
        self.module = module
        self.batch_first = batch_first

    def forward(self, x):
        if len(x.size()) <= 2:
            return self.module(x)
        # Squash samples and timesteps into a single axis
        x_reshape = x.contiguous().view(-1, x.size(-1))  # (samples * timesteps, input_size)
        y = self.module(x_reshape)
        # We have to reshape Y
        if self.batch_first:
            y = y.contiguous().view(x.size(0), -1, y.size(-1))  # (samples, timesteps, output_size)
        else:
            y = y.view(-1, x.size(1), y.size(-1))  # (timesteps, samples, output_size)
        return y
```
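For reference, the wrapper above folds every dimension except the last one into a single axis. That suits the case it was written for (applying `nn.Linear` to a `(samples, timesteps, features)` tensor), but for a sequence of images it merges the channel and row axes into the "batch" axis, so `nn.MaxPool2d` and `nn.Flatten` no longer see whole images. Note also that `self.conv` in the model below is not wrapped at all, and `nn.Conv2d` only accepts 3-D or 4-D input. A small sketch of just that reshape, with example sizes chosen for illustration:

```python
import torch
import torch.nn as nn

# Case 1: sequence of feature vectors (samples, timesteps, features)
seq = torch.zeros(4, 50, 1152)
folded = seq.contiguous().view(-1, seq.size(-1))
print(folded.shape)  # torch.Size([200, 1152]): one row per (sample, timestep)
out = nn.Linear(1152, 10)(folded).view(seq.size(0), -1, 10)
print(out.shape)     # torch.Size([4, 50, 10])

# Case 2: sequence of images (samples, timesteps, channels, height, width)
frames = torch.zeros(4, 50, 1, 50, 50)
folded = frames.contiguous().view(-1, frames.size(-1))
print(folded.shape)  # torch.Size([10000, 50]): rows of pixels, not images
```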

```python
class CNN_LSTM(nn.Module):
    def __init__(self):
        super(CNN_LSTM, self).__init__()
        self.conv = nn.Conv2d(1, 2, (2, 2))
        self.relu = TimeDistributed(nn.ReLU())
        self.pool = TimeDistributed(nn.MaxPool2d(2, 2))
        self.flatten = TimeDistributed(nn.Flatten())
        self.lstm = nn.LSTM(1, 50)
        self.dense = nn.Linear(50, 1)  # equivalent to Dense in keras
        self.sigmoid = nn.Sigmoid()

    def forward(self, x):
        x = self.relu(self.conv(x))
        x = self.pool(x)
        x = self.flatten(x)
        x = self.lstm(x)
        x = self.dense(x)
        x = self.sigmoid(x)
        return x
```
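Comparing this class with the Keras model, four things stand out. The convolution receives a 5-D tensor without any time distribution. `nn.LSTM(1, 50)` declares an input size of 1 although each frame yields 1152 features. `nn.LSTM` expects `(timesteps, batch, features)` unless `batch_first=True`. And `nn.LSTM` returns a tuple `(output, (h_n, c_n))`, so its result cannot go straight into `nn.Linear`. A minimal sketch addressing all four, assuming input shaped `(batch, timesteps, 1, 50, 50)` and keeping only the last LSTM output (what Keras `LSTM(50)` returns by default). The class name `CNNLSTMSketch` is hypothetical, and the zero batch with random labels is dummy data used only to run one Adam step:

```python
import torch
import torch.nn as nn


class CNNLSTMSketch(nn.Module):
    def __init__(self, size=50, hidden=50):
        super().__init__()
        # Per-frame CNN: Conv2D(2, (2, 2), relu) -> MaxPooling2D((2, 2)) -> Flatten()
        self.cnn = nn.Sequential(
            nn.Conv2d(1, 2, kernel_size=2),
            nn.ReLU(),
            nn.MaxPool2d(kernel_size=2),
            nn.Flatten(),
        )
        n_features = 2 * ((size - 1) // 2) ** 2  # 1152 for size=50
        # batch_first=True -> the LSTM expects (batch, timesteps, features)
        self.lstm = nn.LSTM(input_size=n_features, hidden_size=hidden, batch_first=True)
        self.dense = nn.Linear(hidden, 1)  # Dense(1); the sigmoid lives in the loss

    def forward(self, x):
        # x: (batch, timesteps, channels, height, width)
        b, t = x.shape[:2]
        # "TimeDistributed": fold time into the batch axis, run the CNN, unfold again
        feats = self.cnn(x.reshape(b * t, *x.shape[2:])).reshape(b, t, -1)
        output, (h_n, c_n) = self.lstm(feats)  # nn.LSTM returns a tuple
        last = output[:, -1, :]                 # last timestep, like Keras LSTM(50)
        return self.dense(last)                 # raw logits, shape (batch, 1)


model = CNNLSTMSketch()
criterion = nn.BCEWithLogitsLoss()  # sigmoid + binary cross-entropy in one step
optimizer = torch.optim.Adam(model.parameters())

# Dummy batch (assumption): 8 sequences of 50 single-channel 50x50 frames
x = torch.zeros(8, 50, 1, 50, 50)
y = torch.randint(0, 2, (8, 1)).float()

logits = model(x)
loss = criterion(logits, y)
optimizer.zero_grad()
loss.backward()
optimizer.step()

# Predicted class (0 = left, 1 = right): threshold the sigmoid at 0.5
pred = (torch.sigmoid(logits) > 0.5).long()
```

Returning logits and using `nn.BCEWithLogitsLoss` is numerically more stable than a separate `nn.Sigmoid()` followed by `nn.BCELoss`, although that combination also works. Real training would loop over batches of 32 built from `generate_examples` output after converting it to channels-first.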

Besides, the TimeDistributed module I used comes from here.

Why do I need this TimeDistributed module?

Because I do not know how to build a CNN-LSTM architecture in PyTorch. I only know that in Keras a CNN-LSTM can be built by wrapping the entire CNN input model (one or more layers) in a TimeDistributed layer.
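PyTorch has no built-in `TimeDistributed` layer, but the idea behind the Keras wrapper is simple: apply the same module, with shared weights, to every timestep. Because PyTorch layers already handle a batch axis, one way to do this is to merge the batch and time axes, call the wrapped module once, and split the axes again. A minimal sketch of such a wrapper for batch-first inputs shaped `(batch, timesteps, ...)`; `TimeDistributed5D` is a hypothetical name, and the loop at the end only checks that folding matches running the module frame by frame:

```python
import torch
import torch.nn as nn


class TimeDistributed5D(nn.Module):
    """Apply `module` to every timestep of a (batch, timesteps, ...) tensor."""

    def __init__(self, module):
        super().__init__()
        self.module = module

    def forward(self, x):
        b, t = x.shape[:2]
        # Merge batch and time: (b, t, ...) -> (b * t, ...)
        y = self.module(x.reshape(b * t, *x.shape[2:]))
        # Split them again: (b * t, ...) -> (b, t, ...)
        return y.reshape(b, t, *y.shape[1:])


conv = nn.Conv2d(1, 2, kernel_size=2)
td_conv = TimeDistributed5D(conv)

x = torch.randn(3, 5, 1, 50, 50)  # (batch, timesteps, C, H, W)
y = td_conv(x)
print(y.shape)  # torch.Size([3, 5, 2, 49, 49])

# Same result as running the shared conv on each timestep separately
y_loop = torch.stack([conv(x[:, i]) for i in range(x.size(1))], dim=1)
print(torch.allclose(y, y_loop, atol=1e-5))  # True
```

Wrapping each layer separately (for example `TimeDistributed5D(nn.MaxPool2d(2))` and `TimeDistributed5D(nn.Flatten())`) mirrors the Keras code line by line; wrapping a whole `nn.Sequential` CNN once gives the same result with a single reshape.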