I am trying to convert a Keras model for human activity recognition to PyTorch. The Keras model reaches up to 98% accuracy, while the PyTorch model only reaches about 60%. I can't figure out the problem. My first suspect was Keras's `padding='same'`, but I have already adjusted the padding in PyTorch to match. Can you check what is wrong?
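A quick check on the padding: the `torch.nn.Conv1d` documentation gives the output length as `L_out = floor((L_in + 2*padding - dilation*(kernel_size - 1) - 1) / stride + 1)`, and `nn.MaxPool1d` documents the same formula. The plain-Python sketch below evaluates it. The helper name `conv1d_out_len` is made up for illustration. It shows that `kernel_size=3, padding=1, stride=1` keeps the length unchanged, which is what Keras `padding="same"` does at stride 1.

```python
def conv1d_out_len(l_in, kernel_size, stride=1, padding=0, dilation=1):
    # Hypothetical helper: output-length formula from the torch.nn.Conv1d docs
    return (l_in + 2 * padding - dilation * (kernel_size - 1) - 1) // stride + 1


# kernel_size=3, padding=1, stride=1 preserves the length, like Keras padding="same"
for length in (12, 50, 100):
    print(length, "->", conv1d_out_len(length, kernel_size=3, padding=1))

# The same formula covers nn.MaxPool1d(kernel_size=2, stride=2): 100 -> 50
print(conv1d_out_len(100, kernel_size=2, stride=2))
```

By this arithmetic, the padding alone does not explain the gap between the two models.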

The Keras code is as follows:

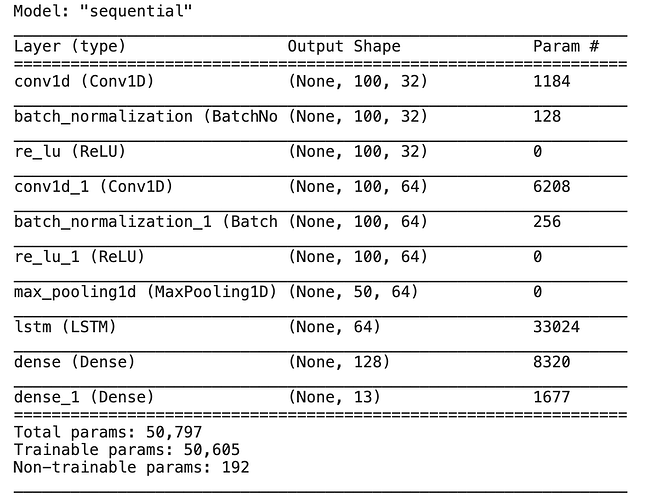

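```python
from tensorflow import keras
from tensorflow.keras import layers

model = keras.Sequential()
# 100 time steps x 12 features; Conv1D is channels-last by default
model.add(layers.Input(shape=[100, 12]))
model.add(layers.Conv1D(filters=32, kernel_size=3, padding="same"))  # -> (None, 100, 32)
model.add(layers.BatchNormalization())
model.add(layers.ReLU())
model.add(layers.Conv1D(filters=64, kernel_size=3, padding="same"))  # -> (None, 100, 64)
model.add(layers.BatchNormalization())
model.add(layers.ReLU())
model.add(layers.MaxPool1D(2))  # -> (None, 50, 64)
model.add(layers.LSTM(64))      # returns only the last time step -> (None, 64)
model.add(layers.Dense(units=128, activation='relu'))
model.add(layers.Dense(13, activation='softmax'))
model.summary()
```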
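Keras `Conv1D` is channels-last by default, so `Input(shape=[100, 12])` means 100 time steps with 12 features each. PyTorch's `nn.Conv1d` expects `(batch, channels, length)`, and `nn.LSTM` expects `(seq_len, batch, features)` unless `batch_first=True`. For reference, here is a sketch of a layer-by-layer PyTorch counterpart that keeps those layouts consistent. It assumes batches arrive in the Keras layout `(N, 100, 12)`. The class name `KerasLikeHAR` is hypothetical, and the BatchNorm arguments only mirror the Keras defaults (`momentum=0.99`, `epsilon=1e-3`). Weight initialisation is left at PyTorch's defaults, which differ from Keras's, so this is not guaranteed to reproduce the Keras accuracy.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class KerasLikeHAR(nn.Module):  # hypothetical name, for illustration only
    def __init__(self, n_features=12, n_classes=13):
        super().__init__()
        # in_channels = the 12 sensor features, not the 100 time steps
        self.conv1 = nn.Conv1d(n_features, 32, kernel_size=3, padding=1)
        # Keras BatchNormalization defaults are momentum=0.99, epsilon=1e-3;
        # PyTorch's momentum weights the new batch, so 0.99 maps to 0.01
        self.bn1 = nn.BatchNorm1d(32, eps=1e-3, momentum=0.01)
        self.conv2 = nn.Conv1d(32, 64, kernel_size=3, padding=1)
        self.bn2 = nn.BatchNorm1d(64, eps=1e-3, momentum=0.01)
        self.mp = nn.MaxPool1d(kernel_size=2, stride=2)
        # batch_first=True so the LSTM reads (batch, time, features)
        self.lstm = nn.LSTM(input_size=64, hidden_size=64, batch_first=True)
        self.fc1 = nn.Linear(64, 128)
        self.fc2 = nn.Linear(128, n_classes)

    def forward(self, x):
        # Assumed input: (N, 100, 12), Keras layout -> (N, 12, 100) for Conv1d
        x = x.permute(0, 2, 1)
        x = F.relu(self.bn1(self.conv1(x)))  # (N, 32, 100)
        x = F.relu(self.bn2(self.conv2(x)))  # (N, 64, 100)
        x = self.mp(x)                       # (N, 64, 50)
        x = x.permute(0, 2, 1)               # (N, 50, 64): 50 steps of 64 features
        out, _ = self.lstm(x)                # (N, 50, 64)
        x = out[:, -1, :]                    # last time step, like Keras LSTM(64)
        x = F.relu(self.fc1(x))
        # Raw logits: nn.CrossEntropyLoss applies log-softmax internally,
        # so the Keras softmax layer has no explicit counterpart here
        return self.fc2(x)


model = KerasLikeHAR()
logits = model(torch.randn(8, 100, 12))
print(logits.shape)  # torch.Size([8, 13])
```

The two `permute` calls do the work that Keras's channels-last convention does implicitly. The first puts features on the channel axis for the convolutions. The second puts time back on axis 1 for the batch-first LSTM.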

And my PyTorch model code is as follows:

```python
import torch.nn as nn
import torch.nn.functional as F


class CNN(nn.Module):
    def __init__(self):
        super(CNN, self).__init__()
        seq_len = 100
        # output: [, 32, 100]
        self.conv1 = nn.Conv1d(seq_len, 32, kernel_size=3, stride=1, padding=1)
        self.bn1 = nn.BatchNorm1d(32)
        # output: [, 64, 100]
        self.conv2 = nn.Conv1d(32, 64, kernel_size=3, padding=1)
        self.bn2 = nn.BatchNorm1d(64)
        # output: [, 64, 50]
        self.mp = nn.MaxPool1d(kernel_size=2, stride=2)
        # output: [, 64]
        self.lstm = nn.LSTM(6, 64, 1)
        # output: [, 128]
        self.fc1 = nn.Linear(64, 128)
        # output: [, 13]
        self.fc2 = nn.Linear(128, 13)

    def forward(self, x):
        x = self.conv1(x)
        x = self.bn1(x)
        x = F.relu(x)
        x = self.conv2(x)
        x = self.bn2(x)
        x = F.relu(x)
        x = self.mp(x)
        out, _ = self.lstm(x)
        x = out[:, -1, :]
        x = self.fc1(x)
        x = F.relu(x)
        x = self.fc2(x)
        return x
```
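One way to see what these layers actually receive is to push a dummy batch through the same layer definitions. The sketch rebuilds them standalone, not through the `CNN` class, and skips BatchNorm and ReLU because they do not change shapes. It assumes the data is fed in the Keras layout `(N, 100, 12)`. That layout matches the Keras `Input(shape=[100, 12])` and is consistent with `nn.LSTM(6, 64, 1)`, since the 12-long axis pooled by 2 gives exactly 6.

```python
import torch
import torch.nn as nn

N = 8
x = torch.randn(N, 100, 12)  # assumed input layout: (batch, 100 steps, 12 features)

# Same layer definitions as in the CNN class above (BatchNorm/ReLU omitted: shape-preserving)
conv1 = nn.Conv1d(100, 32, kernel_size=3, stride=1, padding=1)
conv2 = nn.Conv1d(32, 64, kernel_size=3, padding=1)
mp = nn.MaxPool1d(kernel_size=2, stride=2)
lstm = nn.LSTM(6, 64, 1)  # batch_first defaults to False

h = conv1(x)  # (8, 32, 12): the 100 time steps act as input channels,
              # and the kernel slides over the 12 features instead of over time
h = conv2(h)  # (8, 64, 12)
h = mp(h)     # (8, 64, 6)
out, _ = lstm(h)  # read as (seq_len=8, batch=64, input=6):
                  # the recurrence runs across the 8 samples of the batch
last = out[:, -1, :]  # batch index 63, i.e. conv channel 63, at each of the 8 "steps"

print(h.shape, out.shape)  # torch.Size([8, 64, 6]) torch.Size([8, 64, 64])
print(last.shape)          # torch.Size([8, 64])
```

Under that assumption the final shape `(N, 64)` matches what the comments expect, but only by coincidence. The convolution slides over the 12 features rather than over time, and because `batch_first` is `False`, the LSTM steps through the samples of the batch. As a result, `out[:, -1, :]` picks a single conv channel rather than the last time step.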