Hi there

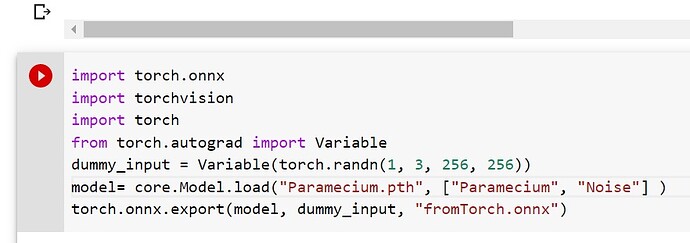

I cannot use the following code

from torch.autograd import Variable

import torch.onnx

import torch

dummy_input = Variable(torch.randn(1, 3, 256, 256))

model = torch.load('Paramecium.pth', map_location=torch.device('cpu'))

torch.onnx.export(model, dummy_input, "fromTorch.onnx")

to convert the .pth file to ONNX. Executing the last line throws the error 'collections.OrderedDict' object has no attribute 'state_dict'.
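To see where that message comes from, here is a minimal sketch that uses a tiny hypothetical stand-in network (an nn.Sequential, not the detecto model) instead of Paramecium.pth. When a file holds only a state_dict, torch.load returns a plain OrderedDict of tensors rather than a network. That dict has no state_dict method, which, judging by the error, is something the exporter tries to call on whatever it is given.

```python
import io
from collections import OrderedDict

import torch
import torch.nn as nn

# Hypothetical stand-in for the trained network; the real detecto model is different.
net = nn.Sequential(nn.Conv2d(3, 8, kernel_size=3), nn.ReLU())

# Save only the weights (the state_dict) to an in-memory buffer instead of a file.
buffer = io.BytesIO()
torch.save(net.state_dict(), buffer)
buffer.seek(0)

loaded = torch.load(buffer, map_location=torch.device("cpu"))

# What comes back is a dict of tensors, not a network.
print(type(loaded).__name__)          # OrderedDict
print(list(loaded.keys()))            # ['0.weight', '0.bias']
print(hasattr(loaded, "state_dict"))  # False, hence the AttributeError on export
```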

This is how I create the .pth file (in Colab):

from detecto import core, utils, visualize

dataset = core.Dataset('/content/drive/My Drive/Paramecium')

model = core.Model(['Paramecium', 'Noise'])

model.fit(dataset)

model.save('Paramecium.pth')

The model looks good, works, and can be visualized with visualize.show_labeled_image.

Why does it throw this error when converting to ONNX?

Thanks.

The .pth file actually contains only the weights!

You need to load the weights into an existing network.

I don’t see any code where you create a network and then load the weights into it.

For example, you need to do something like this:

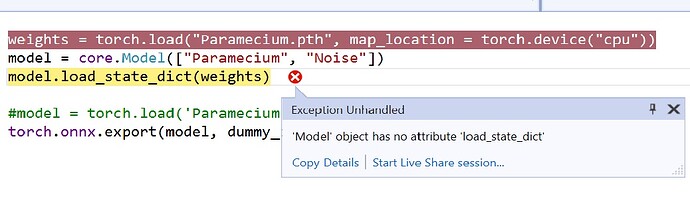

weights = torch.load("Paramecium.pth", map_location=torch.device('cpu'))

model = core.Model(['Paramecium', 'Noise'])

model.load_state_dict(weights)

torch.onnx.export(model, dummy_input, "*.onnx")

What you are missing is the call to model.load_state_dict().

Paramecium.pth contains just the weights, so you need to load them into a model created with core.Model(['Paramecium', 'Noise']).
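The general pattern is to rebuild the same architecture first and then copy the saved weights into it. Below is a minimal sketch with a hypothetical build_net() stand-in; for detecto, the architecture would come from core.Model(['Paramecium', 'Noise']) instead, and the weights would have to go into the underlying PyTorch network.

```python
import io

import torch
import torch.nn as nn


def build_net() -> nn.Module:
    # Hypothetical architecture; it must match the one the weights were saved from.
    return nn.Sequential(nn.Conv2d(3, 8, kernel_size=3), nn.ReLU())


# Save side: only the state_dict is written out.
trained = build_net()
buffer = io.BytesIO()
torch.save(trained.state_dict(), buffer)
buffer.seek(0)

# Load side: create the network first, then load the weights into it.
weights = torch.load(buffer, map_location=torch.device("cpu"))
restored = build_net()
restored.load_state_dict(weights)
restored.eval()

# The restored network is an nn.Module, so it is a valid input for torch.onnx.export.
x = torch.randn(1, 3, 16, 16)
with torch.no_grad():
    same = torch.equal(trained(x), restored(x))
print(same)  # True
```

Creating the network before loading is what makes this work: the state_dict only maps parameter names to tensors, and load_state_dict needs a module whose parameter names match those keys.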

Hm… What is core? Where does core.Model come from?

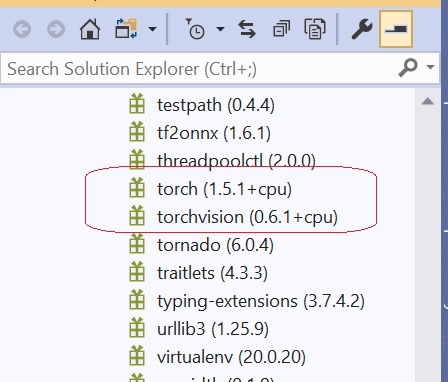

My imports are as follows:

from torch.autograd import Variable

import torch.onnx

import torchvision

import torch

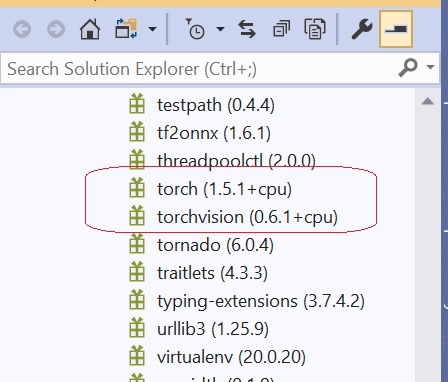

My Visual Studio Python environment is shown in the picture.

Thoughts?

Thanks.

Oops… I found it; it is just part of detecto.

Anyway, the line

model.load_state_dict(weights)

throws the following exception:

Thoughts?

Thanks.

Try

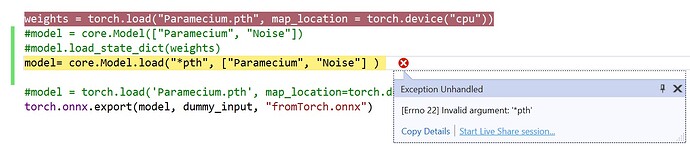

model = core.Model.load("*pth", ["Paramecium", "Noise"]) instead of the two lines involving model.

Hey, use Paramecium.pth instead of *pth.

Tried it already. It brings me back to the initial problem:

It looks like I’m getting more and more involved in voodoo programming.

Let me try to get both tasks (model training and ONNX conversion) done in one place - Colab. So far once the model trained in Colab, I switch to my local environment to Visual Studio finishing the conversion.

Let’s see.

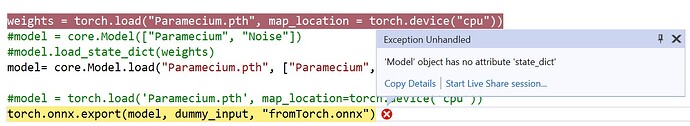

No. The Colab code does not work either. It throws:

AttributeError: 'Model' object has no attribute 'state_dict'

I’m stuck. Help!

I’m not sure exactly what is wrong, but you can check the detecto documentation at this link to find the mistake:

https://detecto.readthedocs.io/en/latest/api/core.html

Hi, after

model = core.Model.load("Paramecium.pth", ["Paramecium", "Noise"])

could you try this line:

torch.onnx.export(model.get_internal_model(), dummy_input, "from_onnx.onnx")
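This likely helps because detecto's core.Model appears to be a plain Python wrapper around a torchvision network rather than an nn.Module itself, which would also explain the 'Model' object has no attribute 'state_dict' error; get_internal_model() returns the wrapped nn.Module that torch.onnx.export can work with. The sketch below mimics that situation with a hypothetical DetectorWrapper class; it is an illustration, not detecto's actual code.

```python
import torch.nn as nn


class DetectorWrapper:
    """Hypothetical stand-in for a wrapper class like detecto's core.Model."""

    def __init__(self, classes):
        self.classes = classes
        # The real network lives in an attribute; the wrapper itself is not an nn.Module.
        self._model = nn.Sequential(nn.Conv2d(3, len(classes), kernel_size=1))

    def get_internal_model(self) -> nn.Module:
        # Hand back the underlying PyTorch module.
        return self._model


wrapper = DetectorWrapper(["Paramecium", "Noise"])

print(isinstance(wrapper, nn.Module))     # False
print(hasattr(wrapper, "state_dict"))     # False: same symptom as in the thread
inner = wrapper.get_internal_model()
print(isinstance(inner, nn.Module))       # True: this is what export needs
print(sorted(inner.state_dict().keys()))  # ['0.bias', '0.weight']
```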

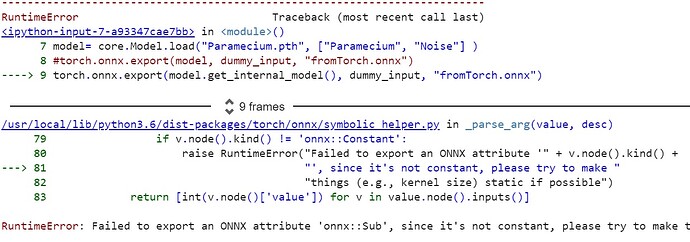

It got further, but then throws this exception:

RuntimeError: Failed to export an ONNX attribute 'onnx::Sub', since it's not constant, please try to make things (e.g., kernel size) static if possible

Stack:

Thoughts?

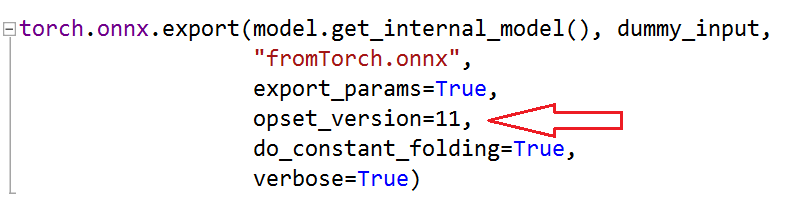

One more piece of information from the Colab debugger:

/usr/local/lib/python3.6/dist-packages/torch/onnx/symbolic_helper.py:243: UserWarning: You are trying to export the model with onnx:Upsample for ONNX opset version 9.

This operator might cause results to not match the expected results by PyTorch.

ONNX’s Upsample/Resize operator did not match Pytorch’s Interpolation until opset 11.

Attributes to determine how to transform the input were added in onnx:Resize in opset 11 to support Pytorch’s behavior (like coordinate_transformation_mode and nearest_mode).

We recommend using opset 11 and above for models using this operator.

"" + str(_export_onnx_opset_version) + ". "

How can I get opset 11 or higher?

Thanks.

It looks like I fixed it:

Thanks.