I need to apply a MATLAB function to a tensor, so I have installed the MATLAB Engine API to use MATLAB from within Python. Currently, I convert the tensor to a MATLAB double, perform the calculation with the MATLAB engine, and then convert the result back to a torch tensor. The problem is that converting the MATLAB double to a torch tensor keeps increasing memory usage, even when I reassign the same variable. Converting the tensor to a MATLAB double does not increase memory; it is specifically the conversion from MATLAB double back to a tensor that causes it.

This could be a misunderstanding on my part of what `torch.tensor` or `torch.as_tensor` does, or something specific to applying them to a MATLAB double, but I have not found anything that resolves the issue. Simply creating a torch tensor, or converting a NumPy array to a torch tensor, shows no memory increase. Here is a minimal working example:

    import numpy as np
    import torch
    import matlab.engine
    import psutil
    import matplotlib.pyplot as plt

    matlab_eng = matlab.engine.start_matlab()

    num_loops = 6
    n = 2000

    mem_no_conversion = np.empty(num_loops)
    mem_with_conversion = np.empty(num_loops)

    # Baseline: tensor -> matlab.double only
    for ind in range(num_loops):
        mem_no_conversion[ind] = psutil.virtual_memory().percent
        A = torch.eye(n, n)
        Amat = matlab.double(A.numpy().tolist())
        del A, Amat

    # Round trip: tensor -> matlab.double -> tensor
    for ind in range(num_loops):
        mem_with_conversion[ind] = psutil.virtual_memory().percent
        A = torch.eye(n, n)
        Amat = matlab.double(A.numpy().tolist())
        Atorch = torch.as_tensor(Amat)
        del A, Amat, Atorch

    plt.figure()
    plt.plot(mem_no_conversion, label='No conversion')
    plt.plot(mem_with_conversion, label='With conversion')
    plt.xlabel('Loop iteration')
    plt.ylabel('System memory usage (%)')
    plt.legend()

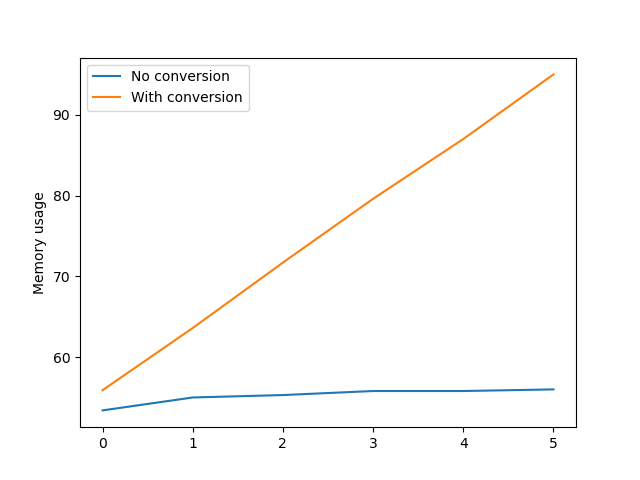

which produces the following figure (I am using 16GB of RAM).

Is there any way to do this conversion without seeing this increase in memory?
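One workaround that may be worth trying, sketched here under assumptions rather than as a confirmed fix: instead of passing the `matlab.double` straight to `torch.as_tensor` (which likely has to walk it element by element as a nested Python sequence), copy its flat storage into NumPy in one step and reshape it. This relies on `matlab.double` keeping its elements in a private, column-major `_data` buffer next to a `size` tuple, as it does in some MATLAB Engine releases; `_data` is undocumented and may change. The helper `matlab_to_numpy` and the `FakeMatlabDouble` stand-in are hypothetical names, used only so the sketch runs without MATLAB.

```python
import array

import numpy as np


def matlab_to_numpy(mat):
    """Copy a matlab.double-like object into a float64 NumPy array.

    Assumption: `mat` exposes `_data` (a flat, column-major buffer such as
    array.array('d')) and `size` (a shape tuple). `_data` is a private
    attribute of matlab.double and is not a documented API.
    """
    # One buffer copy instead of per-element Python iteration.
    flat = np.array(mat._data, dtype=np.float64)
    # MATLAB stores arrays column-major, hence order="F".
    return flat.reshape(mat.size, order="F")


class FakeMatlabDouble:
    """Hypothetical stand-in for matlab.double so the sketch runs without MATLAB."""

    def __init__(self, rows):
        self.size = (len(rows), len(rows[0]))
        # Flatten column by column to mimic MATLAB's column-major storage.
        self._data = array.array(
            "d",
            [rows[r][c] for c in range(self.size[1]) for r in range(self.size[0])],
        )
```

With the real engine, the loop body would become something like `Atorch = torch.from_numpy(matlab_to_numpy(Amat))`, adding `.float()` if you need float32 to match `torch.eye`. On MATLAB R2022a and later, MATLAB arrays in Python are documented to support the buffer protocol, so `np.asarray(Amat)` may achieve the same without touching `_data`; check the Engine documentation for your release.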