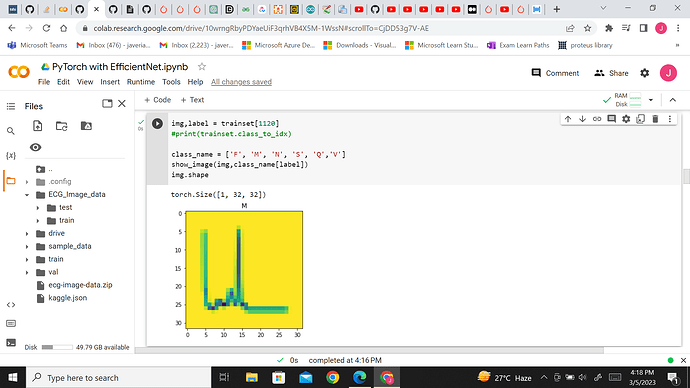

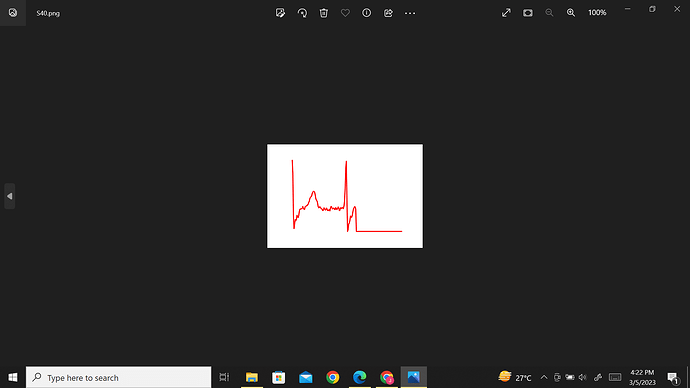

Hello. I have three folders, train, test and validate, each containing image subfolders. I used `T.Grayscale(1)` to convert the images to grayscale, but when I display an image it looks like this, and I don't understand why. Here is the image I'm getting.

Here is my code:

```python
import matplotlib.pyplot as plt
import torch.nn.functional as F
import torch
import numpy as np


def show_image(image, label):
    image = image.permute(1, 2, 0)
    plt.imshow(image)
    plt.title(label)


def show_grid(image, title=None):
    image = image.permute(1, 2, 0)
    plt.figure(figsize=[15, 15])
    plt.imshow(image)
    if title is not None:
        plt.title(title)


def accuracy(y_pred, y_true):
    y_pred = F.softmax(y_pred, dim=1)
    top_p, top_class = y_pred.topk(1, dim=1)
    equals = top_class == y_true.view(*top_class.shape)
    return torch.mean(equals.type(torch.FloatTensor))
```
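A likely cause, offered as a hedged sketch rather than a confirmed diagnosis: after `T.Grayscale(num_output_channels=1)` each tensor has shape (1, H, W), and `plt.imshow` draws single-channel data through a colormap, which defaults to `viridis`, so a grayscale image comes out in purple, green and yellow. The rewritten `show_image` below (not the original) drops the channel axis and passes `cmap="gray"`; the `vmin`/`vmax` values assume `ToTensor()` output in [0, 1].

```python
import matplotlib.pyplot as plt
import torch


def show_image(image, label):
    """Show a (C, H, W) image tensor; single-channel images are drawn in gray."""
    image = image.detach().cpu()
    if image.shape[0] == 1:
        # (1, H, W) -> (H, W). matplotlib maps 2-D data through a colormap
        # (viridis by default), so request the gray one explicitly.
        # vmin/vmax assume ToTensor() values in [0, 1].
        im = plt.imshow(image.squeeze(0).numpy(), cmap="gray", vmin=0.0, vmax=1.0)
    else:
        # (3, H, W) -> (H, W, 3) for RGB tensors
        im = plt.imshow(image.permute(1, 2, 0).numpy())
    plt.title(label)
    return im
```

Passing `cmap` only changes how the values are rendered; the tensor fed to the model is untouched.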

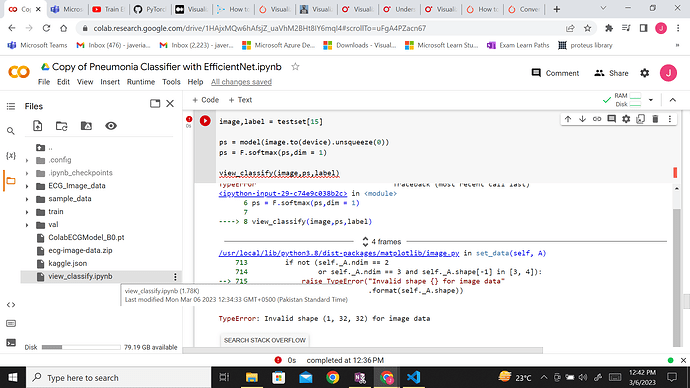

```python
def view_classify(image, ps, label):
    class_name = ['F', 'M', 'N', 'S', 'Q', 'V']
    classes = np.array(class_name)

    ps = ps.cpu().data.numpy().squeeze()
    image = image.permute(1, 2, 0)

    fig, (ax1, ax2) = plt.subplots(figsize=(8, 12), ncols=2)
    ax1.imshow(image)  # was `img`, which is not defined in this function
    ax1.set_title('Ground Truth : {}'.format(class_name[label]))
    ax1.axis('off')

    ax2.barh(classes, ps)
    ax2.set_aspect(0.1)
    ax2.set_yticks(classes)
    ax2.set_yticklabels(classes)
    ax2.set_title('Predicted Class')
    ax2.set_xlim(0, 1.1)

    plt.tight_layout()
    return None
```
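The ground-truth panel in `view_classify` is affected in the same way when it receives a (1, H, W) tensor, because matplotlib draws single-channel data through a colormap (`viridis` by default). Below is a hedged sketch of a hypothetical variant, `view_classify_gray`, that keeps the original layout but squeezes the channel axis and uses `cmap="gray"`. It assumes `ps` already holds class probabilities (for example the softmax of the model's logits), as the original 0 to 1.1 bar plot implies.

```python
import matplotlib.pyplot as plt
import numpy as np
import torch


def view_classify_gray(image, ps, label, class_names=("F", "M", "N", "S", "Q", "V")):
    """Hypothetical variant of view_classify for a (1, H, W) image tensor.

    `ps` is assumed to hold class probabilities, not raw logits.
    """
    probs = ps.detach().cpu().numpy().squeeze()
    fig, (ax1, ax2) = plt.subplots(figsize=(8, 12), ncols=2)

    # Drop the channel axis and ask for the gray colormap explicitly.
    ax1.imshow(image.detach().cpu().squeeze(0).numpy(), cmap="gray", vmin=0.0, vmax=1.0)
    ax1.set_title("Ground Truth : {}".format(class_names[label]))
    ax1.axis("off")

    # Horizontal bar per class, same as the original right-hand panel.
    ax2.barh(np.array(class_names), probs)
    ax2.set_title("Predicted Class")
    ax2.set_xlim(0, 1.1)
    fig.tight_layout()
    return fig
```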

```python
import torchvision.transforms as T

# CFG (not shown here) holds the image size used for all three splits.
train_transform = T.Compose([
    T.Resize(size=(CFG.img_size, CFG.img_size)),
    T.ToTensor(),
    T.Grayscale(num_output_channels=1),  # output tensor shape: (1, H, W)
])

validate_transform = T.Compose([
    T.Resize(size=(CFG.img_size, CFG.img_size)),
    T.ToTensor(),
    T.Grayscale(num_output_channels=1),
])

test_transform = T.Compose([
    T.Resize(size=(CFG.img_size, CFG.img_size)),  # resize to CFG.img_size x CFG.img_size
    T.ToTensor(),
    T.Grayscale(num_output_channels=1),
])
```