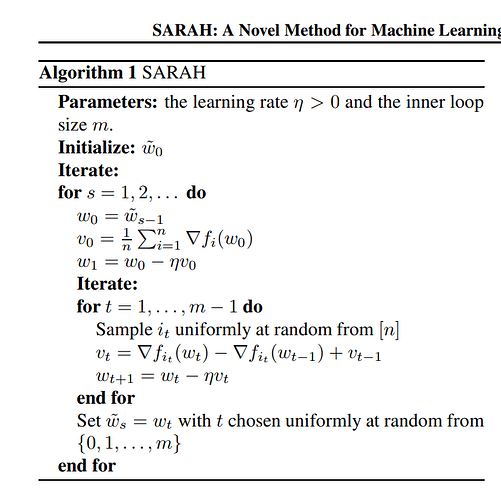

Hey, I was trying to implement sarah optimization algorithm.

And here is the code.

1) Model

Linear model f = wx + b , sigmoid at the end

class Model(nn.Module):

def init(self, n_input_features):

super(Model, self).init()

self.linear = nn.Linear(n_input_features, 1)

def forward(self, x):

y_pred = torch.sigmoid(self.linear(x))

return y_pred

model = Model(n_features)

def binary_cross_entropy_loss(outputs, targets):

epsilon = 1e-4 # small epsilon value to ensure numerical stability

loss = - (targets * torch.log(outputs + epsilon) + (1 - targets) * torch.log(1 - outputs + epsilon))

return torch.mean(loss)

2) Loss and optimizer

num_epochs = 100

learning_rate = 0.01

criterion = binary_cross_entropy_loss

optimizer = torch.optim.Adagrad(model.parameters(), lr=learning_rate)

train_losses = np.zeros(num_epochs)

3) Training loop

for epoch in range(num_epochs):

# Forward pass and loss

y_pred = model(X_train)

loss = criterion(y_pred, y_train)

old_model = copy.deepcopy(model)

optimizer.zero_grad() # Clear previous gradients

loss.backward(retain_graph=True) # Compute gradients using backward pass

# Access gradients and store them in a variable

gradients = [param.grad for param in model.parameters()]

V0 = [torch.zeros_like(p) for p in model.parameters()]

for i, w in enumerate(model.parameters()):

V0[i] += gradients[i]

w.data = w.data - V0[i] * learning_rate

m = 2*num_epochs

inside_loop = np.zeros(m)

n = len(X_train)

for t in range(0, m):

# Sample a random index uniformly at random from [n]

i_t = random.randint(0, n - 1)

# Access the sample at index i_t

sample = X_train[i_t]

target = y_train[i_t]

### One sample and it's gradient with updated model

sample_pred = model(sample)

inner_loss = criterion(sample_pred, target)

# Backward pass

optimizer.zero_grad() # Clear previous gradients

inner_loss.backward(retain_graph=True) # Compute gradients using backward pass

# Access gradients and store them in a variable

inner_gradients_wrt_updated_model = [param.grad for param in model.parameters()]

### One sample and it's gradient with old model

sample_pred_old = old_model(sample)

inner_loss_old = criterion(sample_pred, target)

# Backward pass

optimizer.zero_grad() # Clear previous gradients

inner_loss_old.backward(retain_graph=True) # Compute gradients using backward pass

# Access gradients and store them in a variable

inner_gradients_wrt_old_model = [param.grad for param in old_model.parameters()]

for c,d in enumerate(model.parameters()):

V0[c] = inner_gradients_wrt_updated_model[c] - inner_gradients_wrt_old_model[c] + V0[c]

d.data = d.data - learning_rate * V0[c]

inside_loop[t] = loss.item()

# zero grad before new step

optimizer.zero_grad()

train_losses[epoch] = loss.item()

if (epoch+1) % 10 == 0:

print(f'epoch: {epoch+1}, loss = {loss.item():.4f}')

In this code inner_gradients_wrt_old_model always is None. And I failed to solve it. If you can help me regrading this I will be grateful to you.

I really appreciate any help you can provide.

And let me know if the code match with algorithm implementation or not.