Hello,

I am building an edge detector that uses the Conv2d layers of VGG16 for feature extraction. How do I correctly load only part of the model architecture, for example only the convolutional block, without the fully connected layers at the end?

Some background:

I am building an edge detection model in which the convolutional layers of VGG16 extract features. These features then pass through a decoder built around two ConvTranspose2d layers, and the result is finally upsampled to the dimensions of the target edge mask.

I am currently using this architecture:

```python
import torch
import pytorch_lightning as pl
from torchvision import models

vgg16 = models.vgg16(pretrained=False)

# Keep only the convolutional block (vgg16.features) and drop its last max-pooling layer
features = list(vgg16.features.children())[:-1]
vgg16_no_maxpool = torch.nn.Sequential(*features)

decoder = torch.nn.Sequential(
    torch.nn.ConvTranspose2d(512, 256, kernel_size=3, stride=2, padding=1, output_padding=1),
    torch.nn.ReLU(),
    torch.nn.ConvTranspose2d(256, 128, kernel_size=3, stride=2, padding=1, output_padding=1),
    torch.nn.ReLU(),
    torch.nn.Conv2d(128, 1, kernel_size=1),  # 1x1 conv: 128 channels -> 1 edge map
    torch.nn.Sigmoid(),
)

# Upsample to match the input size (224x224)
upsampler = torch.nn.Upsample(size=(224, 224), mode='bilinear', align_corners=True)


class VGG16EdgeDetector(pl.LightningModule):
    def __init__(self, learning_rate=0.001):
        super().__init__()
        self.learning_rate = learning_rate

        # Encoder: VGG16 convolutional layers
        self.vgg16_no_maxpool = vgg16_no_maxpool
        # Decoder
        self.decoder = decoder
        # Upsampling layer
        self.upsampler = upsampler
        # Loss function (class_balanced_loss is defined elsewhere, not shown)
        self.loss_fn = class_balanced_loss

        self.save_hyperparameters()
```
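To see where the spatial sizes come from, here is a small shape trace of the decoder and upsampler above. It assumes a 224x224 input, so the truncated encoder yields a 512x14x14 feature map. A random tensor stands in for the real features.

```python
import torch
import torch.nn as nn

# Same decoder and upsampler as in the post
decoder = nn.Sequential(
    nn.ConvTranspose2d(512, 256, kernel_size=3, stride=2, padding=1, output_padding=1),
    nn.ReLU(),
    nn.ConvTranspose2d(256, 128, kernel_size=3, stride=2, padding=1, output_padding=1),
    nn.ReLU(),
    nn.Conv2d(128, 1, kernel_size=1),
    nn.Sigmoid(),
)
upsampler = nn.Upsample(size=(224, 224), mode="bilinear", align_corners=True)

# Assumption: stand-in for the 512x14x14 encoder output of a 224x224 image
x = torch.randn(1, 512, 14, 14)
shapes = []
with torch.no_grad():
    for layer in decoder:
        x = layer(x)
        shapes.append(tuple(x.shape))
    out = upsampler(x)

for layer, shape in zip(decoder, shapes):
    print(type(layer).__name__, shape)
print("Upsample", tuple(out.shape))  # Upsample (1, 1, 224, 224)
```

Each ConvTranspose2d doubles the resolution (14 → 28 → 56). The remaining 4x step from 56 to 224 is therefore done by fixed bilinear interpolation rather than by learned layers.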

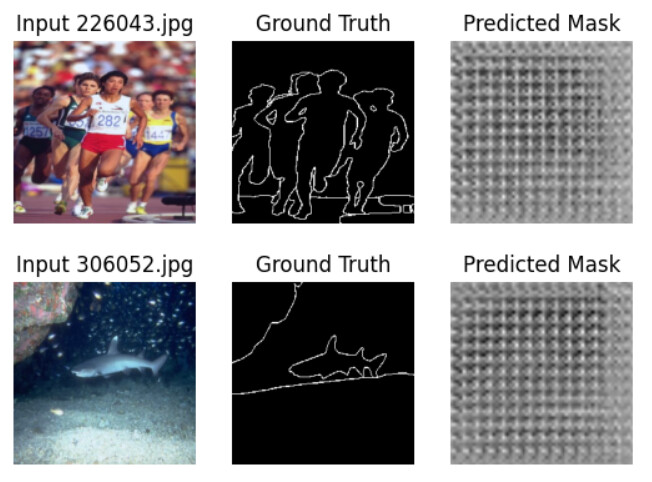

However, upon using this, I am getting a checkered-esque output prediction mask:

Here I am loading the image and ground truth edge mask from test dataloader (using Lightning Pytorch), so I believe the dataloading has been done correctly.
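One commonly cited cause of checkerboard patterns is uneven overlap in transposed convolutions. This is a hypothesis here, not confirmed for this model. With `kernel_size=3` and `stride=2`, the kernel size is not divisible by the stride, so some output pixels receive more kernel contributions than others. The sketch below measures this for the decoder's ConvTranspose2d hyperparameters. It uses a single channel, all-ones weights, no bias, and an all-ones 4x4 input, so each output value counts the kernel taps that land on that pixel.

```python
import torch
import torch.nn as nn

# Same kernel_size/stride/padding/output_padding as the decoder's ConvTranspose2d
# layers, but 1 channel, all-ones weights and no bias: each output value is the
# number of (input pixel, kernel tap) pairs that write to that location.
deconv = nn.ConvTranspose2d(1, 1, kernel_size=3, stride=2, padding=1,
                            output_padding=1, bias=False)
with torch.no_grad():
    deconv.weight.fill_(1.0)
    counts = deconv(torch.ones(1, 1, 4, 4))[0, 0]  # 8x8 map of overlap counts
print(counts)
```

Along each axis the counts alternate 1, 2, 1, 2, ..., with the last position differing because of the boundary. In 2D the map is therefore a regular grid of 1s, 2s and 4s. Learned weights can in principle compensate for this, but often do not fully. A frequently used alternative, offered only as a hedged suggestion, is to replace each ConvTranspose2d with `nn.Upsample(scale_factor=2)` followed by `nn.Conv2d(..., kernel_size=3, padding=1)`.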

Thanks.