I am trying to do multilabel classification with an LSTM architecture in PyTorch, but unfortunately the model doesn't train.

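import random

import numpy as np
import pandas as pd
import torch
from torch import nn
from torch.utils.data import Dataset, DataLoader
from sklearn.model_selection import train_test_split
from sklearn.metrics import precision_score, recall_score
from tqdm import tqdm

BATCH_SIZE = 1
INPUT_LENGTH = 5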

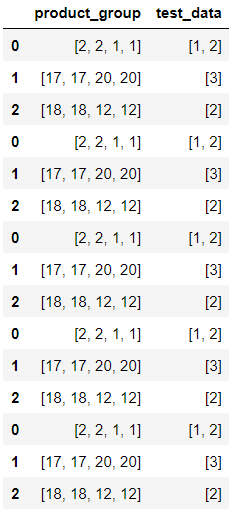

# data generation
df = pd.DataFrame({
    "product_group": [
        INPUT_LENGTH // 2 * [random.randint(1, 20)] + INPUT_LENGTH // 2 * [random.randint(1, 20)]
        for _ in range(3)
    ],
    "test_data": [[1, 2], [3], [2]],
})
target_vector = np.array([1, 2, 3])
output_dim = 3

# repeat the rows to get more samples
df = pd.concat([df, df, df, df, df])
train, test = train_test_split(df, test_size=0.2, random_state=0)

class CustomDataset(Dataset):
    def __init__(self, ddf):
        self.ddf = ddf

    def __len__(self):
        return self.ddf.shape[0]

    def __getitem__(self, idx):
        data = self.ddf.iloc[idx]
        # look up the index of each target value in target_vector
        list_target_elements = []
        for val in data.test_data:
            list_target_elements.append(target_vector.tolist().index(val))
        # start from an all-zero tensor and set a 1 for every label that is present
        target = torch.zeros(len(target_vector))
        for index in list_target_elements:
            target[index] = 1
        return torch.tensor(data.product_group), target
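
To make the target encoding in `__getitem__` concrete, here is a minimal sketch of the same multi-hot step in plain Python. The helper name `multi_hot` is hypothetical and not part of my code; it assumes every label appears in the class list.

```python
def multi_hot(labels, classes):
    """Return a multi-hot list: 1.0 at the position of each label, 0.0 elsewhere."""
    target = [0.0] * len(classes)
    for label in labels:
        # list.index raises ValueError if the label is not a known class
        target[classes.index(label)] = 1.0
    return target


classes = [1, 2, 3]  # same values as target_vector
for labels in ([1, 2], [3], [2]):  # the three distinct rows of test_data
    print(labels, "->", multi_hot(labels, classes))
```

Each sample can therefore have more than one position set to 1, which is why I treat this as a multilabel problem rather than a multiclass one.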

dataset_train = CustomDataset(train)
dataset_test = CustomDataset(test)
train_dataloader = DataLoader(dataset_train, batch_size=BATCH_SIZE, shuffle=True, drop_last=False)
test_dataloader = DataLoader(dataset_test, batch_size=BATCH_SIZE, shuffle=True, drop_last=False)

class LSTMModel(nn.Module):
    def __init__(self, input_dim, hidden_dim, layer_dim, output_dim, dropout_prob):
        super(LSTMModel, self).__init__()
        # 21 embedding rows cover the ids 0..20; random.randint(1, 20) yields 1..20
        self.embeding = nn.Embedding(21, 50)
        self.hidden_dim = hidden_dim
        self.layer_dim = layer_dim
        self.lstm = nn.LSTM(
            input_dim, hidden_dim, layer_dim, batch_first=True, dropout=dropout_prob
        )
        self.fc1 = nn.Linear(hidden_dim, output_dim)
        self.sigmoid = torch.nn.Sigmoid()

    def forward(self, x):
        # initial hidden and cell states, created on the same device as the input
        h0 = torch.zeros(self.layer_dim, x.size(0), self.hidden_dim, device=x.device)
        c0 = torch.zeros(self.layer_dim, x.size(0), self.hidden_dim, device=x.device)
        x = self.embeding(x)
        out, (hn, cn) = self.lstm(x, (h0, c0))
        # keep only the output of the last time step
        out = out[:, -1, :]
        out = self.fc1(out)
        out = self.sigmoid(out)
        return out

model = LSTMModel(input_dim=50, hidden_dim=10, layer_dim=20, output_dim=output_dim, dropout_prob=0.2)
x, y = next(iter(train_dataloader))

This gives x: tensor([[17, 17, 20, 20]]) and y: tensor([[0., 0., 1.]])

model(x) returns tensor([[0.4539, 0.4767, 0.4881]], grad_fn=<SigmoidBackward0>)
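
As a sanity check on that output, here is a rough sketch of the loss value it corresponds to, computed by hand in plain Python. It assumes the default mean reduction of binary cross-entropy, and the helper name `bce_mean` is hypothetical.

```python
import math


def bce_mean(pred, target):
    """Mean binary cross-entropy over all labels (inputs are probabilities, not logits)."""
    total = 0.0
    for p, t in zip(pred, target):
        total += -(t * math.log(p) + (1.0 - t) * math.log(1.0 - p))
    return total / len(pred)


pred = [0.4539, 0.4767, 0.4881]  # the model(x) output quoted above
target = [0.0, 0.0, 1.0]         # the y quoted above
print(f"BCE for this sample: {bce_mean(pred, target):.4f}")
print(f"BCE when every output is exactly 0.5: {math.log(2):.4f}")
```

The untrained outputs sit close to 0.5 for every label, so the loss starts close to log 2. That is the value I compare later epochs against.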

def train_model(model, loss, optimizer, scheduler, num_epochs):
    precision_stats = {"train": [], "val": []}
    recall_stats = {"train": [], "val": []}
    loss_stats = {"train": [], "val": []}

    for e in tqdm(range(1, num_epochs + 1)):
        # TRAINING
        train_epoch_loss = 0
        train_epoch_recall = 0
        train_epoch_precision = 0
        model.train()
        for x, y in tqdm(train_dataloader):
            x = x.to(device)
            y = y.to(device)
            optimizer.zero_grad()
            y_train_pred = model(x)
            train_loss = loss(y_train_pred, y)
            train_loss.backward()
            optimizer.step()
            scheduler.step()

            # threshold the probabilities and move everything to NumPy for scikit-learn
            pred = (y_train_pred > 0.5).float().cpu().numpy()
            y_true = y.cpu().numpy()
            train_recall = recall_score(y_true=y_true, y_pred=pred, average='micro', zero_division=0)
            train_precision = precision_score(y_true=y_true, y_pred=pred, average='micro', zero_division=0)

            train_epoch_loss += train_loss.item()
            train_epoch_recall += float(train_recall)
            train_epoch_precision += float(train_precision)

        # per-epoch averages over all training batches
        loss_stats['train'].append(train_epoch_loss / len(train_dataloader))
        recall_stats['train'].append(train_epoch_recall / len(train_dataloader))
        precision_stats['train'].append(train_epoch_precision / len(train_dataloader))
        print(f'Epoch {e:03}: | Loss: {train_epoch_loss/len(train_dataloader):.5f} | recall: {train_epoch_recall/len(train_dataloader):.3f} | precision: {train_epoch_precision/len(train_dataloader):.3f}')
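
For reference, this is what I understand micro-averaged precision and recall to compute for the multi-hot vectors used in the loop above. It is a plain-Python sketch, not scikit-learn's implementation; `micro_precision_recall` is a hypothetical helper that returns 0.0 where a denominator is zero, mirroring `zero_division=0`.

```python
def micro_precision_recall(y_true, y_pred):
    """Pool TP/FP/FN over every label of every sample, then take the ratios."""
    tp = fp = fn = 0
    for true_row, pred_row in zip(y_true, y_pred):
        for t, p in zip(true_row, pred_row):
            if p == 1 and t == 1:
                tp += 1
            elif p == 1 and t == 0:
                fp += 1
            elif p == 0 and t == 1:
                fn += 1
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall


# One sample whose three outputs are all below the 0.5 threshold
print(micro_precision_recall([[0, 0, 1]], [[0, 0, 0]]))
# One sample where one of two true labels is predicted
print(micro_precision_recall([[1, 1, 0]], [[1, 0, 0]]))
```

With a batch size of 1, each call in the loop sees a single row, so a prediction that stays below 0.5 for every label contributes 0 to both running sums.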

Finally, I launch the training loop:

lr = 0.01
epoch = 200
device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")
model = model.to(device)
loss = torch.nn.BCELoss()
optimizer = torch.optim.Adam(model.parameters(), lr=lr)
scheduler = torch.optim.lr_scheduler.StepLR(optimizer, step_size=10, gamma=0.1)
train_model(model, loss, optimizer, scheduler, epoch)
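
To see what this scheduler setting does to the learning rate, here is a small sketch of the decay rule as I understand it from the PyTorch documentation: `StepLR` multiplies the learning rate by `gamma` once every `step_size` calls to `scheduler.step()`. The helper `step_lr_value` is hypothetical, and the figure of 12 batches per epoch is an assumption based on 15 rows, `test_size=0.2` and `BATCH_SIZE=1`.

```python
def step_lr_value(base_lr, num_steps, step_size=10, gamma=0.1):
    """Learning rate after num_steps calls to scheduler.step() under a StepLR-style rule."""
    return base_lr * gamma ** (num_steps // step_size)


base_lr = 0.01
batches_per_epoch = 12  # assumption: 15 rows, 20% held out, batch size 1

for epochs in (1, 5, 200):
    per_batch = step_lr_value(base_lr, epochs * batches_per_epoch)
    per_epoch = step_lr_value(base_lr, epochs)
    print(f"after {epochs:3d} epochs: stepping per batch -> {per_batch:.3e}, "
          f"stepping per epoch -> {per_epoch:.3e}")
```

Which column applies depends on where `scheduler.step()` sits in the loop; as written, `train_model` appears to call it inside the batch loop.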

This code runs without errors, but the model doesn't train.