Hello,

I'm trying to include in my loss function the cosine similarity between the embeddings of the predicted words and the target words of a sentence, so that the distance between them shrinks and my model learns to predict similar words.

But a loss function usually returns a single value, whereas cosine similarity gives me one value per word in the sentence. I tried multiplying the cosine similarity by -1, so that minimizing the loss would maximize the similarity, and then took the mean over the sentence. But the predicted words are still nonsense, so the model still doesn't learn to produce close embeddings.

Hope I was clear. I would appreciate any comments or ideas.
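The reduction described above (negate the per-word cosine similarities, then average them to one number) can be sketched as follows. The tensors are random stand-ins and their names and sizes are hypothetical: `pred_emb` and `target_emb` are assumed to be `[T, n]` matrices with one embedding per word.

```python
import torch
import torch.nn.functional as F

T, n = 7, 500  # hypothetical sentence length and embedding size
torch.manual_seed(0)
pred_emb = torch.randn(T, n, requires_grad=True)  # stand-in for predicted-word embeddings
target_emb = torch.randn(T, n)                    # stand-in for target-word embeddings

# One cosine similarity per word pair: shape [T]
per_word_sim = F.cosine_similarity(pred_emb, target_emb, dim=1)

# Negate so that minimizing the loss maximizes similarity, then average to a scalar
loss = -per_word_sim.mean()
loss.backward()  # backward() works because loss is a 0-dim tensor

print(per_word_sim.shape, loss.shape)
```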

Ingrid.

Hi,

Can you provide an example?

Sure!

This is part of the code in my train function:

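```python
import torch

embedding_prime, embedding_target = [], []
for i in range(sentence_prime.size()[0]):
    # Look up the embedding of each predicted word and of the matching target word
    embedding_prime.append(weights_matrix2[int(sentence_prime[i])])
    embedding_target.append(weights_matrix2[int(word_targets[i])])

# Both stacked tensors are [T, n], so loss2 holds one similarity per word (shape [T])
loss2 = loss(torch.stack(embedding_prime), torch.stack(embedding_target))

total_loss = loss + loss2  # `loss` must be a loss value here, not the CosineSimilarity module
total_loss.backward()
optimizer.step()
```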

taking into account that `loss = nn.CosineSimilarity()`.

Hi, please try this and let me know if it works:

Instead of multiplying the values by -1, compute 1 - cosine similarity (which is smallest when the similarity is largest), and then take the mean.
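A minimal sketch of this suggestion, with random stand-in tensors (`a`, `b` and their sizes are hypothetical). It also compares the gradients with the negated-similarity version from the first post:

```python
import torch
import torch.nn.functional as F

torch.manual_seed(0)
a = torch.randn(5, 8, requires_grad=True)  # hypothetical predicted embeddings [T, n]
b = torch.randn(5, 8)                      # hypothetical target embeddings [T, n]

# Suggested loss: 1 - cosine similarity, averaged over the words
loss_one_minus = (1 - F.cosine_similarity(a, b, dim=1)).mean()
loss_one_minus.backward()
grad_one_minus = a.grad.clone()

# Negated similarity, averaged, for comparison
a.grad = None
loss_neg = (-F.cosine_similarity(a, b, dim=1)).mean()
loss_neg.backward()
grad_neg = a.grad.clone()

# The two losses differ only by the constant 1, so their gradients match
print(torch.allclose(grad_one_minus, grad_neg))
print(torch.isclose(loss_one_minus, loss_neg + 1))
```

So `1 - cos` keeps the loss non-negative (it lies in [0, 2]), which is convenient to read, but on its own it does not change what the optimizer does compared with `-cos`.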

Hi!

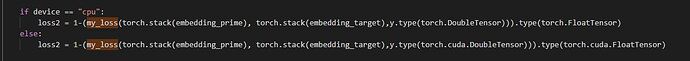

So I tried this:

```python
loss2 = 1 - my_loss(torch.stack(embedding_prime), torch.stack(embedding_target))
```

where `my_loss` is still `nn.CosineSimilarity()`.

But the result is still very inaccurate. As an earlier test, we used `NLLLoss()` between the input sentence and the generated sentence and got back the same sentences with 95% accuracy. What we actually need, though, is a sentence whose words are as close as possible to the input words.

Do you have any idea what I may be missing ?

Thanks.

Hi, it looks as though you should be using nn.CosineEmbeddingLoss() not nn.CosineSimilarity(). Have you tried this?

Edit: Actually I now understand that you’re trying to compute the cosine similarity of a sequence of word embeddings with another sequence of word embeddings. I believe the above suggestion of taking the mean could be useful.

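```python
# my_loss = nn.CosineSimilarity(dim=0): the mean-pooled inputs are 1-D,
# so the default dim=1 would be out of range
loss2 = 1 - my_loss(torch.mean(torch.stack(embedding_prime), 0),
                    torch.mean(torch.stack(embedding_target), 0))
```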

To break this down a bit: you've got n-dimensional word embeddings, and the sentence has length T. Once you append the embeddings to your list and call `torch.stack()`, you have a [T, n] matrix. Calling `torch.mean(matrix, 0)` averages across the T timesteps, giving a single embedding vector of shape [n]. Here you could also use `nn.CosineEmbeddingLoss()`, I believe.
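A shape-by-shape sketch of the mean-pooling step, with random tensors standing in for the real embeddings (names and sizes are hypothetical). Because the pooled vectors are 1-D, `nn.CosineSimilarity` is constructed with `dim=0` here.

```python
import torch
import torch.nn as nn

T, n = 6, 500  # hypothetical sentence length and embedding size
torch.manual_seed(0)
embedding_prime = [torch.randn(n) for _ in range(T)]   # stand-ins for per-word embeddings
embedding_target = [torch.randn(n) for _ in range(T)]

prime = torch.stack(embedding_prime)    # [T, n]
target = torch.stack(embedding_target)  # [T, n]

# Average across the T timesteps -> one sentence vector of shape [n]
prime_mean = torch.mean(prime, 0)
target_mean = torch.mean(target, 0)

# The pooled vectors are 1-D, so compare them along dim=0
cos = nn.CosineSimilarity(dim=0)
loss2 = 1 - cos(prime_mean, target_mean)  # 0-dim tensor, usable as a loss

print(prime.shape, prime_mean.shape, loss2.shape)
```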

Thanks !

Now I'm training the model with your recommendations, so my loss is `CosineEmbeddingLoss()`. To use it, I had to change a couple of things.

First, I had to add a parameter `y`. I'm not sure what it's for, but apparently it's mandatory, so I passed

```python
y = torch.empty(len(sentence)).bernoulli_().mul_(2).sub_(1)
```

which gives me a tensor of random 1s and -1s.

Second, I had to remove the mean. As far as I can tell, the function expects two input tensors of the same size plus the extra parameter `y` with one dimension fewer. My `y` is already 1-D (its size is the sentence length T), and the two inputs x1 and x2 are, as you said, of size (T, 500), 500 being the embedding size. If I reduced the inputs with a mean, they would become 1-D tensors of size 500, and `y` would then have to be one dimension smaller still.
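Both shapes can be made to work. A minimal sketch, assuming random `[T, n]` stand-ins for the two embedding matrices and `y` set to all ones (all names and sizes are hypothetical): per-word inputs take a `y` of length `T`, while the mean-pooled sentence vectors can be given a batch dimension of 1 with `unsqueeze(0)`, so that `y` has a single entry.

```python
import torch
import torch.nn as nn

T, n = 6, 500  # hypothetical sentence length and embedding size
torch.manual_seed(0)
x1 = torch.randn(T, n)  # stand-in for predicted-word embeddings
x2 = torch.randn(T, n)  # stand-in for target-word embeddings
criterion = nn.CosineEmbeddingLoss()

# Per-word comparison: inputs are [T, n], y has one entry per row -> [T]
y_words = torch.ones(T)
loss_words = criterion(x1, x2, y_words)

# Sentence-level comparison: add a batch dimension of size 1 to the pooled
# vectors, so the inputs are [1, n] and y is [1]
x1_mean = x1.mean(0).unsqueeze(0)
x2_mean = x2.mean(0).unsqueeze(0)
loss_sentence = criterion(x1_mean, x2_mean, torch.ones(1))

print(loss_words.shape, loss_sentence.shape)
```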

Hope it was clear; I will let you know the results when I have them.

I already have the results, and they don't change at all. It's as if this loss doesn't affect the predicted words, or as if training accepts that the words are different without trying to bring them closer. I don't see any closeness between the input word and the predicted one; they are as far apart as they were before training.

Hi @Ingrid_Bernal

Maybe this reply is a bit late, but I think there is a problem with using a randomly generated `y` (from `torch.bernoulli()`). According to the `nn.CosineEmbeddingLoss` documentation, the `y` vector determines which version of the loss function is applied to each pair.
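For reference, the per-pair loss given in the `nn.CosineEmbeddingLoss` documentation is:

$$
\ell(x_1, x_2, y) =
\begin{cases}
1 - \cos(x_1, x_2), & y = 1 \\
\max\bigl(0,\ \cos(x_1, x_2) - \text{margin}\bigr), & y = -1
\end{cases}
$$

With the default `reduction='mean'` these per-pair values are averaged. A pair labelled `y = 1` is pulled together, while a pair labelled `y = -1` is pushed apart until its similarity drops below `margin` (0 by default).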

Based on the previous comments, as you are trying to minimize the difference measured as the angle between both input1 and input2 latent representations, you should fix y as:

```python
# One +1 target per row: every (input1, input2) pair is pulled together
y = torch.ones(x1.shape[0], device=x1.device)
```

Here `y` is the `target` tensor expected by `nn.CosineEmbeddingLoss`, as in `torch.nn.functional.cosine_embedding_loss(input1, input2, target, margin=0.0, reduction='mean')`.

With a random `y` (target) vector, you had conflicting (opposing) loss criteria.
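To see the conflict concretely, here is a small check with random stand-in tensors (sizes are hypothetical). With `y` all ones, `nn.CosineEmbeddingLoss` reduces to the mean of `1 - cos`, i.e. the loss suggested earlier in the thread; with random ±1 targets, the rows labelled -1 contribute `max(0, cos)` instead, so minimizing the loss pushes those word pairs apart.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

T, n = 8, 16  # hypothetical sentence length and embedding size
torch.manual_seed(0)
x1 = torch.randn(T, n)
x2 = torch.randn(T, n)
criterion = nn.CosineEmbeddingLoss()  # margin=0.0, reduction='mean'

cos = F.cosine_similarity(x1, x2, dim=1)  # [T]

# y = 1 everywhere: every pair is pulled together, loss == mean(1 - cos)
y_ones = torch.ones(T, device=x1.device)
loss_ones = criterion(x1, x2, y_ones)
print(torch.isclose(loss_ones, (1 - cos).mean(), atol=1e-6))

# Random +/-1 targets (as with bernoulli_): the -1 rows are pushed apart instead,
# which works against making predicted and target words closer
y_rand = torch.empty(T).bernoulli_().mul_(2).sub_(1)
manual = torch.where(y_rand == 1, 1 - cos, torch.clamp(cos, min=0)).mean()
print(torch.isclose(criterion(x1, x2, y_rand), manual, atol=1e-6))
```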

I hope this may help someone else in the future.

Cheers,

Jose
