I am trying to quantize my Alexnet model imported from torchvision.I am not able to get an inference from the quantized model.

I used the following code to quantize the model:

model_int8 = torch.quantization.QuantWrapper(model_fp32)

model_int8.qconfig = torch.quantization.default_qconfig

torch.quantization.prepare(model_int8, inplace=True)

with torch.no_grad():

model_int8(input_data)

torch.quantization.convert(model_int8, inplace=True)

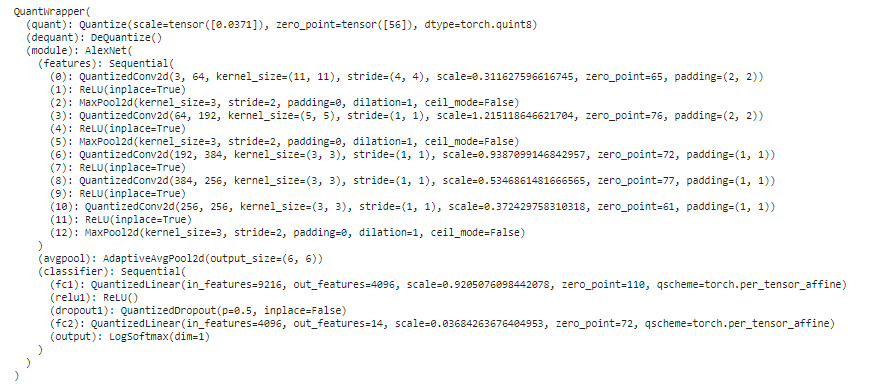

This is the model after quantization and the weights are of type qint8.I am running the whole process in the cpu.

When I try to get the inference i get the following error:

Could not run ‘aten::_log_softmax.out’ with arguments from the ‘QuantizedCPU’ backend. This could be because the operator doesn’t exist for this backend, or was omitted during the selective/custom build process (if using custom build). If you are a Facebook employee using PyTorch on mobile, please visit Internal Login for possible resolutions. ‘aten::_log_softmax.out’ is only available for these backends: [CPU, CUDA, Meta, BackendSelect, Python, FuncTorchDynamicLayerBackMode, Functionalize, Named, Conjugate, Negative, ZeroTensor, ADInplaceOrView, AutogradOther, AutogradCPU, AutogradCUDA, AutogradHIP, AutogradXLA, AutogradMPS, AutogradIPU, AutogradXPU, AutogradHPU, AutogradVE, AutogradLazy, AutogradMeta, AutogradMTIA, AutogradPrivateUse1, AutogradPrivateUse2, AutogradPrivateUse3, AutogradNestedTensor, Tracer, AutocastCPU, AutocastCUDA, FuncTorchBatched, FuncTorchVmapMode, Batched, VmapMode, FuncTorchGradWrapper, PythonTLSSnapshot, FuncTorchDynamicLayerFrontMode, PythonDispatcher].