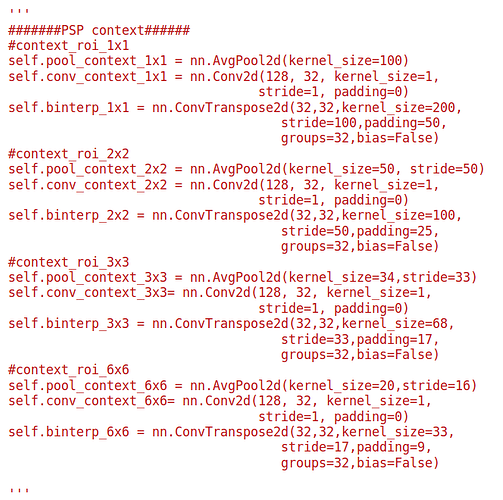

I was trying to implement the PSP module proposed in Pyramid Scene Parsing Network. I used AvgPool2d, Conv2d, ReLU and ConvTranspose2d.

The attached image shows my initialization of the module.

When I started training, I found that CPU memory usage increases by about 1 MB every 10 iterations, and the growth is caused by the code block shown above. I cannot figure out what is causing the leak, but I'm sure it has nothing to do with Conv2d and ReLU, so the likely culprits are AvgPool2d and ConvTranspose2d.
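To narrow this down further, each suspect layer could be run in isolation while watching the process memory. Below is a minimal sketch of such a check using only the Python standard library. The helper names `peak_rss_mib` and `profile_growth` are made up for illustration, and `dummy_step` is a hypothetical stand-in for one forward/backward pass through a single layer. Note that `ru_maxrss` reports the peak resident set size, so it only shows growth, never a decrease.

```python
import gc
import resource
import sys


def peak_rss_mib():
    """Return this process's peak resident set size in MiB.

    ru_maxrss is reported in KiB on Linux and in bytes on macOS.
    """
    peak = resource.getrusage(resource.RUSAGE_SELF).ru_maxrss
    divisor = 1024 * 1024 if sys.platform == "darwin" else 1024
    return peak / divisor


def profile_growth(step, iterations=100, report_every=10):
    """Call step() repeatedly and sample peak RSS every `report_every` calls.

    Returns a list of (iteration, peak_rss_in_mib) tuples.
    """
    samples = []
    for i in range(1, iterations + 1):
        step()
        if i % report_every == 0:
            # Collect first so garbage that is merely pending is not
            # mistaken for a leak in later samples.
            gc.collect()
            samples.append((i, peak_rss_mib()))
    return samples


if __name__ == "__main__":
    # Hypothetical stand-in for one pass through a single suspect layer,
    # e.g. only the AvgPool2d branch or only the ConvTranspose2d branch.
    def dummy_step():
        sum(range(1000))

    for iteration, mib in profile_growth(dummy_step, iterations=50):
        print(f"iter {iteration:4d}: peak RSS {mib:.1f} MiB")
```

Replacing `dummy_step` with a function that exercises only one layer at a time should show which of them makes the number climb steadily.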

Some info:

PyTorch: 0.3.0.post4

Ubuntu: 14.02

CUDA: 8.0

CPU: 24GB RAM

GPU: Nvidia Titan Xp