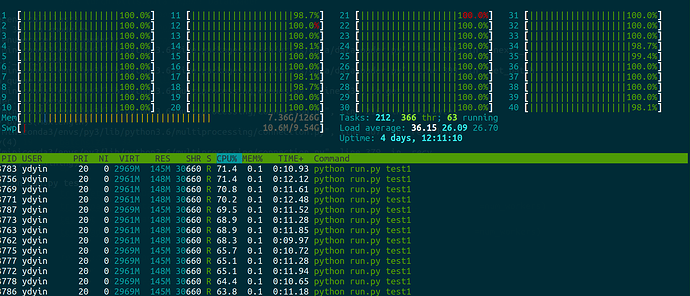

Here is my code; the CPU consumption is shown in the image above.

    import glob
    import os

    import cv2
    import numpy as np
    import torch
    from torch.utils.data import Dataset, DataLoader


    class ClassDataset(Dataset):
        """Dataset for classification"""

        def __init__(self, datapath, istrain, transform=None):
            self.istrain = istrain
            # Collect every PNG file in the data directory
            self.datalist = glob.glob(os.path.join(datapath, '*.png'))
            self.datalen = len(self.datalist)

        def __len__(self):
            # Fixed epoch length, independent of the number of files
            if self.istrain:
                return 15000
            else:
                return 4000

        def __getitem__(self, index):
            # Wrap around because __len__ can exceed the number of files
            idx = index % self.datalen
            img1 = cv2.imread(self.datalist[idx])
            # This is the line that triggers the problem
            img1 = img1.astype(np.float32)
            # img1 = torch.from_numpy(img1)
            # img1 = img1.type(torch.float32)
            # img1 = img1.numpy()
            img1 = cv2.resize(img1, (224, 224))
            return img1


    root = somewhere
    datapath = os.path.join(root, somewhere)

    torch.set_num_threads(1)
    batchsize = 32
    num_workers = 2
    epoch = 300

    trainset = ClassDataset(datapath, istrain=1, transform=None)
    trainloader = DataLoader(trainset, batch_size=batchsize, shuffle=True, num_workers=num_workers)

    testset = ClassDataset(datapath, istrain=0, transform=None)
    testloader = DataLoader(testset, batch_size=batchsize, shuffle=False, num_workers=num_workers)

    for e in range(epoch):
        for i, batch in enumerate(trainloader):
            print('train', i)

What I found is that when I comment out the line img1 = img1.astype(np.float32), everything works fine.

I’ve also tried converting the ndarray to a tensor first, changing the data type there, and converting it back (the three commented-out lines), but that resulted in the same problem.

Note that there are many threads running (about 25-30, as shown in the picture). Some people use torch.set_num_threads(1) to restrict the number of threads, but it doesn’t work for me.
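Something else I could try is sketched below. It is untested on my setup and rests on an unconfirmed assumption: that the extra threads come from OpenCV or OpenMP/MKL thread pools started inside each DataLoader worker process, which a torch.set_num_threads(1) call in the main process may not reach. The function name limit_worker_threads is made up for illustration; worker_init_fn, cv2.setNumThreads and torch.set_num_threads are existing calls.

```python
import os


def limit_worker_threads(worker_id=0, num_threads=1):
    """Hypothetical worker_init_fn: cap native thread pools in one worker."""
    # Best-effort hint only: these variables are read when a library starts
    # its thread pool, so they may have no effect if it is already running.
    for var in ("OMP_NUM_THREADS", "MKL_NUM_THREADS", "OPENBLAS_NUM_THREADS"):
        os.environ[var] = str(num_threads)
    try:
        import cv2
        # 0 tells OpenCV to run its functions sequentially
        cv2.setNumThreads(0)
    except ImportError:
        pass
    try:
        import torch
        # Applies to the process that calls it, i.e. this worker
        torch.set_num_threads(num_threads)
    except ImportError:
        pass
    return num_threads


# Assumed usage with the loader from my code:
# trainloader = DataLoader(trainset, batch_size=batchsize, shuffle=True,
#                          num_workers=num_workers,
#                          worker_init_fn=limit_worker_threads)
```

DataLoader calls worker_init_fn once in each worker process with the worker id, so the limits would be applied where the images are actually decoded and converted.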

Thanks a lot!