The state-of-the-art PyTorch model should have the following capabilities:

- all of its layers and operations should be compatible with native mobile devices (Android and iOS)

- for Android: PyTorch -> ONNX -> TensorFlow -> TFLite

- for iOS: PyTorch -> coremltools -> Core ML

- the model should be able to use hardware acceleration on mobile devices (if the device supports it)
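The first hop of the Android path (PyTorch -> ONNX) could look roughly like the sketch below. It assumes a small CNN for 32x32 RGB images with 10 classes, similar in shape to the one in the PyTorch 60-minute blitz tutorial; the `Net` class, the `export_to_onnx` helper, and the output path are illustrative and not taken from my actual project. The tensor names `my_input` and `my_output` match the ones used in the TFLite conversion code later in this post.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class Net(nn.Module):
    """Small CNN for 3x32x32 inputs and 10 classes (illustrative)."""

    def __init__(self):
        super().__init__()
        self.conv1 = nn.Conv2d(3, 6, 5)
        self.pool = nn.MaxPool2d(2, 2)
        self.conv2 = nn.Conv2d(6, 16, 5)
        self.fc1 = nn.Linear(16 * 5 * 5, 120)
        self.fc2 = nn.Linear(120, 84)
        self.fc3 = nn.Linear(84, 10)

    def forward(self, x):
        x = self.pool(F.relu(self.conv1(x)))  # 32x32 -> 28x28 -> 14x14
        x = self.pool(F.relu(self.conv2(x)))  # 14x14 -> 10x10 -> 5x5
        x = torch.flatten(x, 1)               # keep the batch dimension
        x = F.relu(self.fc1(x))
        x = F.relu(self.fc2(x))
        return self.fc3(x)


def export_to_onnx(model, path):
    """Export `model` to ONNX using a dummy 1x3x32x32 input (hypothetical helper)."""
    model.eval()  # switch to inference mode before tracing
    dummy_input = torch.randn(1, 3, 32, 32)
    torch.onnx.export(
        model,
        dummy_input,
        path,
        input_names=["my_input"],
        output_names=["my_output"],
    )
```

Calling `export_to_onnx(Net(), "my_cifar_net.onnx")` would write the ONNX file; in practice the trained weights should be loaded into the model first.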

Any help will be very much appreciated.

Also, I have a two-month time frame for this, so I'll keep posting my progress here in case it helps anyone.

I have created a simple neural net for image classification on the CIFAR dataset (the one from the "60 Minute Blitz" section of the official PyTorch docs). I then converted it along the following path:

PyTorch -> ONNX -> TensorFlow -> TFLite

I'm using the following code to convert .pb -> .tflite:

```python
import tensorflow as tf

# Build a converter from the frozen graph (.pb), naming its input and output tensors.
converter = tf.compat.v1.lite.TFLiteConverter.from_frozen_graph(
    graph_def_file="../models/my_cifar_net.pb",
    input_arrays=["my_input"],
    output_arrays=["my_output"],
)
converter.optimizations = [tf.lite.Optimize.DEFAULT]  # default post-training optimizations
tf_lite_model = converter.convert()

with tf.io.gfile.GFile("../models/my_cifar_net.tflite", "wb") as f:
    f.write(tf_lite_model)
```

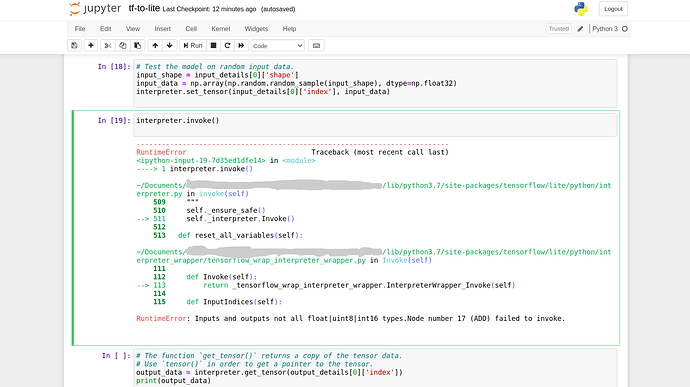

All other converted models (like, .pth, .onnx, .pb) are giving correct output on an example data except .tflite, as I’m getting the following error:
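To make "correct output" concrete, one option is to compare each converted model's output against the PyTorch output numerically. The sketch below is only an illustration: `compare_outputs` is a hypothetical helper, the tolerances are assumptions rather than values I tuned, and it expects the outputs to be already collected as arrays.

```python
import numpy as np


def compare_outputs(reference, candidates, rtol=1e-3, atol=1e-5):
    """Compare each candidate output with the reference output.

    Returns {name: (matches, max_abs_diff)}; max_abs_diff is None
    when the shapes differ and no element-wise comparison is possible.
    """
    ref = np.asarray(reference, dtype=np.float32)
    report = {}
    for name, out in candidates.items():
        out = np.asarray(out, dtype=np.float32)
        if out.shape != ref.shape:
            report[name] = (False, None)
            continue
        max_diff = float(np.max(np.abs(out - ref)))
        matches = bool(np.allclose(out, ref, rtol=rtol, atol=atol))
        report[name] = (matches, max_diff)
    return report
```

Here `reference` would be the PyTorch logits for one example, and `candidates` a dict such as `{"onnx": ..., "pb": ..., "tflite": ...}` holding the outputs of the converted models for the same example.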

I'm using the following library versions:

- numpy==1.18.2

- pandas==1.0.3

- torch==1.5.1

- torchvision==0.6.1

- onnx==1.7.0

- coremltools==4.0b1

- onnxmltools==1.7.0

- onnx-tf==1.6.0

- tensorflow==2.2.0

- tensorflow-addons==0.10.0
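Since converter behaviour tends to depend on the exact library versions, it may help anyone reproducing this to check their environment against the list above. This is a minimal sketch using only the standard library (`importlib.metadata`, Python 3.8+); `check_versions` and `PINNED` are illustrative names.

```python
from importlib import metadata

# Versions copied from the list above.
PINNED = {
    "numpy": "1.18.2",
    "pandas": "1.0.3",
    "torch": "1.5.1",
    "torchvision": "0.6.1",
    "onnx": "1.7.0",
    "coremltools": "4.0b1",
    "onnxmltools": "1.7.0",
    "onnx-tf": "1.6.0",
    "tensorflow": "2.2.0",
    "tensorflow-addons": "0.10.0",
}


def check_versions(pinned):
    """Return {package: (wanted, installed)} for every package that differs.

    `installed` is None when the package is not installed at all.
    """
    mismatches = {}
    for name, wanted in pinned.items():
        try:
            installed = metadata.version(name)
        except metadata.PackageNotFoundError:
            installed = None
        if installed != wanted:
            mismatches[name] = (wanted, installed)
    return mismatches


if __name__ == "__main__":
    for name, (wanted, installed) in check_versions(PINNED).items():
        print(f"{name}: wanted {wanted}, found {installed}")
```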