I am trying to implement a custom direct_conv2d operator in C++ and test it with a PyTorch timm model. I have implemented the algorithm, but when I try to build it into a shared library (.so), I get errors. Here is the CMakeLists.txt file I use to build the shared library:

    cmake_minimum_required(VERSION 3.5 FATAL_ERROR)
    project(custom_direct_conv2d_op LANGUAGES CXX)

    # Set C++ standard to 14
    set(CMAKE_CXX_STANDARD 14)

    set(Torch_DIR "/users/ayavuzyasar/custom_dnns_operators/pysdk/lib/python3.8/site-packages/torch/share/cmake/Torch")
    set(USE_CAFFE2 OFF)
    find_package(Torch REQUIRED)

    add_library(custom_direct_conv2d_op MODULE direct_conv2d.cpp)

    # Link against LibTorch
    target_link_libraries(custom_direct_conv2d_op "${TORCH_LIBRARIES}")

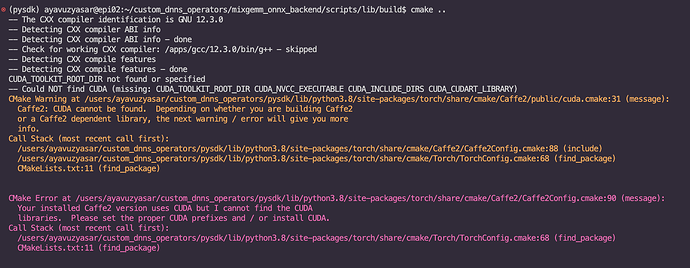

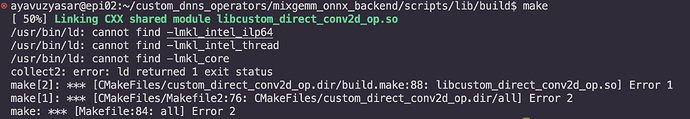

When I try to create a shared library with cmake and make command I got this output:

If you can help me, I would be grateful.

Thanks in advance.