Hey,

Until now I have used binary cross-entropy (BCE) loss, but since I need to switch to a different loss function, I have to change my output so that it conforms to the cross-entropy (CE) format. The result should be exactly the same, right?

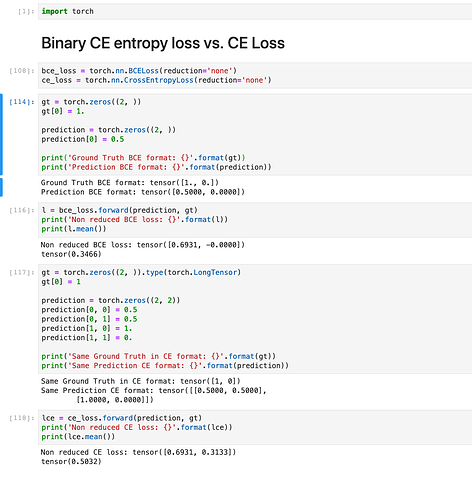
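As a quick check of the premise, here is the two-class case worked out. The notation is introduced here for illustration: $p$ is the predicted probability of class 1, $y \in \{0, 1\}$ is the label, and $\mathbf{q} = (1-p,\ p)$ is the per-class probability vector in CE format.

$$\ell_{\mathrm{BCE}}(p, y) = -\bigl[\,y \log p + (1-y)\log(1-p)\,\bigr]$$

$$\ell_{\mathrm{CE}}(\mathbf{q}, y) = -\log q_y = -\bigl[\,\mathbb{1}[y=1]\log p + \mathbb{1}[y=0]\log(1-p)\,\bigr] = \ell_{\mathrm{BCE}}(p, y)$$

The last step holds because $\mathbb{1}[y=1] = y$ and $\mathbb{1}[y=0] = 1-y$ when $y \in \{0, 1\}$, and averaging over samples preserves the equality. So the two losses should agree, but only if the CE side really receives these probabilities (or their logarithms). A CE implementation that applies its own softmax to the input would instead be scoring $\operatorname{softmax}(\mathbf{q})$, which is a different distribution.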

When I tried a fake, handcrafted example, I did not get the same results for the two loss functions, so I am probably just overlooking something…

Suppose that in binary format my ground truth is [1., 0.] and my prediction is [0.5, 0.]. Then in normal CE format my ground truth should stay the same, just as integers, since the values are now class indices: [1, 0]. My prediction, however, becomes one probability per class: [0.5, 0.5] for the first element and [1.0, 0.0] for the second, right?
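A minimal sketch of this handcrafted comparison, assuming PyTorch (the post does not name the framework) and using `nn.BCELoss`, `nn.CrossEntropyLoss` and `nn.NLLLoss`. The variable names are illustrative. The conversion itself matches the example above; the suspected cause of the mismatch is an assumption, since the notebook isn't included: `nn.CrossEntropyLoss` applies `log_softmax` to its input, so it expects unnormalized logits, not probabilities.

```python
import torch
import torch.nn as nn

# Binary format: one probability per sample, float targets
# (values from the example in the post).
pred_binary = torch.tensor([0.5, 0.0])
target_binary = torch.tensor([1.0, 0.0])

bce = nn.BCELoss()(pred_binary, target_binary)

# CE format: integer class indices, one column per class,
# ordered [P(class 0), P(class 1)] = [1 - p, p].
target_ce = target_binary.long()                               # tensor([1, 0])
probs_ce = torch.stack([1 - pred_binary, pred_binary], dim=1)  # [[0.5, 0.5], [1.0, 0.0]]

# Assumed pitfall: CrossEntropyLoss runs log_softmax on its input,
# so feeding probabilities applies a second softmax on top of them.
ce_on_probs = nn.CrossEntropyLoss()(probs_ce, target_ce)

# Feeding log-probabilities to NLLLoss computes -log q_y directly.
# log(0.0) = -inf only appears in a column that is never selected here.
nll_on_log_probs = nn.NLLLoss()(torch.log(probs_ce), target_ce)

print(f"BCE:                 {bce.item():.4f}")
print(f"CE on probabilities: {ce_on_probs.item():.4f}")
print(f"NLL on log-probs:    {nll_on_log_probs.item():.4f}")
```

Under these assumptions it prints 0.3466 for BCE and for NLL on log-probabilities, but 0.5032 for `CrossEntropyLoss` on raw probabilities. In a real model it is usually more stable to keep the network output as logits and use `nn.CrossEntropyLoss` (or `nn.BCEWithLogitsLoss` in the binary case) rather than taking logs of probabilities.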

Below you can find it in a Jupyter notebook.

Cheers,

Lucas