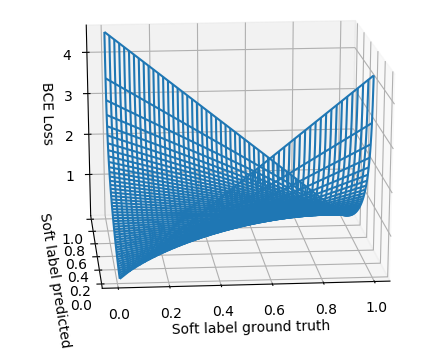

Note that cross-entropy with labels other than exactly 0 or 1 is not symmetric, which could explain the poor performance.

For example, consider this scenario for binary cross-entropy:

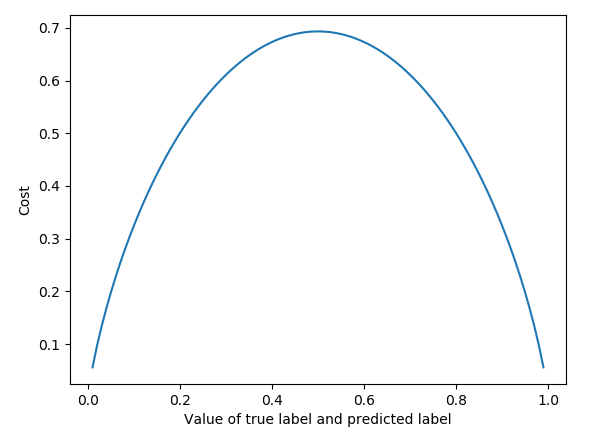
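As a rough numerical check, here is a minimal sketch in plain Python. The helper `binary_cross_entropy` and the clipping constant `eps` are my own illustrative choices, not taken from any particular library. It evaluates the cost once with label and prediction swapped, and once with the prediction equal to the label.

```python
import math

def binary_cross_entropy(y_true, y_pred, eps=1e-12):
    """Binary cross-entropy for a single example (natural log).

    eps is an assumed clipping constant that keeps log() away from 0.
    """
    p = min(max(y_pred, eps), 1.0 - eps)
    return -(y_true * math.log(p) + (1.0 - y_true) * math.log(1.0 - p))

# Swapping label and prediction changes the cost, so it is not symmetric.
cost_ab = binary_cross_entropy(0.2, 0.7)
cost_ba = binary_cross_entropy(0.7, 0.2)

# Prediction equal to the label: zero cost only for hard labels.
cost_hard = binary_cross_entropy(0.0, 0.0)
cost_soft = binary_cross_entropy(0.5, 0.5)

print(f"BCE(y=0.2, p=0.7) = {cost_ab:.4f}")
print(f"BCE(y=0.7, p=0.2) = {cost_ba:.4f}")
print(f"BCE(y=0.0, p=0.0) = {cost_hard:.4f}")
print(f"BCE(y=0.5, p=0.5) = {cost_soft:.4f}")
```

With a soft label, the cost at a perfect prediction equals the entropy of the label itself, so it cannot reach zero.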

Or consider the following, where the ground truth and the predicted labels are shown on the x-axis. You can see that if both are 0, the cost is zero. However, if both are 0.5, the cost is almost 0.7 (ln 2 ≈ 0.693), even though the prediction equals the true label.