Hi, I am building a question-answering model using T5 (Hugging Face) inside a PyTorch Lightning module. When I check the loss, the value I compute myself does not match the loss that Hugging Face returns and that I log through PyTorch Lightning.

```python
import pytorch_lightning as pl
from torch.nn import CrossEntropyLoss
from transformers import T5ForConditionalGeneration


class UQAFineTuneModel(pl.LightningModule):
    def __init__(self):
        super().__init__()
        self.model = T5ForConditionalGeneration.from_pretrained(
            "allenai/unifiedqa-t5-small", return_dict=True
        )
        self.model.train()

    def forward(
        self,
        source_text_input_ids,
        source_text_attention_mask,
        target_text_input_ids=None,
    ):
        # When labels are given, the model also returns its own cross-entropy loss.
        output = self.model(
            input_ids=source_text_input_ids,
            attention_mask=source_text_attention_mask,
            labels=target_text_input_ids,
        )
        return output.loss, output.logits

    def training_step(self, batch, batch_idx):
        source_text_input_ids = batch["source_text_input_ids"]
        source_text_attention_mask = batch["source_text_attention_mask"]
        target_text_input_ids = batch["target_text_input_ids"]
        # labels_attention_mask = batch["target_text_attention_mask"]

        # First forward pass: loss computed by Hugging Face.
        loss, outputs = self(
            source_text_input_ids, source_text_attention_mask, target_text_input_ids
        )

        # Second forward pass, whose logits are used for the manual loss.
        loss_mine = None
        output = self.model(
            input_ids=source_text_input_ids,
            attention_mask=source_text_attention_mask,
            labels=target_text_input_ids,
        )

        # Replace padding (token id 0) with -100 so the manual loss ignores it.
        labels = batch["target_text_input_ids"].clone()
        labels[labels == 0] = -100

        if target_text_input_ids is not None:
            loss_fct = CrossEntropyLoss(ignore_index=-100)
            loss_mine = loss_fct(
                output.logits.view(-1, output.logits.size(-1)), labels.view(-1)
            )
            print(f"loss_Hugginface: {loss.item()}, loss_mine : {loss_mine.item()}")

        self.log("train_loss", loss, prog_bar=True, logger=True)
        return {"loss": loss, "predictions": outputs}
```
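For comparison: `T5ForConditionalGeneration` computes its loss with `CrossEntropyLoss(ignore_index=-100)` applied to the `labels` exactly as they are passed in. In the `training_step` above, `target_text_input_ids` goes to the model unchanged, while `loss_mine` uses a copy in which token id 0 is replaced by `-100`. Assuming 0 is the pad token id (as the `labels == 0` line suggests), the two losses average over different sets of positions. The minimal sketch below reproduces that effect in plain PyTorch with random logits and a hypothetical padded label tensor; the shapes and values are made up for illustration.

```python
import torch
from torch.nn import CrossEntropyLoss

torch.manual_seed(0)

# Hypothetical toy shapes: 2 target sequences of 5 tokens, vocabulary of 10.
batch_size, seq_len, vocab_size = 2, 5, 10
PAD_ID = 0  # assumption: pad token id is 0, as in the post

logits = torch.randn(batch_size, seq_len, vocab_size)
labels = torch.tensor([[4, 7, 2, 0, 0],
                       [3, 9, 1, 5, 0]])  # trailing zeros are padding

loss_fct = CrossEntropyLoss(ignore_index=-100)

# Labels passed unchanged: padding positions count towards the mean.
loss_with_pad = loss_fct(logits.view(-1, vocab_size), labels.view(-1))

# Padding replaced with -100: those positions are excluded from the mean.
masked = labels.clone()
masked[masked == PAD_ID] = -100
loss_without_pad = loss_fct(logits.view(-1, vocab_size), masked.view(-1))

print(f"pads included: {loss_with_pad.item():.4f}, "
      f"pads ignored: {loss_without_pad.item():.4f}")
```

If this is the cause, passing the masked `labels` to the model instead of `target_text_input_ids` should remove this particular discrepancy. T5 replaces `-100` with the pad token id when it shifts the labels right to build the decoder inputs, so the masking does not break decoding.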

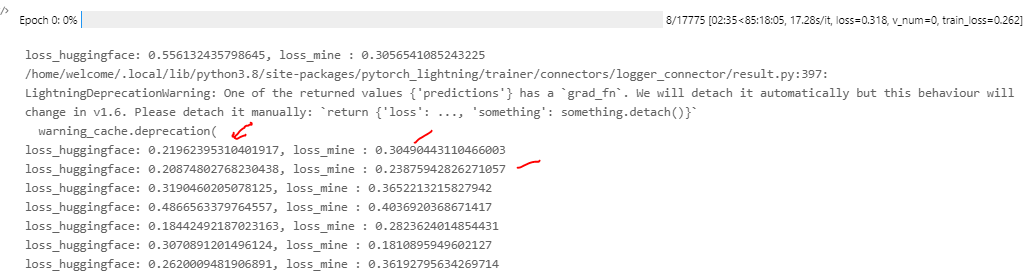

As the image above shows, the two losses are different. Why are they not the same? Any help would be much appreciated. I asked the same question on the Hugging Face forum, but was told to ask here; you can view that discussion here.
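A second difference worth ruling out: `training_step` runs the model twice (`self(...)` and then `self.model(...)`) and takes `loss_mine` from the second pass. Lightning puts the module in train mode during training, and T5's dropout (`dropout_rate` defaults to 0.1 in `T5Config`) samples a fresh mask on every forward call, so the two passes can return different logits for the same batch. The sketch below shows the effect with a toy `nn.Sequential` standing in for T5; it is a hypothetical illustration, not the real model.

```python
import torch
from torch import nn

torch.manual_seed(0)

# Hypothetical stand-in for T5: a linear layer followed by dropout (p=0.1).
model = nn.Sequential(nn.Linear(8, 256), nn.Dropout(p=0.1))
x = torch.randn(4, 8)

model.train()  # dropout active, as during training_step
train_a, train_b = model(x), model(x)

model.eval()   # dropout disabled
eval_a, eval_b = model(x), model(x)

print(torch.equal(train_a, train_b))  # False: each call samples a new dropout mask
print(torch.equal(eval_a, eval_b))    # True: no randomness in eval mode
```

Computing `loss_mine` from the logits of the same forward pass that produced `loss` (the `outputs` returned by `self(...)`) avoids this source of mismatch.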