Hi everyone!

Could you help me with the Cross Entropy Loss in PyTorch?

I searched the forum for a post with the same question but didn’t find one, so I’m opening a new topic on Cross Entropy Loss even though there are already many about it.

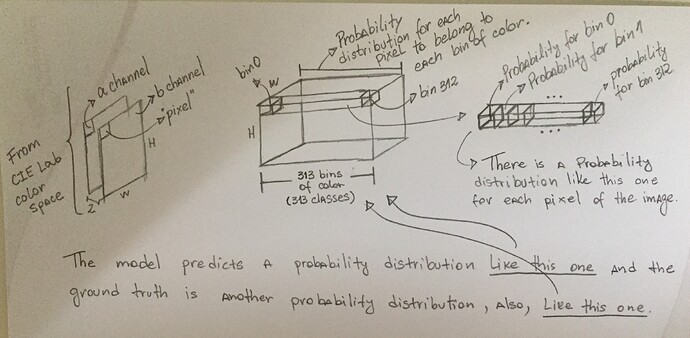

My problem is the following. The images I’m working with are in the CIE Lab color space. My CNN takes the L channel of the image as input, and its output is a tensor with the same spatial dimensions as the L channel but with 313 channels, i.e. shape (313, w, h). So for each pixel of the L channel there are 313 values. The target is generated from the a and b channels of the image: for each pixel, a probability distribution is generated from its (a, b) pair, as shown in the attached figure.

So I would like to use Cross Entropy Loss to calculate the loss between these two probability distributions. In this case, each of the 313 color bins shown in the image would be a class with its own probability at each pixel.
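To make the question concrete, here is a minimal sketch of the per-pixel cross entropy between two distributions that I have in mind. It is written by hand with `log_softmax` instead of `nn.CrossEntropyLoss`. The function name `soft_cross_entropy`, the batch size of 2, and the 8x8 image size are only assumptions for illustration, and the tensors are random placeholders rather than real data.

```python
import torch
import torch.nn.functional as F


def soft_cross_entropy(logits, target_probs):
    """Cross entropy between predicted and target distributions, per pixel.

    logits:       (N, 313, H, W) raw network outputs (no softmax applied)
    target_probs: (N, 313, H, W) target distribution, sums to 1 over dim 1
    """
    # Log-probabilities over the 313 color bins for every pixel.
    log_probs = F.log_softmax(logits, dim=1)
    # -sum_c p_target(c) * log p_pred(c), giving one value per pixel: (N, H, W).
    per_pixel = -(target_probs * log_probs).sum(dim=1)
    # Average over all pixels and all images in the batch.
    return per_pixel.mean()


# Random placeholders standing in for the CNN output and the (a, b) target.
torch.manual_seed(0)
logits = torch.randn(2, 313, 8, 8)
target_probs = torch.softmax(torch.randn(2, 313, 8, 8), dim=1)

loss = soft_cross_entropy(logits, target_probs)
print(loss.item())
```

If the target happens to be one-hot, this should reduce to the usual cross entropy with class indices.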

I’ve seen in other posts that you can’t use a probability distribution as the target for Cross Entropy Loss in PyTorch. So how would this loss be calculated? It must be computed for every pixel in the image, and each pixel has its own probability distribution. How would the target be represented?

Would the target be a single matrix in which each position holds the index of the color bin (the class) with the highest probability for that pixel? How would Cross Entropy Loss compare a probability distribution with a color bin index that represents a class? Does Cross Entropy Loss accept a 3D tensor as input and compute the loss over a probability distribution for each pixel?
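For reference, this is a sketch of the class-index variant I am asking about, assuming the target is reduced to the most probable bin per pixel with `argmax`. As far as I understand, `nn.CrossEntropyLoss` accepts an input of shape (N, C, H, W) together with an integer target of shape (N, H, W). The batch size and image size are again made up, and the tensors are random placeholders.

```python
import torch
import torch.nn as nn

# Random placeholders standing in for the CNN output and the (a, b) target.
torch.manual_seed(0)
logits = torch.randn(2, 313, 8, 8)  # (N, C, H, W), raw scores, no softmax
target_probs = torch.softmax(torch.randn(2, 313, 8, 8), dim=1)

# Collapse each pixel's distribution to the index of its most probable bin.
target_bins = target_probs.argmax(dim=1)  # (N, H, W), dtype int64

# CrossEntropyLoss applies log_softmax internally and averages over pixels.
criterion = nn.CrossEntropyLoss()
loss = criterion(logits, target_bins)
print(target_bins.shape, loss.item())
```

This throws away everything in the target distribution except the top bin, which is exactly what I am unsure about.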

I tried to illustrate part of what I wrote in the drawing to make it easier to understand.

Thanks for your time and help.

Best regards,

Matheus Santos.