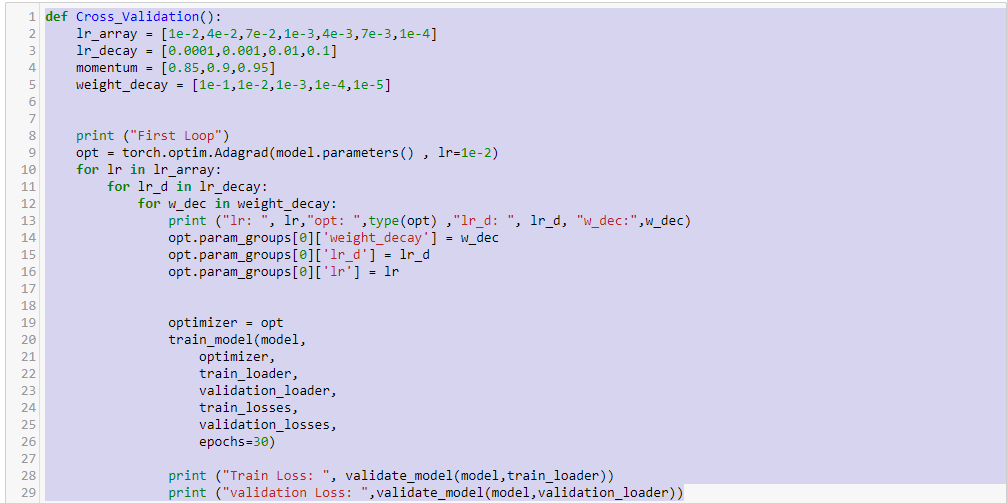

I'm trying out different hyper-parameters for my model, and for that I need to cross-validate over them.

It looks something like this:

The issue is that the model needs to be reset after each hyper-parameter variation; otherwise the "learning" will continue where the last run stopped (I need to re-initialize the weights after each iteration).

I'm having trouble doing that; help would be much appreciated.

If you are already using a weight init function, you could just call it again to re-initialize the parameters.

Alternatively, you could create a completely new instance of your model. With this approach you would have to re-create the optimizer as well, since the old optimizer still references the old model's parameters.
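A minimal sketch of the "new instance" approach, assuming a hypothetical `make_model()` factory that stands in for the model class from the thread, plus a made-up learning-rate grid and random data:

```python
import torch
import torch.nn as nn

def make_model():
    # Hypothetical stand-in for the real model class.
    return nn.Sequential(nn.Linear(4, 8), nn.ReLU(), nn.Linear(8, 2))

def run_trial(lr, steps=5):
    # A fresh instance gets freshly initialized weights.
    model = make_model()
    # The optimizer must be built from the new model's parameters.
    optimizer = torch.optim.SGD(model.parameters(), lr=lr)
    initial = model[0].weight.detach().clone()

    # Random toy data, only to make the loop runnable.
    x, y = torch.randn(16, 4), torch.randn(16, 2)
    for _ in range(steps):
        optimizer.zero_grad()
        loss = nn.functional.mse_loss(model(x), y)
        loss.backward()
        optimizer.step()
    return initial, loss.item()

# One independent run per hyper-parameter value.
results = {lr: run_trial(lr) for lr in (0.1, 0.01)}
```

Because nothing is shared between trials, no learned state can leak from one hyper-parameter setting into the next.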

Another way would be to iterate over the layers that hold parameters and call `.reset_parameters()` on each of them.
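A sketch of that third option could look like the following. The helper name `reset_all_parameters` and the small `nn.Sequential` model are assumptions for illustration; the helper simply skips modules such as `nn.ReLU` that do not define `reset_parameters()`:

```python
import torch
import torch.nn as nn

def reset_all_parameters(model):
    # modules() yields the model itself and every nested submodule.
    for module in model.modules():
        reset = getattr(module, "reset_parameters", None)
        if callable(reset):
            reset()

# Hypothetical model, only for demonstration.
model = nn.Sequential(nn.Linear(4, 8), nn.ReLU(), nn.Linear(8, 2))

before = model[0].weight.detach().clone()
reset_all_parameters(model)
after = model[0].weight.detach().clone()
```

Note that this only touches the model. An optimizer with internal state (momentum buffers, Adam statistics) would still need to be re-created for each run.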

Thanks for the quick reply.

I'm fairly new to the PyTorch framework and still trying to get a grasp on it.

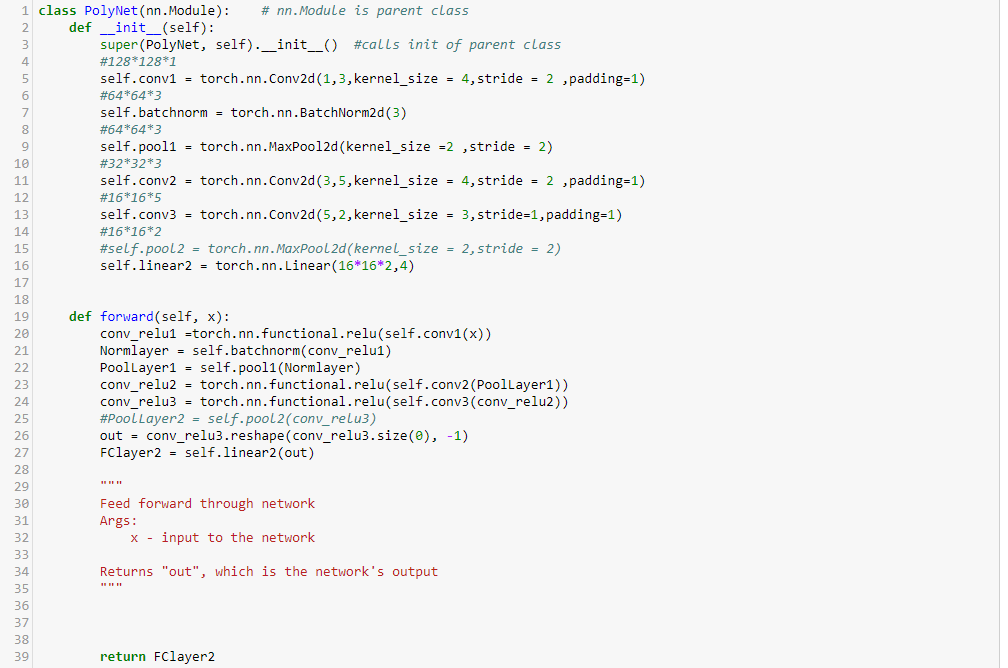

That's the model class:

How could I implement the first option you offered? I think it would be the best approach of the three.

You can define a function that initializes the weights based on the layer type and apply it to your model:

    import torch.nn as nn

    def weights_init(m):
        if isinstance(m, nn.Conv2d):
            # Xavier init with the gain recommended for ReLU (xavier_uniform_ replaces the deprecated xavier_uniform)
            nn.init.xavier_uniform_(m.weight.data, gain=nn.init.calculate_gain('relu'))
            m.bias.data.zero_()
        elif isinstance(m, nn.Linear):
            ...

    model.apply(weights_init)

You can find the different init functions in the `torch.nn.init` documentation.

Unfortunately, I'm still having trouble implementing that (I'm not sure which initialization I need to use or how to use the init functions).

Is it somehow possible to "save" the weights before the cross-validation and restore the "saved" weights after each iteration?

That’s also a good idea. You can save the state_dict and reload it after your experiment. Have a look at the Serialization Semantics.
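A minimal sketch of the save-and-reload idea, using an in-memory copy rather than a file. The tiny `nn.Linear` model, the learning-rate grid, and the random data are assumptions for illustration. The `copy.deepcopy` matters because `state_dict()` returns references to the live parameter tensors, so without it the "saved" weights would change during training:

```python
import copy
import torch
import torch.nn as nn

# Hypothetical model, only for demonstration.
model = nn.Linear(4, 2)

# Snapshot the initial weights once, before any training.
initial_state = copy.deepcopy(model.state_dict())

x, y = torch.randn(16, 4), torch.randn(16, 2)
start_weights = []

for lr in (0.1, 0.01):
    # Restore the snapshot so every run starts from the same weights.
    model.load_state_dict(initial_state)
    start_weights.append(model.weight.detach().clone())

    # A new optimizer per run avoids carrying over optimizer state.
    optimizer = torch.optim.SGD(model.parameters(), lr=lr)
    for _ in range(5):
        optimizer.zero_grad()
        loss = nn.functional.mse_loss(model(x), y)
        loss.backward()
        optimizer.step()
```

Unlike re-initializing, this gives every hyper-parameter setting the exact same starting point, which can make the runs easier to compare.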

You use the `weights_init` function just by calling `model.apply(weights_init)`. `apply` calls the function on every submodule of the model, and finally on the model itself.
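To make the traversal concrete, here is a small self-contained sketch. The model layout, the `visited` list, and the choice of Xavier initialization for the `nn.Linear` layer are assumptions for illustration, not a recommendation from this thread:

```python
import torch
import torch.nn as nn

visited = []  # records which modules apply() hands to the function

def weights_init(m):
    visited.append(type(m).__name__)
    if isinstance(m, (nn.Conv2d, nn.Linear)):
        # Assumed choice: same Xavier init for conv and linear layers.
        nn.init.xavier_uniform_(m.weight, gain=nn.init.calculate_gain("relu"))
        if m.bias is not None:
            nn.init.zeros_(m.bias)

# Hypothetical model for a 1x28x28 input.
model = nn.Sequential(
    nn.Conv2d(1, 4, kernel_size=3),
    nn.ReLU(),
    nn.Flatten(),
    nn.Linear(4 * 26 * 26, 10),
)

model.apply(weights_init)
print(visited)
```

Calling `model.apply(weights_init)` again at the start of each cross-validation round draws new random weights, which gives the reset asked about above.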