Hello everyone,

Sorry to disturb you. When I use cross-attention, all of the attention scores come out as 1. To be honest, I suspect the cross-attention layer I built is wrong. My code, the model summary, and the scores are below. Could you please give me some advice on how to fix this problem?

Thanks

Best wishes

    import math

    import torch
    from torch import nn


    class DotProductAttention(nn.Module):
        def __init__(self, key_size, num_hiddens, dropout, **kwargs):
            super(DotProductAttention, self).__init__(**kwargs)
            self.key_size = key_size
            self.num_hiddens = num_hiddens
            self.dropout = nn.Dropout(dropout)
            # Projects queries from key_size to num_hiddens so they match the keys
            self.W_k = nn.Linear(key_size, num_hiddens, bias=False)

        def forward(self, queries, keys, values):
            queries = self.W_k(queries)
            # Scale by the feature dimension used in the dot product
            d = queries.shape[-1]
            # Swap the last two dimensions of `keys` before the batched matmul
            scores = torch.bmm(queries, keys.transpose(1, 2)) / math.sqrt(d)
            scores = nn.functional.softmax(scores, dim=-1)
            print('scores', scores.shape, scores)
            return torch.bmm(self.dropout(scores), values)


    if __name__ == '__main__':
        X = torch.normal(0, 1, (14, 1, 384))
        Y = torch.normal(0, 1, (14, 1, 1024))
        print('X', X)
        print('Y', Y)

        # hyperparameters  #t_a_a
        key_size = 384
        num_hiddens = 1024
        dropout = 0.5

        model = DotProductAttention(key_size, num_hiddens, dropout)
        print(model)
        output = model(X, Y, Y)
        print('output shape: ', output.shape)

Model summary and scores:

    DotProductAttention(
      (dropout): Dropout(p=0.5, inplace=False)
      (W_k): Linear(in_features=384, out_features=1024, bias=False)
    )

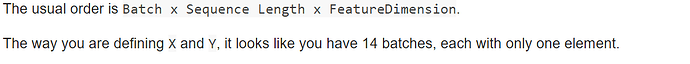

    scores torch.Size([14, 1, 1]) tensor([[[1.]],

            [[1.]],

            [[1.]],

            [[1.]],

            [[1.]],

            [[1.]],

            [[1.]],

            [[1.]],

            [[1.]],

            [[1.]],

            [[1.]],

            [[1.]],

            [[1.]],

            [[1.]]], grad_fn=)

    output shape: torch.Size([14, 1, 1024])
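
One thing that may be worth checking: the scores have shape (14, 1, 1), so the softmax over the last dimension normalizes a single value per query, and a single value always normalizes to 1. The sketch below illustrates this with a plain-Python `softmax` helper (a hypothetical stand-in for `nn.functional.softmax`, written here only for illustration) and made-up raw scores. It is not a diagnosis of the layer itself, only a check of what softmax does with one key versus several.

```python
import math


def softmax(row):
    """Numerically stable softmax over a plain list of floats."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


# One key per query, as in the (14, 1, 1) score tensor:
# whatever the raw score is, the single weight is 1.
single_key = [softmax([s]) for s in (-3.2, 0.0, 7.5)]
print(single_key)

# Three keys per query (hypothetical raw scores):
# the weights now differ and still sum to 1.
three_keys = softmax([0.5, 1.5, -1.0])
print(three_keys)
```

If this is the cause, inputs with more than one key position per batch element (for example `Y` of shape (14, 5, 1024) instead of (14, 1, 1024)) should give scores of shape (14, 1, 5) that are no longer all 1.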