Hello,

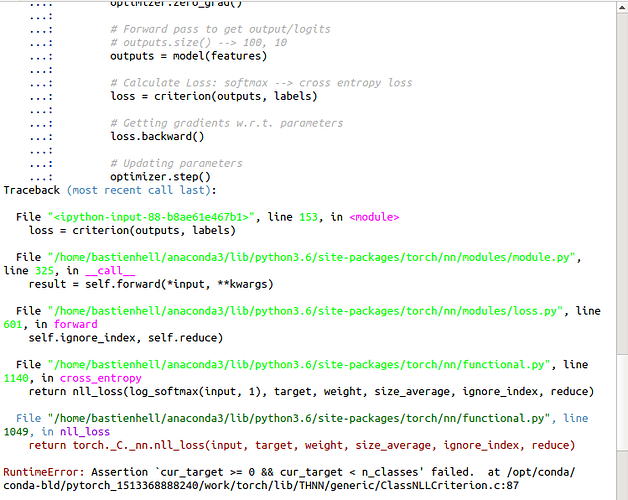

I’m training on my dataset using an RNN, and I got this error:

from __future__ import print_function
import torch
import torch.nn as nn
import torch.nn.functional as F
import torch.optim as optim
from torch.utils.data import Dataset, DataLoader
from torch.autograd import Variable
import numpy as np
from Data import Data

'''
STEP 1: LOADING DATASET
'''
features_train, labels_train = Data('s2-gap-12dates.csv', train=True)
features_test, labels_test = Data('s2-gap-12dates.csv', train=False)

features_train = features_train.transpose(0,2,1)
features_test = features_test.transpose(0,2,1)

class train_dataset(Dataset):
    def __init__(self):
        self.len = features_train.shape[0]
        self.features = torch.from_numpy(features_train)
        self.labels = torch.from_numpy(labels_train)

    def __getitem__(self, index):
        return self.features[index], self.labels[index]

    def __len__(self):
        return self.len

class test_dataset(Dataset):
    def __init__(self):
        self.len = features_test.shape[0]
        self.features = torch.from_numpy(features_test)
        self.labels = torch.from_numpy(labels_test)

    def __getitem__(self, index):
        return self.features[index], self.labels[index]

    def __len__(self):
        return self.len

train_dataset = train_dataset()
test_dataset = test_dataset()

# Training settings
batch_size = 20
n_iters = 3000
num_epochs = n_iters / (len(train_dataset) / batch_size)
num_epochs = int(num_epochs)

#------------------------------------------------------------------------------
'''
STEP 2: MAKING DATASET ITERABLE
'''
train_loader = torch.utils.data.DataLoader(dataset=train_dataset,
                                           batch_size=batch_size,
                                           shuffle=True,
                                           drop_last=True)

test_loader = torch.utils.data.DataLoader(dataset=test_dataset,
                                          batch_size=batch_size,
                                          shuffle=False,
                                          drop_last=True)

'''
STEP 3: CREATE MODEL CLASS
'''
class RNNModel(nn.Module):
    def __init__(self, input_dim, hidden_dim, layer_dim, output_dim):
        super(RNNModel, self).__init__()
        # Hidden dimensions
        self.hidden_dim = hidden_dim

        # Number of hidden layers
        self.layer_dim = layer_dim

        # Building your RNN
        # batch_first=True causes input/output tensors to be of shape
        # (batch_dim, seq_dim, feature_dim)
        self.rnn = nn.RNN(input_dim, hidden_dim, layer_dim, batch_first=True, nonlinearity='tanh')

        # Readout layer
        self.fc = nn.Linear(hidden_dim, output_dim)

    def forward(self, x):
        # Initialize hidden state with zeros
        h0 = Variable(torch.zeros(self.layer_dim, x.size(0), self.hidden_dim))

        # Run the RNN over the whole sequence, then read out the last time step
        out, hn = self.rnn(x, h0)
        out = self.fc(out[:, -1, :])
        # out.size() --> batch_size, output_dim (20, 10 with the settings used here)
        return out

'''
STEP 4: INSTANTIATE MODEL CLASS
'''
input_dim = 20
hidden_dim = 69
layer_dim = 1
output_dim = 10

model = RNNModel(input_dim, hidden_dim, layer_dim, output_dim)

'''
STEP 5: INSTANTIATE LOSS CLASS
'''
criterion = nn.CrossEntropyLoss()

'''
STEP 6: INSTANTIATE OPTIMIZER CLASS
'''
learning_rate = 0.1

optimizer = torch.optim.SGD(model.parameters(), lr=learning_rate)

'''
STEP 7: TRAIN THE MODEL
'''
# Number of steps to unroll
seq_dim = 12

for i, (features, labels) in enumerate(train_loader):
    features = features.type(torch.FloatTensor)
    features = Variable(features)
    #labels = labels.view(-1)
    labels = labels.type(torch.LongTensor)
    labels = Variable(labels)

    # Clear gradients w.r.t. parameters
    optimizer.zero_grad()

    # Forward pass to get output/logits
    # outputs.size() --> batch_size, output_dim (20, 10 here)
    outputs = model(features)

    # Calculate Loss: softmax --> cross entropy loss
    loss = criterion(outputs, labels)

    # Getting gradients w.r.t. parameters
    loss.backward()

    # Updating parameters
    optimizer.step()
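
For reference, here is a minimal sketch of the shapes this setup seems to expect, using random tensors instead of the real data. It assumes each batch of features is (batch_size, seq_dim, input_dim) = (20, 12, 20) and that the labels are a 1-D tensor of class indices in the range 0 to 9. Both are guesses based on the settings above, not something confirmed from the CSV or the `Data` loader.

```python
import torch
import torch.nn as nn

# Assumed sizes, copied from the settings in the post; the real data may differ.
batch_size, seq_dim, input_dim = 20, 12, 20
hidden_dim, layer_dim, output_dim = 69, 1, 10

rnn = nn.RNN(input_dim, hidden_dim, layer_dim, batch_first=True, nonlinearity='tanh')
fc = nn.Linear(hidden_dim, output_dim)
criterion = nn.CrossEntropyLoss()

# Random stand-ins for one batch of features and labels.
x = torch.randn(batch_size, seq_dim, input_dim)   # float32, (batch, seq, feature)
y = torch.randint(0, output_dim, (batch_size,))   # int64 class indices, shape (batch,)

h0 = torch.zeros(layer_dim, batch_size, hidden_dim)
out, hn = rnn(x, h0)          # out: (batch, seq, hidden)
logits = fc(out[:, -1, :])    # last time step only: (batch, output_dim)
loss = criterion(logits, y)   # scalar loss

print(x.shape, out.shape, logits.shape, y.shape, loss.shape)
```

If the real batches from `train_loader` do not match these shapes (for example, labels with an extra dimension, or a last feature dimension different from `input_dim`), that mismatch would be the first thing to check.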