It appears that for CrossEntropyLoss, .to() doesn't move the weight tensor to the same device as the module. Is this by design, or is it a bug that has been fixed in newer releases?

Minimal example, adapted from the usage doc:

>>> import torch

>>> import torch.nn as nn

>>> loss = nn.CrossEntropyLoss(weight=torch.FloatTensor([1, 2, 3])).cuda()

>>> input = torch.randn(3, 5, requires_grad=True).cuda()

>>> target = torch.empty(3, dtype=torch.long).random_(5).cuda()

>>> output = loss(input, target)

It throws the following exception:

RuntimeError: Expected all tensors to be on the same device, but found at least two devices, cpu and cuda:0!

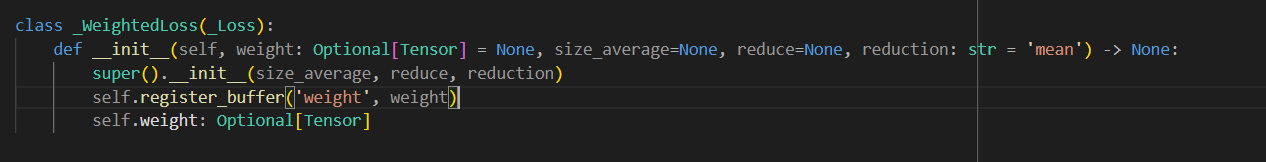

Looking at the _WeightedLoss module, the weight argument is registered as a buffer. So I assume it should be moved to the same device when .to() is called on the loss module?
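
One way to check that assumption is to inspect the buffer directly before and after the move. The following is only a minimal sketch, not a confirmed diagnosis: it falls back to the CPU when CUDA is unavailable, and it uses five weights rather than three because the weight tensor needs one entry per class (the input here has 5 classes). The names `device`, `logits`, and `buffer_names` are my own.

```python
import torch
import torch.nn as nn

# Assumption: use the GPU when present, otherwise stay on the CPU.
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

# One weight per class; the logits below have 5 classes.
loss = nn.CrossEntropyLoss(weight=torch.tensor([1.0, 2.0, 3.0, 4.0, 5.0]))

# List the registered buffers to see whether `weight` is among them.
buffer_names = [name for name, _ in loss.named_buffers()]
print(buffer_names)

# Module.to() returns the module itself; reassigning keeps the intent explicit.
loss = loss.to(device)
print(loss.weight.device)

# Create the inputs directly on the target device so they stay leaf tensors.
logits = torch.randn(3, 5, device=device, requires_grad=True)
target = torch.randint(0, 5, (3,), device=device)

output = loss(logits, target)
output.backward()
```

If `loss.weight.device` prints the expected device here but the error still shows up in the real code, the weight is probably reaching the loss through some other path, for example a tensor passed to the functional API or a loss object that was rebuilt after the move.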