I know that `CrossEntropyLoss` expects raw logits (unnormalized scores), not probabilities, as input.

My problem is this: from what I learned in textbooks and courses, cross entropy for each data sample should be computed at every output node, and those per-node values should then be summed.
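Written out for one sample with $K$ output nodes, one common textbook form of this sum is the following. The notation is my own and assumes the categorical form: $z$ is the logit vector, $p$ the softmax probabilities, and $y$ the target distribution, which is one-hot when the label is a single class.

$$
L = -\sum_{k=0}^{K-1} y_k \log p_k, \qquad p_k = \frac{e^{z_k}}{\sum_{j=0}^{K-1} e^{z_j}}
$$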

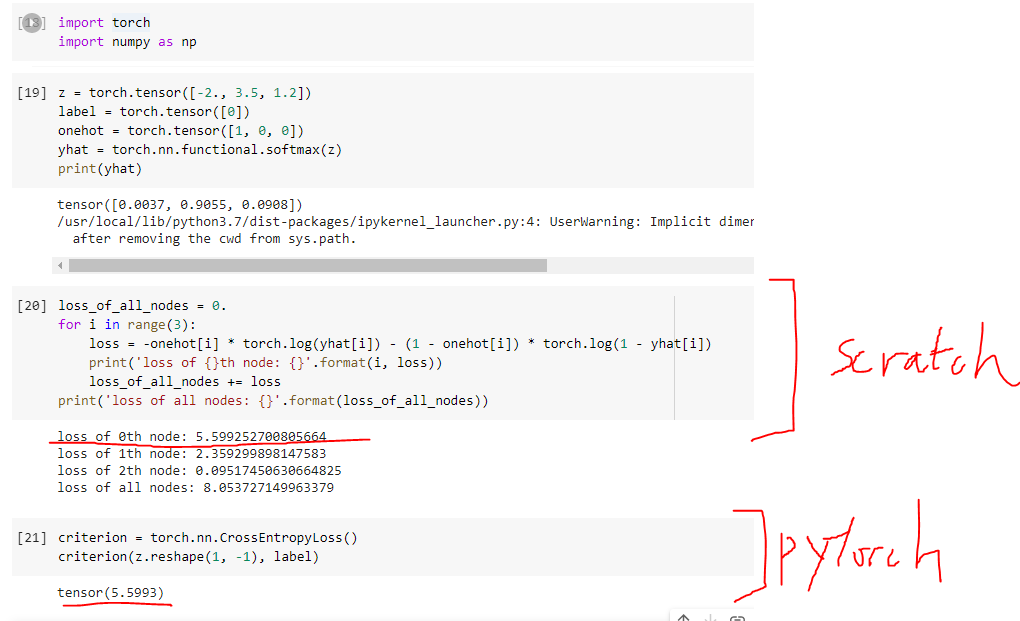

This example assumes that a single data sample is passed through the network, which produces the output logit vector z. There are three classes, and the label for this sample is 0.
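Here is a minimal sketch of this setup for comparing the two computations side by side. It is plain Python rather than PyTorch, and the logit values are made up because the ones in the image are not reproduced here. One function sums $-y_k \log p_k$ over all three output nodes with a one-hot target (assuming that is the textbook form meant). The other computes only the label's term, $-\log p_0$, using the log-sum-exp trick.

```python
import math

def softmax(z):
    # Subtract the max logit for numerical stability; the result is unchanged.
    m = max(z)
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy_full_sum(z, label):
    # Textbook-style: sum -y_k * log(p_k) over every output node,
    # where y is the one-hot target vector for `label`.
    p = softmax(z)
    y = [1.0 if k == label else 0.0 for k in range(len(z))]
    return -sum(y_k * math.log(p_k) for y_k, p_k in zip(y, p))

def cross_entropy_label_only(z, label):
    # Only the label's node: -log(p_label) = logsumexp(z) - z[label].
    m = max(z)
    log_sum_exp = m + math.log(sum(math.exp(v - m) for v in z))
    return log_sum_exp - z[label]

# Hypothetical logits for three classes; the label is class 0.
z = [2.0, 1.0, 0.1]
label = 0

full = cross_entropy_full_sum(z, label)
single = cross_entropy_label_only(z, label)
print(f"full sum over nodes: {full:.6f}")
print(f"label node only:     {single:.6f}")
```

Running it prints the two values so they can be compared directly; changing `label` or the entries of `z` lets you check other cases.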

Why does it only compute the loss at the node for the label's class, instead of summing the losses over all output nodes?