They take the weights into account, but the biases are not included. For an exact memory size calculation the bias should be included as well, is that right?

Yes, for the exact calculation you could add the bias shape, but it can be skipped as it’s usually a small overhead.

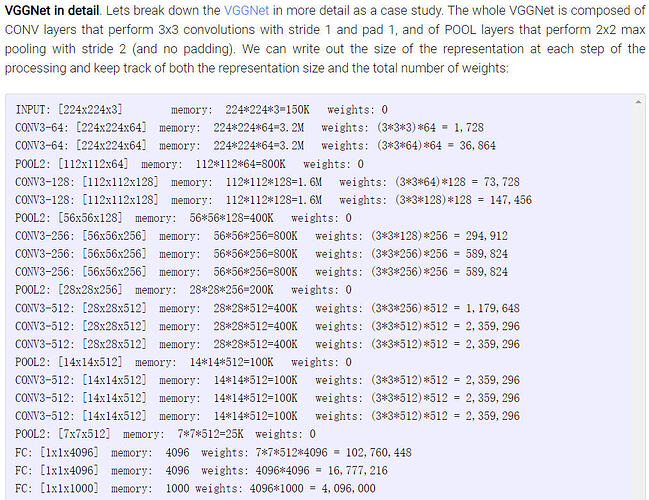

E.g. the first conv layer:

    conv = nn.Conv2d(3, 64, 3, padding=1)

would create an activation of shape [batch_size, 64, 224, 224] = 3211264*batch_size elements (assuming a 224x224 input, since padding=1 keeps the spatial size), and has a weight of shape [64, 3, 3, 3] = 1728 elements and a bias of shape [64] = 64 elements.

Given this large difference you can decide if the exact calculation would yield any important information.
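The numbers for the first layer can be reproduced with plain arithmetic. This is only a sketch: the 224x224 input size is inferred from the activation shape above, and `batch_size = 1` is a hypothetical value picked for illustration.

```python
from math import prod

# Assumption: 224x224 input; padding=1 with a 3x3 kernel keeps height and width.
batch_size = 1  # hypothetical value, scale as needed

activation_shape = (batch_size, 64, 224, 224)
weight_shape = (64, 3, 3, 3)  # [out_channels, in_channels, kernel_h, kernel_w]
bias_shape = (64,)

activation_elems = prod(activation_shape)
weight_elems = prod(weight_shape)
bias_elems = prod(bias_shape)

print(activation_elems)  # 3211264 for batch_size = 1
print(weight_elems)      # 1728
print(bias_elems)        # 64

# Fraction of the activation size that the bias represents
print(bias_elems / activation_elems)
```

Even for a single sample, the bias is a tiny fraction of the activation size, which is why it is often left out of rough estimates.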

Note that in the later layers the gap between the weight and bias sizes is even larger, so the bias makes up an even smaller fraction of the parameters.

E.g.:

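    import torch.nn as nn

    conv = nn.Conv2d(256, 512, 3)

    # number of weight elements: 512 * 256 * 3 * 3
    print(conv.weight.nelement())
    # 1179648

    # number of bias elements: one per output channel
    print(conv.bias.nelement())
    # 512

    # bias size relative to weight size
    print(conv.bias.nelement() / conv.weight.nelement())
    # 0.00043402777777777775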
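If you do want the exact parameter memory including the bias, one way is to multiply each parameter's element count by its element size in bytes. This is a minimal sketch: `param_bytes` is a hypothetical helper name, and the byte counts in the comment assume the default `float32` dtype.

```python
import torch.nn as nn


def param_bytes(module: nn.Module) -> dict:
    """Return the memory in bytes used by each parameter of `module`."""
    return {
        name: p.nelement() * p.element_size()
        for name, p in module.named_parameters()
    }


conv = nn.Conv2d(256, 512, 3)
sizes = param_bytes(conv)

print(sizes)                # {'weight': 4718592, 'bias': 2048} with float32 parameters
print(sum(sizes.values()))  # total parameter memory in bytes
```

This only covers the parameters; activations, gradients, and optimizer state would have to be counted separately.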

It is so convenient to compute the size of the weights and biases with PyTorch, great!