Hello, I am working on a task that involves speech recognition using CTC Loss.

My setup is nothing unusual: I use PyTorch’s CTC Loss, but the loss value keeps coming out as inf.

I tried setting zero_infinity=True, but then the loss value is very small and training does not progress.

What should I do in this case? I’ve tried many different solutions, but I don’t understand why it behaves this way.

I also found an article saying the cause is related to sequence lengths. In my case, though, the target length is smaller than the input length; as far as I can tell, the input length is the larger one.
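
For context, here is a minimal sketch of the length condition as I understand it, in plain Python. CTC needs at least one input frame per target label, plus one extra blank frame between every pair of identical adjacent labels. If a sample has fewer frames than that, no valid alignment exists and the loss for that sample is inf. The helper names `min_ctc_input_length` and `find_infeasible` and the toy data are made up for illustration; they are not part of PyTorch or of my code.

```python
def min_ctc_input_length(target):
    """Smallest number of input frames that can align to `target` under CTC."""
    # Identical neighbouring labels must be separated by a blank frame.
    repeats = sum(1 for a, b in zip(target, target[1:]) if a == b)
    return len(target) + repeats


def find_infeasible(input_lengths, targets):
    """Return indices of samples whose input is too short for their target."""
    return [
        i
        for i, (n, t) in enumerate(zip(input_lengths, targets))
        if n < min_ctc_input_length(t)
    ]


# Toy data (hypothetical): label ids, with 0 reserved for the blank
targets = [[3, 3, 5], [1, 2], [4, 4, 4, 4]]
input_lengths = [4, 2, 6]

bad = find_infeasible(input_lengths, targets)
print(bad)  # indices of samples that would produce an inf loss
```

Note that the comparison has to be done per sample with the real (unpadded) lengths, since a single too-short sample is enough to make a batch-averaged loss inf.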

I really don’t know why.

I would really appreciate your advice.

Below is my code.

    criterion_CTC = nn.CTCLoss(blank=0, reduction='mean', zero_infinity=False).to(device)

and in the training phase:

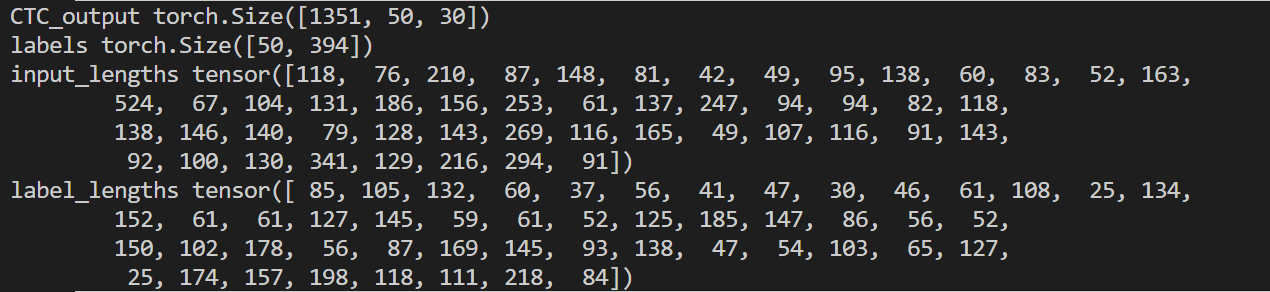

    CTC_output, LM_output = model(input_embeddings_batch, input_lengths, input_attn_mask_invert_batch, input_attn_mask_batch, target_ids_batch)
    CTC_output = F.log_softmax(CTC_output, dim=2)
    CTC_output = CTC_output.transpose(0, 1)  # [113, 80, 27]
    # [sequence, batch, cls probability] => if the input sequence is too short
    # to be aligned with the target sequence, the inf problem occurs
    print('CTC_output', CTC_output.size())  # 1351, 80, 29
    print('labels', labels.size())  # 80, 394
    print('input_lengths', input_lengths)  # 80
    print('label_lengths', label_lengths)  # 80
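
To narrow this down, one thing that might help is computing the loss per sample instead of averaged over the batch. The sketch below is a small standalone reproduction, not my actual training code: the tensor sizes and values are made up, and it only assumes the standard `nn.CTCLoss` call with `reduction='none'`. It shows that one sample whose target is longer than its input is enough to get inf.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

torch.manual_seed(0)
T, N, C = 5, 2, 4  # time steps, batch size, classes (index 0 is the blank)

# Random stand-in for the model output, shaped [T, N, C] as CTCLoss expects
log_probs = F.log_softmax(torch.randn(T, N, C), dim=2)

# Padded targets; values past each target length are ignored by the loss
targets = torch.tensor([[1, 2, 3, 1, 1, 1],
                        [1, 2, 3, 1, 2, 3]])
input_lengths = torch.tensor([5, 5])
target_lengths = torch.tensor([3, 6])  # sample 1 needs 6 frames but has 5

# reduction='none' returns one loss value per sample
ctc = nn.CTCLoss(blank=0, reduction='none', zero_infinity=False)
per_sample = ctc(log_probs, targets, input_lengths, target_lengths)

is_inf = torch.isinf(per_sample).tolist()
print(per_sample)
print(is_inf)  # True marks samples with no valid alignment
```

Running the same check on a real batch, with the actual `input_lengths` and `label_lengths`, should show whether every sample is inf or only a few of them.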

The shape of each component and the results are shown below.

Any comments would be greatly appreciated. Thank you very much.