Firstly, this is my environment:

- one RTX 2060 card;
- Debian buster;
- CUDA 9.2 driver installed from Debian's apt repository;
- Python 3.6 and PyTorch installed via Anaconda;
- I have tried a tiny GPU run in PyTorch and it works.

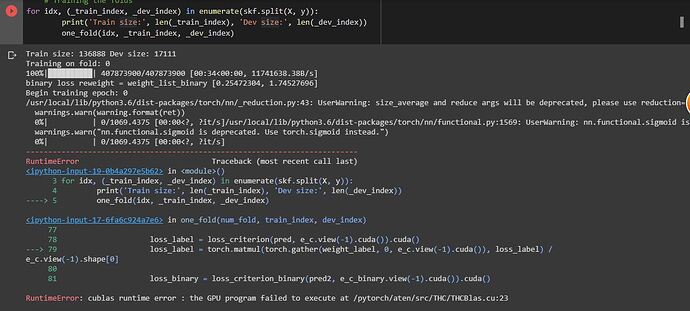
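Before digging into the model, it can help to list the versions PyTorch itself sees. The sketch below uses a hypothetical helper, `report_environment`. Note that `torch.version.cuda` reports the CUDA version the installed PyTorch binary was built against. With an Anaconda install, this need not match the CUDA 9.2 package installed from Debian's apt repository.

```python
import torch


def report_environment():
    """Collect version details that matter for a CUDA/cuBLAS failure."""
    info = {
        "torch": torch.__version__,
        # CUDA version this PyTorch build was compiled against
        # (None for CPU-only builds); not necessarily the system CUDA.
        "torch_cuda": torch.version.cuda,
        "cuda_available": torch.cuda.is_available(),
    }
    if torch.cuda.is_available():
        info["device_name"] = torch.cuda.get_device_name(0)
        # (major, minor) compute capability of the first GPU
        info["capability"] = torch.cuda.get_device_capability(0)
    return info


if __name__ == "__main__":
    for key, value in report_environment().items():
        print("%s: %s" % (key, value))
```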

The problem: the network works on the CPU, but when I try to put it on the GPU, it fails with:

```
Traceback (most recent call last):
  File "./do_training.py", line 95, in <module>
    batch_output = model(batch_input)
  File "/opt/anaconda3/lib/python3.6/site-packages/torch/nn/modules/module.py", line 493, in __call__
    result = self.forward(*input, **kwargs)
  File "/large/yangxi/MonotoneLearn/train_for_pitch/MyModel.py", line 32, in forward
    x = self.full_part.forward(x)
  File "/opt/anaconda3/lib/python3.6/site-packages/torch/nn/modules/container.py", line 92, in forward
    input = module(input)
  File "/opt/anaconda3/lib/python3.6/site-packages/torch/nn/modules/module.py", line 493, in __call__
    result = self.forward(*input, **kwargs)
  File "/opt/anaconda3/lib/python3.6/site-packages/torch/nn/modules/linear.py", line 92, in forward
    return F.linear(input, self.weight, self.bias)
  File "/opt/anaconda3/lib/python3.6/site-packages/torch/nn/functional.py", line 1406, in linear
    ret = torch.addmm(bias, input, weight.t())
RuntimeError: cublas runtime error : the GPU program failed to execute at /opt/conda/conda-bld/pytorch_1556653183467/work/aten/src/THC/THCBlas.cu:259
```

This error can appear when tensor dimensions mismatch. But the whole thing works on the CPU, so the tensor sizes should not be mismatched.
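One way to separate a shape problem from a CUDA/cuBLAS environment problem is to run only the failing operation in isolation. The sketch below uses a hypothetical helper, `compare_linear`, with the sizes from the logs: batch 10, 1025 features, and 128 outputs. It runs one `Linear` layer on the CPU and, if CUDA is available, runs the same weights on the GPU. A GPU test with no matrix multiply would not exercise cuBLAS at all.

If this tiny case also fails with the same cuBLAS error, that would suggest the problem lies in the CUDA/driver/PyTorch combination rather than in the model code.

```python
import copy

import torch


def compare_linear(batch_sz=10, in_features=1025, out_features=128):
    """Run one Linear layer on the CPU and, if available, on the GPU.

    Returns (cpu_out, gpu_out); gpu_out is None when CUDA is unavailable.
    """
    torch.manual_seed(0)
    layer = torch.nn.Linear(in_features, out_features)
    x = torch.randn(batch_sz, in_features)
    with torch.no_grad():
        # Reference result on the CPU, shape (batch_sz, out_features).
        cpu_out = layer(x)
        if not torch.cuda.is_available():
            return cpu_out, None
        # Identical weights and input on the GPU: this goes through the same
        # matrix-multiply (cuBLAS) path as the failing F.linear call.
        gpu_layer = copy.deepcopy(layer).to("cuda")
        gpu_out = gpu_layer(x.to("cuda")).cpu()
    return cpu_out, gpu_out


if __name__ == "__main__":
    cpu_out, gpu_out = compare_linear()
    print("CPU output shape:", tuple(cpu_out.shape))
    if gpu_out is None:
        print("CUDA not available; GPU path not tested")
    else:
        print("max |CPU - GPU| difference:", (cpu_out - gpu_out).abs().max().item())
```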

This is my source code:

do_training.py:

```python
#!/opt/anaconda3/bin/python3
import pysndfile
import optparse
import sys
import os
import re
import math
import random
import numpy
import fnmatch
import h5py
import torch
import MyModel

# parse options
opt_parser = optparse.OptionParser()
opt_parser.add_option("-d", "--data", dest="f_data", help="HDF5 file contains training data, with \"input\" and \"refout\" dataset.", metavar="FILE")
opt_parser.add_option("-i", "--in", dest="f_in", help="Existing network file to continue training.", metavar="FILE")
opt_parser.add_option("-c", "--conv-layers", dest="n_conv_layers", help="Number of 3-length convolution layers for creating new network.", type="int", metavar="NUM_LAYER")
opt_parser.add_option("-f", "--full-layers", dest="n_full_layers", help="Number of fully connected layers for creating new network.", type="int", metavar="NUM_LAYER")
opt_parser.add_option("-o", "--out", dest="f_out", help="Output file to store trained network.", metavar="FILE")
opt_parser.add_option("-t", "--iter", dest="n_iter", help="Number of training iterations to perform.", type="int", metavar="NUM_ITER", default=1000)
opt_parser.add_option("-b", "--batch", dest="batch_sz", help="Size of each training iteration.", type="int", metavar="BATCH_SIZE", default=10)
opt_parser.add_option("-D", "--device", dest="dev_type", help="Device. Default to CUDA when available, or CPU when no GPU is available.")
options,_ = opt_parser.parse_args()

if options.f_data is None:
    print("data file is not specified")
    exit(-1)
if options.f_in is None and options.n_full_layers is None:
    print("neither input file nor layer number is specified")
    exit(-1)
if options.f_out is None:
    print("output file is not specified")
    exit(-1)

# determine device
if options.dev_type is None:
    if torch.cuda.is_available():
        device = torch.device('cuda:0')
    else:
        device = torch.device('cpu')
else:
    device = torch.device(options.dev_type)

# read data properties
fh_data = h5py.File(options.f_data, 'r')
if "input" not in fh_data:
    print("no input in data file %s" % options.f_data)
    exit(-1)
if "refout" not in fh_data:
    print("no refout in data file %s" % options.f_data)
    exit(-1)
if len(fh_data["input"]) != len(fh_data["refout"]):
    print("inequal number of data: input %d, ref output %d" % (len(fh_data["input"]), len(fh_data["refout"])))
    exit(-1)

num_data = len(fh_data["input"])
input_size = len(fh_data["input"][0])
whole_input = torch.from_numpy(fh_data["input"].__array__()).to(device)
whole_refout = torch.from_numpy(fh_data["refout"].__array__()).to(device)
print(whole_input)
print(whole_refout)

# create model
model = None
if options.f_in is None:
    model = MyModel.MyModel(input_size, options.n_conv_layers, options.n_full_layers).to(device)
else:
    model = torch.load(options.f_in).to(device)
print(model)
print(model.parameters())

# do training
#fcri = torch.nn.NLLLoss()
cri = torch.nn.CrossEntropyLoss().to(device)
optimizer = torch.optim.SGD(model.parameters(), lr = 0.01)
batch_input = torch.empty( (options.batch_sz, input_size), dtype=torch.float32, device=device )
batch_refout = torch.empty( options.batch_sz, dtype=torch.long, device=device )

for i in range(options.n_iter):
    try:
        optimizer.zero_grad()
        for j in range(options.batch_sz):
            i_data = random.randint(0, num_data-1)
            batch_input[j] = whole_input[i_data]
            batch_refout[j] = whole_refout[i_data]
        batch_output = model(batch_input)
        loss = cri(batch_output, batch_refout)
        if i % 100 == 0:
            print( "iter %d: loss %f" % (i, float(loss)) )
        loss.backward()
        optimizer.step()
    except KeyboardInterrupt:
        print("early out by int at iter %d" % i)
        break

# save result
torch.save(model, options.f_out)
```

MyModel.py:

```python
import torch
import collections

class MyModel(torch.nn.Module):
    def __init__(self, input_sz, n_conv_layer, n_full_layer):
        super(MyModel, self).__init__()
        self.input_size = input_sz

        conv_layers = collections.OrderedDict()
        for i in range(n_conv_layer):
            conv_name = "conv_%d" % i
            conv_layers[conv_name] = torch.nn.Conv1d(1,1,3,padding=1)
        self.conv_part = torch.nn.modules.Sequential(conv_layers)

        full_layers = collections.OrderedDict()
        for i in range(n_full_layer-1):
            full_conn_name = "full_conn_%d" % i
            non_linear_name = "acti_%d" % i
            full_layers[full_conn_name] = torch.nn.Linear(input_sz,input_sz)
            full_layers[non_linear_name] = torch.nn.Tanh()
        full_layers["last_full_conn"] = torch.nn.Linear(input_sz, 128)
        #full_layers["last_acti"] = torch.nn.LogSoftmax()
        self.full_part = torch.nn.modules.Sequential(full_layers)

    def forward(self, x):
        print("input shape ",x.size())
        batch_sz = len(x)
        x = x.view(batch_sz, 1, self.input_size)
        x = self.conv_part.forward(x)
        print("convolution part output shape ",x.size())
        x = x.view(batch_sz, self.input_size)
        print("full connect part input shape ",x.size())
        x = self.full_part.forward(x)
        return x
```

I have added some logging to the forward function. Before it dies, I can see this output:

```
input shape torch.Size([10, 1025])
convolution part output shape torch.Size([10, 1, 1025])
full connect part input shape torch.Size([10, 1025])
```

This is as expected.
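PyTorch's `register_forward_hook` can record the input and output shape of every layer, as an alternative to print statements inside `forward`. That includes the final `Linear(input_sz, 128)` where the traceback ends. The sketch below uses two hypothetical helpers:

- `build_full_part` mirrors the `full_part` construction in `MyModel.py`.
- `trace_shapes` records the input and output shape of each layer.

It uses `input_sz=1025` from the logs and assumes two fully connected layers. It runs on the CPU and only checks shapes, so it cannot by itself reproduce the cuBLAS failure.

```python
import collections

import torch


def build_full_part(input_sz, n_full_layer):
    """Hypothetical stand-in mirroring MyModel's fully connected part."""
    layers = collections.OrderedDict()
    for i in range(n_full_layer - 1):
        layers["full_conn_%d" % i] = torch.nn.Linear(input_sz, input_sz)
        layers["acti_%d" % i] = torch.nn.Tanh()
    layers["last_full_conn"] = torch.nn.Linear(input_sz, 128)
    return torch.nn.Sequential(layers)


def trace_shapes(model, x):
    """Return (name, input shape, output shape) for every leaf module."""
    records = []
    handles = []
    for name, module in model.named_modules():
        if len(list(module.children())) > 0:
            continue  # skip containers such as Sequential
        # Bind the current name as a default argument so each hook keeps its own.
        def hook(mod, inputs, output, name=name):
            records.append((name, tuple(inputs[0].shape), tuple(output.shape)))
        handles.append(module.register_forward_hook(hook))
    try:
        with torch.no_grad():
            model(x)
    finally:
        # Remove the hooks so later forward passes are unaffected.
        for handle in handles:
            handle.remove()
    return records


if __name__ == "__main__":
    full_part = build_full_part(1025, 2)
    for row in trace_shapes(full_part, torch.randn(10, 1025)):
        print(row)
```

Hooks on the inner layers still fire when `MyModel.forward` calls `self.full_part.forward(x)` directly, because `Sequential` invokes each child through `module(input)`. The traceback's `container.py` frame shows this.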