I am using `torch.compile` on the `fastNLP_Bert` model.

The logs show the following:

```
V1010 11:47:33.109000 130925214240768 torch/_inductor/graph.py:1500] [5/0] Output code written to: /tmp/torchinductor_abhishek/xy/cxymc6c7zr6k5kpcynp3sgouehmub6kqljk3rhigwfluxnwqmiqp.py
V1010 11:47:33.110000 130925214240768 torch/_inductor/compile_fx.py:481] [5/0] FX codegen and compilation took 4.754s
```

When I try to run the generated output code as a standalone Python script:

```
/tmp/torchinductor_abhishek/xy$ python3 cxymc6c7zr6k5kpcynp3sgouehmub6kqljk3rhigwfluxnwqmiqp.py
```

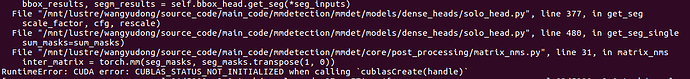

```
Traceback (most recent call last):
  File "/tmp/torchinductor_abhishek/xy/cxymc6c7zr6k5kpcynp3sgouehmub6kqljk3rhigwfluxnwqmiqp.py", line 1718, in <module>
    compiled_module_main('fastNLP_Bert', benchmark_compiled_module)
  File "/home/abhishek/pytorch-benchmarks/lib/python3.10/site-packages/torch/_inductor/wrapper_benchmark.py", line 283, in compiled_module_main
    wall_time_ms = benchmark_compiled_module_fn(times=times, repeat=repeat) * 1000
  File "/tmp/torchinductor_abhishek/xy/cxymc6c7zr6k5kpcynp3sgouehmub6kqljk3rhigwfluxnwqmiqp.py", line 1713, in benchmark_compiled_module
    return print_performance(fn, times=times, repeat=repeat)
  File "/home/abhishek/pytorch-benchmarks/lib/python3.10/site-packages/torch/_inductor/utils.py", line 331, in print_performance
    timings = torch.tensor([timed(fn, args, times, device) for _ in range(repeat)])
  File "/home/abhishek/pytorch-benchmarks/lib/python3.10/site-packages/torch/_inductor/utils.py", line 331, in <listcomp>
    timings = torch.tensor([timed(fn, args, times, device) for _ in range(repeat)])
  File "/home/abhishek/pytorch-benchmarks/lib/python3.10/site-packages/torch/_inductor/utils.py", line 320, in timed
    result = model(*example_inputs)
  File "/tmp/torchinductor_abhishek/xy/cxymc6c7zr6k5kpcynp3sgouehmub6kqljk3rhigwfluxnwqmiqp.py", line 1712, in <lambda>
    fn = lambda: call([arg0_1, arg1_1, arg2_1, arg3_1, arg4_1, arg5_1, arg6_1, arg7_1, arg8_1, arg9_1, arg10_1, arg11_1, arg12_1, arg13_1, arg14_1, arg15_1, arg16_1, arg17_1, arg18_1, arg19_1, arg20_1, arg21_1, arg22_1, arg23_1, arg24_1, arg25_1, arg26_1, arg27_1, arg28_1, arg29_1, arg30_1, arg31_1, arg32_1, arg33_1, arg34_1, arg35_1, arg36_1, arg37_1, arg38_1, arg39_1, arg40_1, arg41_1, arg42_1, arg43_1, arg44_1, arg45_1, arg46_1, arg47_1, arg48_1, arg49_1, arg50_1, arg51_1, arg52_1, arg53_1, arg54_1, arg55_1, arg56_1, arg57_1, arg58_1, arg59_1, arg60_1, arg61_1, arg62_1, arg63_1, arg64_1, arg65_1, arg66_1, arg67_1, arg68_1, arg69_1, arg70_1, arg71_1, arg72_1, arg73_1, arg74_1, arg75_1, arg76_1, arg77_1, arg78_1, arg79_1, arg80_1, arg81_1, arg82_1, arg83_1, arg84_1, arg85_1, arg86_1, arg87_1, arg88_1, arg89_1, arg90_1, arg91_1, arg92_1, arg93_1, arg94_1, arg95_1, arg96_1, arg97_1, arg98_1, arg99_1, arg100_1, arg101_1, arg102_1, arg103_1, arg104_1, arg105_1, arg106_1, arg107_1, arg108_1, arg109_1, arg110_1, arg111_1, arg112_1, arg113_1, arg114_1, arg115_1, arg116_1, arg117_1, arg118_1, arg119_1, arg120_1, arg121_1, arg122_1, arg123_1, arg124_1, arg125_1, arg126_1, arg127_1, arg128_1, arg129_1, arg130_1, arg131_1, arg132_1, arg133_1, arg134_1, arg135_1, arg136_1, arg137_1, arg138_1, arg139_1, arg140_1, arg141_1, arg142_1, arg143_1, arg144_1, arg145_1, arg146_1, arg147_1, arg148_1, arg149_1, arg150_1, arg151_1, arg152_1, arg153_1, arg154_1, arg155_1, arg156_1, arg157_1, arg158_1, arg159_1, arg160_1, arg161_1, arg162_1, arg163_1, arg164_1, arg165_1, arg166_1, arg167_1, arg168_1, arg169_1, arg170_1, arg171_1, arg172_1, arg173_1, arg174_1, arg175_1, arg176_1, arg177_1, arg178_1, arg179_1, arg180_1, arg181_1, arg182_1, arg183_1, arg184_1, arg185_1, arg186_1, arg187_1, arg188_1, arg189_1, arg190_1, arg191_1, arg192_1, arg193_1, arg194_1, arg195_1, arg196_1, arg197_1, arg198_1, arg199_1, arg200_1, arg201_1])
  File "/tmp/torchinductor_abhishek/xy/cxymc6c7zr6k5kpcynp3sgouehmub6kqljk3rhigwfluxnwqmiqp.py", line 722, in call
    extern_kernels.mm(reinterpret_tensor(buf4, (486400, 768), (768, 1), 0), reinterpret_tensor(arg5_1, (768, 768), (1, 768), 0), out=buf5)
RuntimeError: CUDA error: CUBLAS_STATUS_NOT_INITIALIZED when calling `cublasCreate(handle)`
```
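`CUBLAS_STATUS_NOT_INITIALIZED` from `cublasCreate` is commonly reported when the GPU is already out of memory at the moment the cuBLAS handle is created, so one possibility worth ruling out (not a confirmed diagnosis) is that the standalone benchmark, which allocates its own inputs and buffers, has exhausted GPU memory by the time it reaches this first `mm`. The rough sketch below estimates the size of the buffers in the failing call from the shapes in the traceback. The helper names are hypothetical, and the dtype is an assumption: the traceback does not show it, so float32 (4 bytes per element) is used by default.

```python
# Hypothetical helpers: estimate the memory footprint of the dense buffers
# touched by the failing extern_kernels.mm call in the generated file.
# ASSUMPTION: float32 (4 bytes/element) by default; the traceback does not show the dtype.
from math import prod

BYTES_PER_ELEMENT = {"float32": 4, "float16": 2, "bfloat16": 2}


def buffer_bytes(shape: tuple[int, ...], dtype: str = "float32") -> int:
    """Size in bytes of a contiguous tensor with the given shape and dtype."""
    return prod(shape) * BYTES_PER_ELEMENT[dtype]


def gib(n_bytes: int) -> float:
    """Convert bytes to GiB (2**30 bytes)."""
    return n_bytes / 2**30


if __name__ == "__main__":
    # Shapes copied from the mm call on line 722 of the generated file.
    lhs = buffer_bytes((486400, 768))  # reinterpret_tensor(buf4, (486400, 768), ...)
    rhs = buffer_bytes((768, 768))     # reinterpret_tensor(arg5_1, (768, 768), ...)
    out = buffer_bytes((486400, 768))  # buf5, the mm output
    print(f"lhs {gib(lhs):.2f} GiB, rhs {gib(rhs):.4f} GiB, out {gib(out):.2f} GiB")
```

Under the float32 assumption, each of the two (486400, 768) buffers is about 1.39 GiB. Comparing that against the free memory reported by `torch.cuda.mem_get_info()` or `nvidia-smi` just before the call would show whether memory pressure is plausible here.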


I get the above error. I assume the output code can be run standalone, since I have tried other output code files that do not call `extern_kernels`, and they run fine.

Note: the full application compiled with `torch.compile` works fine.