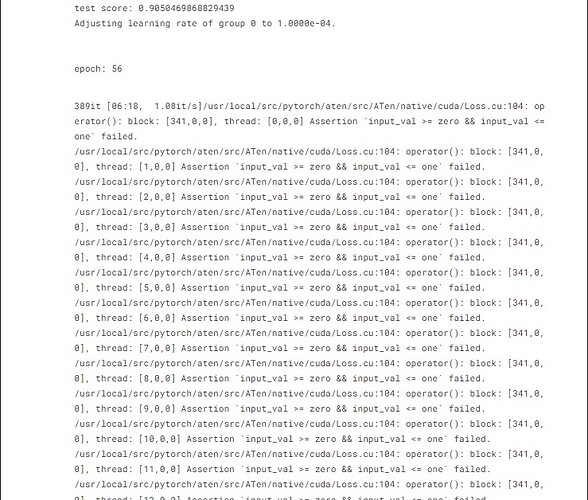

So my model was training and converging just fine on both the training and test sets, and then suddenly at the 56th epoch it threw a `device-side assert triggered` error.

Here’s an image:

I’m performing a binary segmentation task.

I have checked and confirmed that the input values to my loss function are indeed within the range (0, 1), since the final layer of my segmentation network is a sigmoid.
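
For reference, a check of this kind could look like the minimal sketch below. The helper `check_loss_inputs` and the names `pred` and `target` are hypothetical and are not taken from the actual training code; the sketch only assumes both tensors are floating point.

```python
import torch


def check_loss_inputs(pred: torch.Tensor, target: torch.Tensor) -> None:
    """Fail on the host with a readable message before the loss is computed.

    Hypothetical helper for illustration; not part of PyTorch.
    """
    for name, t in (("pred", pred), ("target", target)):
        # Test for NaN/Inf first: comparisons against NaN are always False,
        # so a plain min/max range check would silently pass on NaN.
        if not torch.isfinite(t).all():
            raise ValueError(f"{name} contains NaN or Inf")
        lo, hi = t.min().item(), t.max().item()
        if lo < 0.0 or hi > 1.0:
            raise ValueError(f"{name} outside [0, 1]: min={lo}, max={hi}")
```

Calling this on every batch right before the loss would turn a bad value into an ordinary Python exception that names the offending tensor. The `.item()` calls force a GPU synchronization, so it is probably only worth enabling while debugging.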

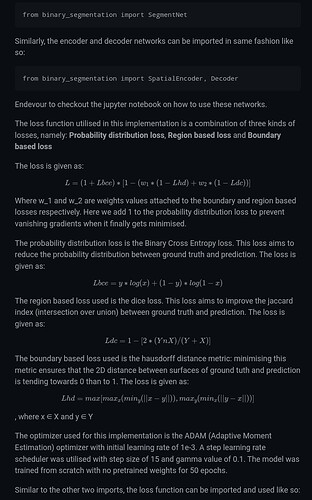

I devised a loss function specific to my problem after some research. It is a combination of BCELoss, Hausdorff distance, and Dice loss, like so:

Both the input and the output of the model are float32.

Initially I presumed that the model might be outputting NaN values, but if it were, I would expect the error to point to the loss computation and not to the `batch_loss.backward()` call.
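
One way this presumption could be tested: CUDA kernels are launched asynchronously, so the Python line shown in the traceback is not necessarily the line whose kernel failed. Setting `CUDA_LAUNCH_BLOCKING=1` makes launches synchronous, which should make the traceback point at the real call. The sketch below is only an illustration; `first_nonfinite_grad` is a hypothetical helper, not a PyTorch function, and `model` stands for any `torch.nn.Module`.

```python
import os

# Must be set before CUDA is initialized; setting it before importing
# torch is the safest option. It has no effect on CPU-only runs.
os.environ["CUDA_LAUNCH_BLOCKING"] = "1"

import torch


def first_nonfinite_grad(model: torch.nn.Module):
    """Return the name of the first parameter whose gradient has NaN/Inf.

    Hypothetical helper for illustration. Returns None if all gradients
    that exist are finite.
    """
    for name, param in model.named_parameters():
        if param.grad is not None and not torch.isfinite(param.grad).all():
            return name
    return None
```

Calling `first_nonfinite_grad(model)` after each `backward()` would show whether gradients go bad an iteration before the assert fires. Note that a NaN also fails a `0 <= x <= 1` comparison, so NaN outputs and out-of-range outputs can look the same from the error message alone.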

I tried to reproduce the error with just a few hundred samples, because it takes hours for this model to train on all samples and get to this point, but with those few hundred samples it ran fine and didn’t encounter any error.

Has anyone experienced this before?

What could be the issue? @ptrblck