How can I solve the problem above? Thanks!

The error shouldn’t be raised if your device has enough memory.

Could you explain a bit more why you think there is enough memory and how you’ve estimated the memory requirement needed in your script?

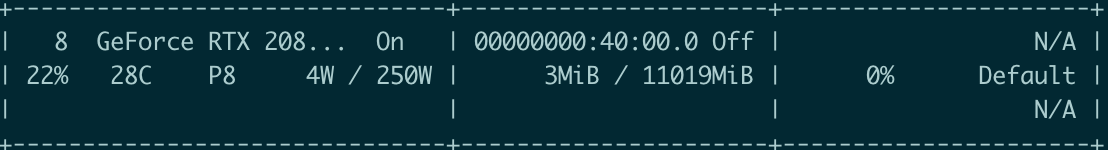

I confirmed with the nvidia-smi command that gpu-8 is not in use.

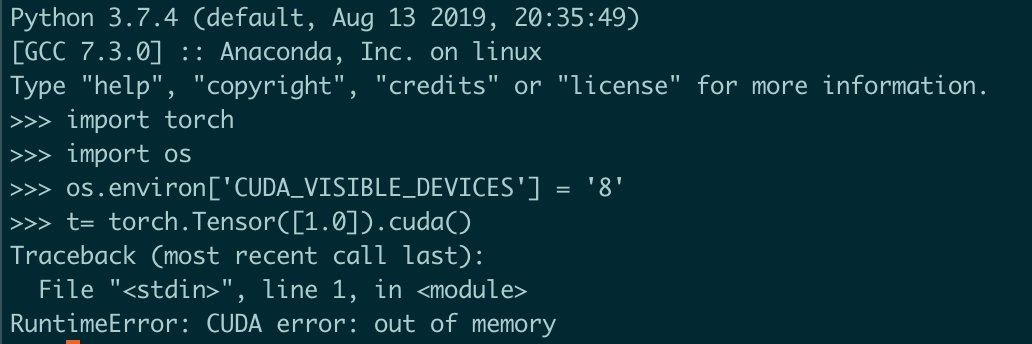

Even just creating a simple tensor with torch.Tensor([1.0]).cuda() raises “RuntimeError: CUDA error: out of memory”.

@ptrblck

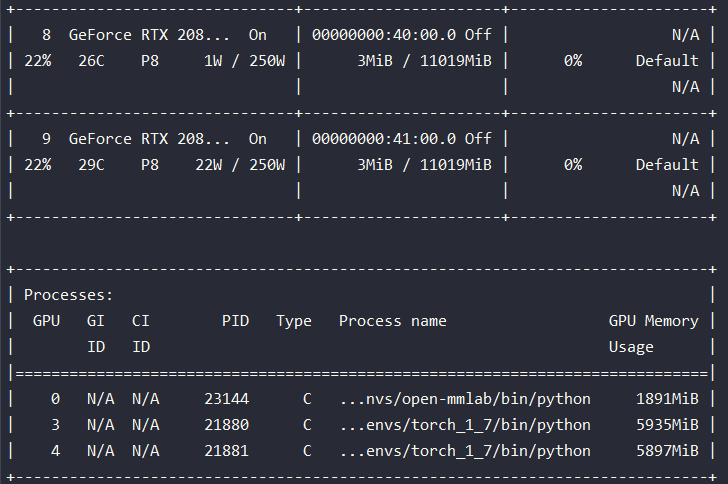

I ran nvidia-smi, and the output is as follows:

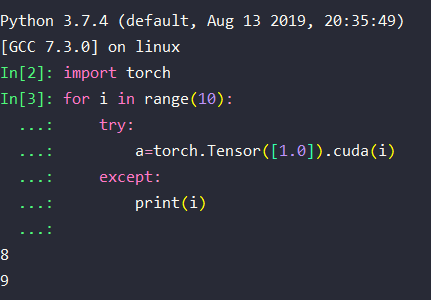

Then I tried to create a tensor on each GPU with:

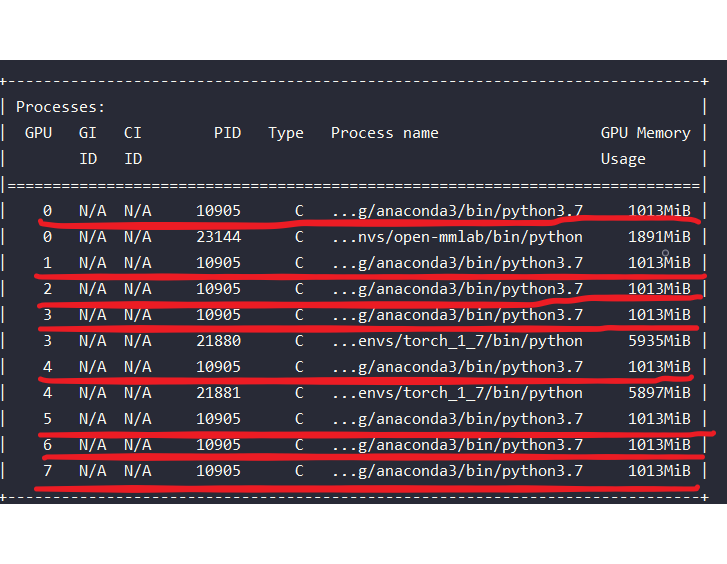

As the output shows, gpu-0 through gpu-7 can allocate a tensor successfully, but gpu-8 and gpu-9 raise a “CUDA out of memory” error even though they have sufficient free memory.
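For anyone who wants to reproduce this kind of per-device check as text rather than a screenshot, here is a rough sketch. It is not the exact code from the image above. The helper names `probe_gpus` and `torch_allocate` are made up for illustration, and it assumes a PyTorch version that provides `torch.cuda.mem_get_info`.

```python
def probe_gpus(device_ids, allocate):
    """Call allocate(i) for each device id.

    Returns {id: None} on success or {id: error message} on failure.
    `allocate` is any callable that raises RuntimeError when it fails.
    """
    results = {}
    for i in device_ids:
        try:
            allocate(i)
            results[i] = None
        except RuntimeError as exc:  # CUDA out-of-memory errors are RuntimeErrors
            results[i] = str(exc)
    return results


def torch_allocate(i):
    """Hypothetical allocator: report free memory, then create a tiny tensor."""
    # Imported lazily so probe_gpus itself does not depend on PyTorch.
    import torch

    # Free and total memory in bytes, as reported for device i.
    free, total = torch.cuda.mem_get_info(i)
    print(f"cuda:{i} free={free / 2**20:.0f} MiB total={total / 2**20:.0f} MiB")
    torch.tensor([1.0], device=f"cuda:{i}")
```

To run it on a real machine, something like `probe_gpus(range(torch.cuda.device_count()), torch_allocate)` should list which device indices fail and with what message, which is easier to paste into a reply than an image.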