I am trying to run a simple RNN model with LSTM units, but I am getting a CUDA error (the same code works fine on the CPU).

The RNN model is as follows:

```python
class BiRNN(nn.Module):
    ...
    ...

    def forward(self, x):
        # Set initial states
        h0 = Variable(torch.zeros(self.num_layers*2, x.size(0), self.hidden_size))  # 2 for bidirection
        c0 = Variable(torch.zeros(self.num_layers*2, x.size(0), self.hidden_size))

        # Forward propagate RNN
        out, _ = self.lstm(x, (h0, c0))

        # Decode hidden state of last time step
        out = self.fc(out[:, -1, :])
        return out
```
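In the `forward` above, `h0` and `c0` are created with `torch.zeros(...)`, which allocates on the CPU, while `rnn.cuda()` only moves the module's registered parameters and buffers to the GPU; tensors created inside `forward` are not moved. Whether this is what triggers the cuDNN failure here is not confirmed, but mixing devices is worth ruling out. A minimal sketch that allocates the states on `x.device` instead, assuming PyTorch 0.4+ (where `Variable` is no longer needed and factory functions accept `device=`). The question elides `__init__`, so the layer settings below (`batch_first=True`, `bidirectional=True`, a single regression output) are assumptions inferred from `out[:, -1, :]` and the `(N, 1)` labels.

```python
import torch
import torch.nn as nn


class BiRNN(nn.Module):
    # Hypothetical __init__: the original is elided, so these layer choices
    # (batch_first=True, bidirectional=True, one output) are assumptions.
    def __init__(self, input_size, hidden_size, num_layers):
        super(BiRNN, self).__init__()
        self.hidden_size = hidden_size
        self.num_layers = num_layers
        self.lstm = nn.LSTM(input_size, hidden_size, num_layers,
                            batch_first=True, bidirectional=True)
        self.fc = nn.Linear(hidden_size * 2, 1)  # 2 for bidirection

    def forward(self, x):
        # Allocate the initial states on the same device (and dtype) as the
        # input, so a model moved with .cuda() never receives CPU states.
        h0 = torch.zeros(self.num_layers * 2, x.size(0), self.hidden_size,
                         device=x.device, dtype=x.dtype)
        c0 = torch.zeros_like(h0)  # same shape, device and dtype as h0
        out, _ = self.lstm(x, (h0, c0))
        # Decode the hidden state of the last time step.
        return self.fc(out[:, -1, :])


device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
model = BiRNN(input_size=1, hidden_size=8, num_layers=2).to(device)
x = torch.randn(4, 10, 1, device=device)  # (batch, seq_len, features)
print(model(x).shape)  # torch.Size([4, 1])
```

Because `nn.LSTM` fills in zero initial states when none are passed, calling `self.lstm(x)` without `(h0, c0)` is an equivalent alternative that sidesteps the device question entirely.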

When I run the forward pass in the training loop:

```python
rnn = BiRNN(input_size, hidden_size, num_layers)
rnn.cuda()

# Loss and Optimizer
criterion = nn.MSELoss()
optimizer = torch.optim.Adam(rnn.parameters(), lr=learning_rate)

for epoch in range(100):
    for data in zip(train_loader_x, train_loader_y):
        # Forward + Backward + Optimize
        optimizer.zero_grad()
        # X_train_ = np.reshape(data[0], (data[0].shape[0], data[0].shape[1], 1))  # as dimension is 2
        X_train_ = Variable(data[0].float().cuda())
        temp = data[1].float().cuda()
        labels = Variable(temp.view(temp.numel(), 1))
        outputs = rnn(X_train_)
        loss = criterion(outputs, labels)
        loss.backward()
        optimizer.step()
        print("Loss is {}".format(loss.data[0]))
```
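The loop above moves each batch with `.cuda()` by hand. A device-agnostic version of one training step might look like the sketch below, assuming PyTorch 0.4+: every tensor goes through `.to(device)`, and the loss is read with `loss.item()`, the 0.4 replacement for `loss.data[0]` on 0-dim tensors. `train_step` and the stand-in `LastStepLinear` model are hypothetical names used only for this illustration.

```python
import torch
import torch.nn as nn


class LastStepLinear(nn.Module):
    # Hypothetical stand-in model for this sketch only: a linear layer
    # applied to the last time step of a (batch, seq_len, features) input.
    def __init__(self, input_size):
        super(LastStepLinear, self).__init__()
        self.fc = nn.Linear(input_size, 1)

    def forward(self, x):
        return self.fc(x[:, -1, :])


def train_step(model, optimizer, criterion, x_batch, y_batch, device):
    """One forward/backward/update step with every tensor moved to `device`."""
    model.train()
    x = x_batch.float().to(device)
    # Flatten targets to (N, 1), mirroring temp.view(temp.numel(), 1) above.
    y = y_batch.float().to(device).view(-1, 1)
    optimizer.zero_grad()
    outputs = model(x)
    loss = criterion(outputs, y)
    loss.backward()
    optimizer.step()
    # .item() returns the 0-dim loss as a Python float.
    return loss.item()


device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
model = LastStepLinear(input_size=1).to(device)
criterion = nn.MSELoss()
optimizer = torch.optim.Adam(model.parameters(), lr=1e-3)

x_batch = torch.randn(4, 10, 1)  # (batch, seq_len, features), still on the CPU
y_batch = torch.randn(4)
loss_value = train_step(model, optimizer, criterion, x_batch, y_batch, device)
print("Loss is {}".format(loss_value))
```

Keeping the device in one `device` variable means the same script runs on the CPU and the GPU without edits, which makes CPU-versus-GPU comparisons like the one in this question easier.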

I am getting the following error:

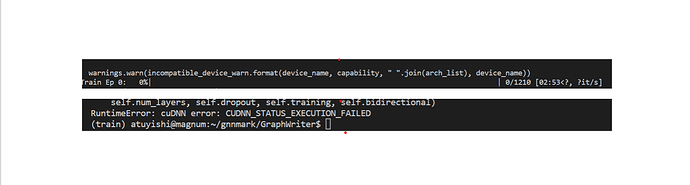

RuntimeError: CUDNN_STATUS_EXECUTION_FAILED

```
  File "D:\Users\Saurabh\Anaconda2\envs\pytorch_gpu1\lib\site-packages\spyder\utils\site\sitecustomize.py", line 705, in runfile
    execfile(filename, namespace)
  File "D:\Users\Saurabh\Anaconda2\envs\pytorch_gpu1\lib\site-packages\spyder\utils\site\sitecustomize.py", line 102, in execfile
    exec(compile(f.read(), filename, 'exec'), namespace)
  File "D:/Users/Saurabh/Documents/thesis/codes/RNN_power_predict_temp.py", line 118, in <module>
    outputs = rnn(X_train_)
  File "D:\Users\Saurabh\Anaconda2\envs\pytorch_gpu1\lib\site-packages\torch\nn\modules\module.py", line 491, in __call__
    result = self.forward(*input, **kwargs)
  File "D:/Users/Saurabh/Documents/thesis/codes/RNN_power_predict_temp.py", line 96, in forward
    out, _ = self.lstm(x, (h0, c0))
  File "D:\Users\Saurabh\Anaconda2\envs\pytorch_gpu1\lib\site-packages\torch\nn\modules\module.py", line 491, in __call__
    result = self.forward(*input, **kwargs)
  File "D:\Users\Saurabh\Anaconda2\envs\pytorch_gpu1\lib\site-packages\torch\nn\modules\rnn.py", line 192, in forward
    output, hidden = func(input, self.all_weights, hx, batch_sizes)
  File "D:\Users\Saurabh\Anaconda2\envs\pytorch_gpu1\lib\site-packages\torch\nn\_functions\rnn.py", line 323, in forward
    return func(input, *fargs, **fkwargs)
  File "D:\Users\Saurabh\Anaconda2\envs\pytorch_gpu1\lib\site-packages\torch\nn\_functions\rnn.py", line 287, in forward
    dropout_ts)
RuntimeError: CUDNN_STATUS_EXECUTION_FAILED
```
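`CUDNN_STATUS_EXECUTION_FAILED` comes from cuDNN and says little about the underlying cause, and because CUDA kernels run asynchronously, the Python frame in a CUDA traceback is not always the call that actually failed. A sketch of two standard PyTorch debugging switches, to be placed at the very top of the script before anything touches the GPU: `CUDA_LAUNCH_BLOCKING=1` makes kernel launches synchronous so the error surfaces at the failing call, and `torch.backends.cudnn.enabled = False` routes the LSTM through PyTorch's non-cuDNN implementation, which may report a more descriptive error (for example, a device or type mismatch).

```python
import os

# Must be set before CUDA is initialised, so set it before importing torch.
os.environ["CUDA_LAUNCH_BLOCKING"] = "1"

import torch

# Use PyTorch's native (non-cuDNN) kernels for this run.
torch.backends.cudnn.enabled = False
```

Both switches slow training down and are meant only for diagnosis; they can be removed once the failing operation has been identified.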

I tried some of the solutions discussed here 1, 2, but I still get the same error. I also ran a sample program, and it works fine on the GPU.

I am using Windows 10, an NVIDIA GeForce GTX 1050 Ti, CUDA 9.1, and PyTorch 0.4.0.
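The CUDA version a PyTorch binary actually uses is the one it was built against, which can differ from the system-wide toolkit (here listed as CUDA 9.1). A short sketch that prints what the installed PyTorch itself reports, using only standard `torch` attributes; on a CPU-only build the CUDA and cuDNN entries print `None`.

```python
import torch

print("PyTorch:", torch.__version__)
print("CUDA (PyTorch build):", torch.version.cuda)
print("cuDNN:", torch.backends.cudnn.version())
print("CUDA available:", torch.cuda.is_available())
if torch.cuda.is_available():
    # Name of the first visible GPU.
    print("GPU:", torch.cuda.get_device_name(0))
```

Including this output alongside the OS and GPU details makes it easier to spot a mismatch between the driver, the CUDA runtime, and cuDNN.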

The whole program is here: https://github.com/saurbkumar/rnn_test/blob/master/test.py