I am trying to fine-tune a modified version of the BLIP-2 model on a custom dataset, training with mixed precision. As far as I can tell, the error occurs at `pooled_feature_map = adaptive_pool(reshaped_feature_map)` in the following code:

```python
import torch.nn as nn
from torch.cuda.amp import autocast  # assumed import; matches autocast(enabled=False)

# upsampled_feature_map holds batch_size * 1280 * 32 * 32 elements
# Reshape the upsampled feature map to combine the channel and spatial dimensions
reshaped_feature_map = upsampled_feature_map.view(batch_size, 1280 * 32 * 32).float()
reshaped_feature_map = reshaped_feature_map.contiguous()

# Adaptive pooling to match the target number of elements (257 * 1408)
adaptive_pool = nn.AdaptiveAvgPool1d(257 * 1408)

with autocast(enabled=False):  # Force full precision
    pooled_feature_map = adaptive_pool(reshaped_feature_map)
    # also tried: adaptive_pool(reshaped_feature_map.float())

pooled_feature_map = pooled_feature_map.half()

# Final reshape to the target shape (257, 1408)
image_embeds = pooled_feature_map.view(batch_size, 257, 1408)
```
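For reference, `nn.AdaptiveAvgPool1d` accepts either a batched `(N, C, L)` or an unbatched `(C, L)` input, so the 2-D tensor above is read as 8 channels of length 1280 * 32 * 32 = 1,310,720 rather than as a batch of 8. A minimal sketch on small, illustrative sizes (assumed, not the model's) shows that the 2-D call and an explicit `(N, 1, L)` call give the same result, and that when the length divides evenly each output is a plain mean over a contiguous window:

```python
import torch
import torch.nn as nn

# Illustrative sizes only: 2 samples, flattened length 12, pooled to 4.
batch_size, length, target = 2, 12, 4
x = torch.randn(batch_size, length)

pool = nn.AdaptiveAvgPool1d(target)

# 2-D input is treated as unbatched (C, L): each row is pooled independently.
out_2d = pool(x)

# Explicit batched layout (N, C=1, L), then drop the channel dim again.
out_3d = pool(x.unsqueeze(1)).squeeze(1)

# When length % target == 0, each output is the mean of a window of length // target.
manual = x.view(batch_size, target, length // target).mean(dim=-1)

print(out_2d.shape)                     # torch.Size([2, 4])
print(torch.allclose(out_2d, out_3d))   # True
print(torch.allclose(out_2d, manual))   # True
```

With the real sizes the length does not divide evenly (1,310,720 / 361,856 ≈ 3.62), so each output averages a window of 4 or 5 elements and neighbouring windows overlap.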

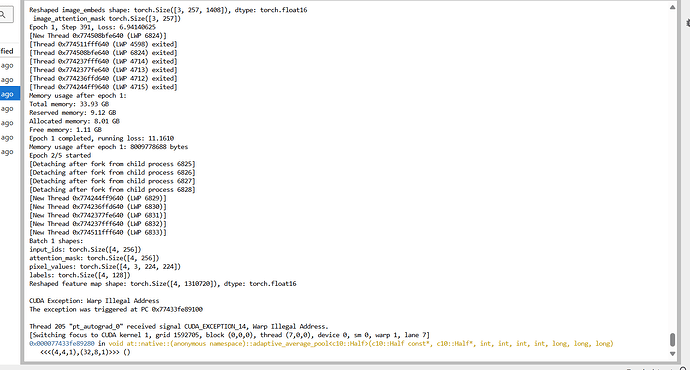

The first training step completes, and the crash happens while processing the second batch. Output from the debugger:

```
Epoch 1, Step 1, Loss: 14.890625
[New Thread 0x7af8253ff640 (LWP 7201)]
Batch 2 shapes:
input_ids: torch.Size([8, 256])
attention_mask: torch.Size([8, 256])
pixel_values: torch.Size([8, 3, 224, 224])
labels: torch.Size([8, 128])

CUDA Exception: Warp Illegal Address
The exception was triggered at PC 0x7af699eb1230
Thread 205 "pt_autograd_0" received signal CUDA_EXCEPTION_14, Warp Illegal Address.
[Switching focus to CUDA kernel 5, grid 5392, block (0,0,0), thread (7,0,0), device 0, sm 0, warp 1, lane 7]
0x00007af699eb1370 in void at::native::(anonymous namespace)::adaptive_average_pool(float const*, float*, int, int, int, int, long, long, long)<<<(8,2,1),(32,8,1)>>> ()
```
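Because CUDA errors are reported asynchronously and the signal here was delivered to the `pt_autograd_0` thread, it may help to run the same pooling in isolation, forward and backward, at the exact sizes from the snippet. The sketch below is a hypothetical standalone repro script, not part of the model code; it uses a CUDA device when one is available (falling back to CPU otherwise) and sets `CUDA_LAUNCH_BLOCKING=1` so that a failing kernel is reported at the call that launched it.

```python
import os

# Must be set before CUDA is initialised so kernel launches run synchronously.
os.environ.setdefault("CUDA_LAUNCH_BLOCKING", "1")

import torch
import torch.nn as nn

device = "cuda" if torch.cuda.is_available() else "cpu"

# Same sizes as in the question: 8 x (1280 * 32 * 32) pooled to 257 * 1408.
batch_size = 8
in_len = 1280 * 32 * 32
out_len = 257 * 1408

x = torch.randn(batch_size, in_len, device=device, requires_grad=True)
pool = nn.AdaptiveAvgPool1d(out_len)

# Forward in float32 with autocast disabled, mirroring the original snippet.
with torch.autocast(device_type=device, enabled=False):
    y = pool(x.float())

image_embeds = y.view(batch_size, 257, 1408)

# Backward through the pooling as well, since the fault surfaced in the autograd thread.
image_embeds.sum().backward()
if device == "cuda":
    torch.cuda.synchronize()

print(image_embeds.shape, x.grad.shape)
```

If this runs cleanly on the GPU, the pooling kernel on its own is probably not the culprit, and the illegal access may originate from an earlier asynchronous error elsewhere in the training step.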